DynamoDB Accelerator (DAX): Complete Guide for 2025

Amazon DynamoDB Accelerator (DAX) is a fully managed, highly available in-memory cache designed specifically for DynamoDB. By integrating DAX into your application, you can achieve microsecond response times for cached reads, significantly improving performance for read-intensive workloads and, at sufficient scale, reducing costs. This comprehensive guide explains what DAX is, how it works, and how to implement it effectively in your applications.

Table of Contents

- What is DynamoDB Accelerator (DAX)?

- How DAX Works

- DAX Architecture

- Performance Benefits

- Implementing DAX

- Cache Strategies and Patterns

- Monitoring and Optimizing DAX

- Cost Considerations

- DAX vs Alternative Caching Solutions

- Use Cases

- Limitations and Considerations

- Best Practices

- Conclusion

What is DynamoDB Accelerator (DAX)?

Amazon DynamoDB Accelerator (DAX) is an in-memory caching service specifically designed for DynamoDB tables. Introduced by AWS in 2017, DAX serves as a read-through and write-through caching layer that sits between your application and DynamoDB, improving read performance by caching frequently accessed data.

Key characteristics of DAX include:

- Fully Managed Service: AWS handles all aspects of cluster deployment, maintenance, and scaling.

- In-Memory Cache: Stores data in memory for ultra-fast retrieval.

- API-Compatible: Uses the same DynamoDB API, minimizing code changes required for implementation.

- Write-Through Caching: Automatically updates the item cache when data is written through DAX.

- Microsecond Latency: Reduces response times from milliseconds to microseconds.

- High Availability: Multi-AZ deployment with primary and replica nodes.

- Scalable: Supports clusters of up to 10 nodes for increased throughput.

When to Use DAX

DAX is particularly valuable for:

- Read-Intensive Applications: Where the same data is repeatedly read

- Latency-Sensitive Workloads: Applications requiring the fastest possible response times

- Cost Optimization: Reducing DynamoDB read capacity units (RCUs) consumption

- Traffic Spikes: Handling sudden increases in read traffic without affecting DynamoDB performance

- Session Stores: Managing user session data with ultra-low latency

How DAX Works

DAX employs two distinct cache mechanisms working together:

1. Item Cache

The item cache stores the results of individual GetItem and BatchGetItem operations. When your application requests a specific item by its primary key, DAX first checks if that item exists in the cache:

- If the item is in the cache and not expired (cache hit), DAX returns it immediately without accessing DynamoDB.

- If the item is not in the cache (cache miss), DAX retrieves it from DynamoDB, stores it in the cache, and then returns it to the application.

The item cache uses the primary key of the requested items as the cache key.
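The hit/miss flow above can be modeled in a few lines. The sketch below is a toy read-through cache in the Node.js style used elsewhere in this guide, not DAX's implementation: `ReadThroughItemCache` is a hypothetical class, `loader` stands in for a GetItem call to DynamoDB, and the clock is injectable so TTL expiry can be exercised without waiting.

```javascript
// Toy model of a read-through item cache with TTL (illustration only, not DAX).
// `loader` stands in for a GetItem call to DynamoDB; `now` is an injectable clock.
class ReadThroughItemCache {
  constructor(loader, ttlMs, now = () => Date.now()) {
    this.loader = loader;
    this.ttlMs = ttlMs;
    this.now = now;
    this.entries = new Map(); // cache key -> { value, expiresAt }
    this.hits = 0;
    this.misses = 0;
  }

  async get(primaryKey) {
    // The primary key attributes serve as the cache key
    const key = JSON.stringify(primaryKey);
    const entry = this.entries.get(key);
    if (entry !== undefined && entry.expiresAt > this.now()) {
      this.hits += 1; // cache hit: the backing table is not contacted
      return entry.value;
    }
    this.misses += 1; // cache miss or expired entry: fetch, store, then return
    const value = await this.loader(primaryKey);
    this.entries.set(key, { value, expiresAt: this.now() + this.ttlMs });
    return value;
  }
}
```

Two reads of the same key within the TTL cause one loader call (a miss, then a hit); once the TTL has passed, the next read goes back to the loader. DAX performs this logic for you, so application code never needs such a class.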

2. Query Cache

The query cache stores the results of Query and Scan operations. When your application executes a Query or Scan:

- If the identical Query or Scan has been executed before and the results are still in the cache (cache hit), DAX returns the cached results.

- If the Query or Scan hasn’t been executed before or the results have expired (cache miss), DAX forwards the operation to DynamoDB, caches the results, and returns them to the application.

The query cache uses a hash of the query parameters (table name, index name, key conditions, filter expressions, etc.) as the cache key.
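To illustrate why near-identical requests do not share cached results, here is a rough model of a parameter-based key, assuming a SHA-256 hash over the serialized request. DAX's actual key derivation is internal; `queryCacheKey`, the `Orders` table, and the `customerId` attribute are hypothetical.

```javascript
const { createHash } = require('crypto');

// Hypothetical model of a query-cache key: a hash of the serialized request.
// DAX's real key derivation is internal; this only shows that any change in
// the parameters maps to a different cache entry.
function queryCacheKey(params) {
  return createHash('sha256').update(JSON.stringify(params)).digest('hex');
}

const base = {
  TableName: 'Orders',
  KeyConditionExpression: 'customerId = :c',
  ExpressionAttributeValues: { ':c': 'C-42' }
};

// Same parameters, same key: a repeat of this query can be served from cache
const sameQuery = queryCacheKey(base) === queryCacheKey({ ...base });

// A different key-condition value is a different cache entry
const otherValue = queryCacheKey(base) === queryCacheKey({
  ...base,
  ExpressionAttributeValues: { ':c': 'C-43' }
});

// Even an added Limit produces a separate entry
const withLimit = queryCacheKey(base) === queryCacheKey({ ...base, Limit: 10 });

console.log(sameQuery, otherValue, withLimit); // true false false
```

This model serializes properties in insertion order, so it is also sensitive to how the parameter object is assembled. Building each query through one shared helper keeps equivalent requests identical.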

Write Operations

DAX functions as a write-through cache:

- When your application writes data through DAX (using PutItem, UpdateItem, DeleteItem, etc.), DAX passes the request to DynamoDB.

- After DynamoDB acknowledges the successful write, DAX updates its item cache to reflect the change.

- Subsequent GetItem and BatchGetItem reads through DAX therefore return the new version. The query cache is not updated by writes: cached Query and Scan results keep returning the old data until their TTL expires.

Cache Consistency Model

DAX maintains eventual consistency with DynamoDB:

- Write-Through: Changes made through DAX are written to DynamoDB and then reflected in the DAX item cache; cached Query and Scan results are not updated.

- Cache Invalidation: DAX does not proactively monitor or pull updates from DynamoDB.

- TTL-Based Expiry: Items in the cache expire based on the configured time-to-live (TTL) setting.

- Eventual Consistency: The DAX cache might not reflect changes made directly to DynamoDB (bypassing DAX) until the cached items expire.
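These rules can be traced with a small in-memory model. `ToyDax` is hypothetical and deliberately simplified (one table, a single cached "query", an injectable clock); it is not how DAX is built, but it reproduces the behaviors listed above: a write through DAX refreshes the item cache but not the query cache, and a write that bypasses DAX stays invisible to cached reads until the TTL expires.

```javascript
// Toy model of the consistency rules described above (not the real DAX service).
class ToyDax {
  constructor(ttlMs, now) {
    this.table = new Map();      // stands in for the DynamoDB table
    this.itemCache = new Map();  // item key -> { value, expiresAt }
    this.queryCache = new Map(); // query key -> { value, expiresAt }
    this.ttlMs = ttlMs;
    this.now = now;
  }

  isFresh(entry) {
    return entry !== undefined && entry.expiresAt > this.now();
  }

  // GetItem through the cache: read-through on a miss or after expiry
  getItem(key) {
    const entry = this.itemCache.get(key);
    if (this.isFresh(entry)) return entry.value;
    const value = this.table.get(key);
    this.itemCache.set(key, { value, expiresAt: this.now() + this.ttlMs });
    return value;
  }

  // A "query" returning every value in the table, cached as one result set
  queryAll() {
    const entry = this.queryCache.get('all');
    if (this.isFresh(entry)) return entry.value;
    const value = [...this.table.values()];
    this.queryCache.set('all', { value, expiresAt: this.now() + this.ttlMs });
    return value;
  }

  // Write-through: write the table first, then refresh the item cache only
  putItem(key, value) {
    this.table.set(key, value);
    this.itemCache.set(key, { value, expiresAt: this.now() + this.ttlMs });
  }
}

let clock = 0;
const dax = new ToyDax(5 * 60 * 1000, () => clock); // 5-minute TTL
dax.table.set('a', 'v1');
dax.getItem('a'); // caches 'v1' in the item cache
dax.queryAll();   // caches ['v1'] in the query cache

dax.putItem('a', 'v2');                         // write through DAX
const itemAfterWriteThrough = dax.getItem('a'); // 'v2': item cache refreshed
const queryAfterWriteThrough = dax.queryAll();  // ['v1']: query cache still stale

dax.table.set('a', 'v3');                       // write that bypasses DAX
const itemAfterDirectWrite = dax.getItem('a');  // still 'v2' until the TTL expires

clock += 5 * 60 * 1000;                         // advance to the TTL boundary
const itemAfterExpiry = dax.getItem('a');       // 'v3'
```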

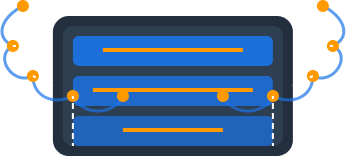

DAX Architecture

DAX is designed for high availability and scalability while maintaining simplicity of management.

Cluster Configuration

A DAX cluster consists of:

- Primary Node: Handles both read and write operations

- Replica Nodes: Handle read operations only (0-9 replicas allowed)

- Node Type: Determines the memory and compute capacity (e.g., dax.r4.large)

- Subnet Group: Defines which VPC subnets DAX uses

- Parameter Group: Controls cache behavior settings like TTL

High Availability

DAX provides high availability through:

- Multi-AZ Deployment: Nodes are distributed across Availability Zones

- Automatic Failover: If the primary node fails, a replica is promoted to primary

- Health Checks: Continuous monitoring of node health

- Self-Healing: Automatic replacement of failed nodes

Network Architecture

- VPC Integration: DAX clusters run within your Amazon VPC

- Endpoint: Each cluster has a discovery endpoint for client connections

- Port: Uses port 8111 for unencrypted connections and port 9111 when encryption in transit is enabled

- Security Groups: Control access to the DAX cluster

Diagram of DAX Architecture

```text
┌────────────────────────────────────────────────────────────────┐
│ Your VPC                                                       │
│                                                                │
│ ┌─Application──┐   ┌─DAX Cluster────────────────────────────┐  │
│ │ ┌──────────┐ │   │  ┌─Primary─┐  ┌─Replica─┐  ┌─Replica─┐ │  │
│ │ │App Server├─┼──►│  │  Node   │  │  Node   │  │  Node   │ │  │
│ │ └──────────┘ │   │  └─────────┘  └─────────┘  └─────────┘ │  │
│ │ ┌──────────┐ │   │                                        │  │
│ │ │App Server├─┼──►│                                        │  │
│ │ └──────────┘ │   │                                        │  │
│ └──────────────┘   └───────────────────┬────────────────────┘  │
│                                        │                       │
│                                        ▼                       │
│                          ┌────────────────────────────┐        │
│                          │       DynamoDB Tables      │        │
│                          └────────────────────────────┘        │
└────────────────────────────────────────────────────────────────┘
```

Cache Behavior

- TTL: Default is 5 minutes, configurable via parameter group

- LRU Policy: Least Recently Used items are evicted when the cache is full

- Item Expiration: Items automatically expire after the TTL period

- Cache Warming: DAX starts with an empty cache (cold start)
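A minimal sketch of the two eviction rules, TTL expiry and least-recently-used eviction. `LruTtlCache` is hypothetical and, for simplicity, bounds the cache by entry count, whereas a real cache is bounded by memory; it relies on JavaScript's `Map` preserving insertion order, so re-inserting a key on access marks it as most recently used.

```javascript
// Minimal LRU cache with a per-entry TTL (illustration only, not DAX internals).
class LruTtlCache {
  constructor(capacity, ttlMs, now = () => Date.now()) {
    this.capacity = capacity;
    this.ttlMs = ttlMs;
    this.now = now;
    this.map = new Map(); // insertion order = least to most recently used
  }

  get(key) {
    const entry = this.map.get(key);
    if (entry === undefined) return undefined;
    if (entry.expiresAt <= this.now()) {
      this.map.delete(key); // expired: treat as a miss
      return undefined;
    }
    this.map.delete(key);
    this.map.set(key, entry); // move to the most-recently-used end
    return entry.value;
  }

  set(key, value) {
    this.map.delete(key);
    if (this.map.size >= this.capacity) {
      // The first key in iteration order is the least recently used one
      const oldestKey = this.map.keys().next().value;
      this.map.delete(oldestKey);
    }
    this.map.set(key, { value, expiresAt: this.now() + this.ttlMs });
  }
}
```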

Performance Benefits

The primary benefit of DAX is the dramatic improvement in read performance:

Latency Improvement

- Without DAX: Single-digit millisecond latency (typically 1-10 ms)

- With DAX: Microsecond latency (typically 100-400 μs)

- Improvement Factor: Up to 10x or more for cached reads

Throughput Enhancement

- High Cache Hit Ratio: Significantly reduces the number of requests to DynamoDB

- Request Coalescing: Multiple identical requests can be served by a single cache entry

- Reduced Throttling: Fewer DynamoDB requests means less chance of throttling

- Consistent Performance: More predictable response times, even during traffic spikes

Real-World Performance Examples

| Operation | Without DAX | With DAX (Cache Hit) | Improvement Factor |
|---|---|---|---|
| GetItem | ~3 ms | ~300 μs | 10x |
| Query | ~5 ms | ~400 μs | 12.5x |
| Scan | ~100 ms | ~2 ms | 50x |

Note: Actual performance will vary based on specific workloads, item sizes, and network conditions.
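Cache misses still pay the DynamoDB round trip, so the average read latency depends heavily on the hit ratio h: average = h × t_hit + (1 - h) × t_miss. The sketch below evaluates that formula; the 0.3 ms hit and 3.3 ms miss latencies are assumptions loosely based on the GetItem row above (direct latency plus a small DAX hop), not measurements.

```javascript
// Expected average read latency for a given cache hit ratio h:
//   average = h * hitMs + (1 - h) * missMs
function blendedLatencyMs(hitRatio, hitMs, missMs) {
  if (hitRatio < 0 || hitRatio > 1) {
    throw new RangeError('hitRatio must be between 0 and 1');
  }
  return hitRatio * hitMs + (1 - hitRatio) * missMs;
}

// Illustrative inputs: ~0.3 ms for a hit, ~3.3 ms for a miss (assumed, not measured)
for (const h of [0.5, 0.9, 0.99]) {
  console.log(`hit ratio ${h}: ${blendedLatencyMs(h, 0.3, 3.3).toFixed(2)} ms`);
}
```

With these inputs, a 90% hit ratio averages about 0.6 ms, twice the latency of a hit, which is why raising the hit ratio often matters more than the speed of the cache itself.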

Implementing DAX

Integrating DAX with your application involves several steps:

1. Creating a DAX Cluster

You can create a DAX cluster using the AWS Management Console, AWS CLI, or AWS CloudFormation:

Using AWS CLI:

```bash
aws dax create-cluster \
    --cluster-name MyDAXCluster \
    --node-type dax.r5.large \
    --replication-factor 3 \
    --iam-role-arn arn:aws:iam::123456789012:role/DAXServiceRole \
    --subnet-group-name default \
    --security-group-ids sg-12345678 \
    --description "DAX cluster for my DynamoDB application"
```

Required Parameters:

- --cluster-name: A unique name for your DAX cluster

- --node-type: The compute and memory capacity for each node

- --replication-factor: Number of nodes in the cluster (1-10)

- --iam-role-arn: IAM role that allows DAX to access DynamoDB

2. Setting Up IAM Permissions

DAX requires specific IAM permissions:

Service Role for DAX:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "dynamodb:DescribeTable",
        "dynamodb:PutItem",
        "dynamodb:GetItem",
        "dynamodb:UpdateItem",
        "dynamodb:DeleteItem",
        "dynamodb:Query",
        "dynamodb:Scan",
        "dynamodb:BatchGetItem",
        "dynamodb:BatchWriteItem",
        "dynamodb:ConditionCheckItem"
      ],
      "Resource": [
        "arn:aws:dynamodb:region:account-id:table/TableName",
        "arn:aws:dynamodb:region:account-id:table/TableName/index/*"
      ]
    }
  ]
}
```

The role's trust policy must also allow the DAX service (dax.amazonaws.com) to assume it.

Client Role for Applications:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "dax:GetItem",
        "dax:PutItem",
        "dax:ConditionCheckItem",
        "dax:BatchGetItem",
        "dax:BatchWriteItem",
        "dax:DeleteItem",
        "dax:Query",
        "dax:UpdateItem",
        "dax:Scan"
      ],
      "Resource": "arn:aws:dax:region:account-id:cache/MyDAXCluster"
    }
  ]
}
```

3. Modifying Your Application Code

Integrating DAX requires minimal code changes thanks to API compatibility:

Node.js Example (AWS SDK for JavaScript v2 with the amazon-dax-client package):

```javascript
const AWS = require('aws-sdk');
const AmazonDaxClient = require('amazon-dax-client');

// Without DAX: a DocumentClient that talks to DynamoDB directly
const dynamodbClient = new AWS.DynamoDB.DocumentClient();

// With DAX: wrap the DAX client in a DocumentClient
const dax = new AmazonDaxClient({
  endpoints: ['dax-cluster-endpoint:8111'], // replace with your cluster endpoint
  region: 'us-west-2'
});
const daxClient = new AWS.DynamoDB.DocumentClient({ service: dax });

// Then use daxClient exactly as you would use the regular DocumentClient
async function getItem(id) {
  const params = {
    TableName: 'MyTable',
    Key: { ID: id }
  };

  // This request now goes through DAX
  const result = await daxClient.get(params).promise();
  return result.Item;
}
```

Java Example (AWS SDK for Java 1.x with the DAX client):

```java
import com.amazon.dax.client.dynamodbv2.AmazonDaxClientBuilder;
import com.amazonaws.regions.Regions;
import com.amazonaws.services.dynamodbv2.AmazonDynamoDB;
import com.amazonaws.services.dynamodbv2.AmazonDynamoDBClientBuilder;
import com.amazonaws.services.dynamodbv2.datamodeling.DynamoDBMapper;

// Without DAX
AmazonDynamoDB dynamoDbClient = AmazonDynamoDBClientBuilder.standard()
        .withRegion(Regions.US_WEST_2)
        .build();
DynamoDBMapper mapper = new DynamoDBMapper(dynamoDbClient);

// With DAX
AmazonDaxClientBuilder daxClientBuilder = AmazonDaxClientBuilder.standard();
daxClientBuilder.withRegion("us-west-2")
        .withEndpointConfiguration("dax-cluster-endpoint:8111");
AmazonDynamoDB daxClient = daxClientBuilder.build();
DynamoDBMapper daxMapper = new DynamoDBMapper(daxClient);

// Then use daxMapper exactly as you would use the regular mapper
```

4. Testing DAX Integration

After implementation, verify that your application is correctly using DAX:

- Monitor Cache Hit Ratio: Check the ItemCacheHits/ItemCacheMisses and QueryCacheHits/QueryCacheMisses CloudWatch metrics

- Verify Latency Improvement: Measure and compare response times

- Test Failover Scenarios: Ensure your application handles node failures correctly

- Load Testing: Validate performance under expected and peak loads
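For the latency comparison, a small timing helper is enough. `measureLatency` is a hypothetical helper that times sequential calls and reports nearest-rank percentiles in milliseconds; pass it a function that performs one read through DAX, then one that reads DynamoDB directly.

```javascript
const perfHooks = require('perf_hooks');

// Times `runs` sequential calls of an async function and reports latency
// percentiles in milliseconds (nearest-rank method).
async function measureLatency(fn, runs) {
  if (runs < 1) throw new RangeError('runs must be at least 1');
  const samples = [];
  for (let i = 0; i < runs; i += 1) {
    const start = perfHooks.performance.now();
    await fn(i);
    samples.push(perfHooks.performance.now() - start);
  }
  samples.sort((a, b) => a - b);
  const percentile = (p) => samples[Math.max(0, Math.ceil((p / 100) * samples.length) - 1)];
  return { p50: percentile(50), p99: percentile(99), max: samples[samples.length - 1] };
}
```

For example, compare `measureLatency(() => daxClient.get(params).promise(), 1000)` with the same call on the plain DocumentClient. Run the DAX measurement after a warm-up pass; otherwise the first calls are cache misses and inflate the percentiles.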

Cache Strategies and Patterns

Effective DAX usage involves thoughtful cache strategies:

Cache-Aside Pattern

DAX already implements read-through caching: on a miss, it fetches the item from DynamoDB, caches it, and returns it. A hand-written cache-aside layer on top of DAX is therefore unnecessary, and calling put on the DAX client is not a cache-only operation: it writes the item to the DynamoDB table as well. The part worth managing explicitly is a fallback to DynamoDB when DAX itself is unavailable:

```javascript
async function getItemWithFallback(id) {
  const params = {
    TableName: 'MyTable',
    Key: { ID: id }
  };

  try {
    // Read through DAX; on a cache miss DAX fetches the item from DynamoDB
    // and populates its item cache before returning
    const result = await daxClient.get(params).promise();
    return result.Item || null;
  } catch (error) {
    console.error('DAX request failed, falling back to DynamoDB:', error);
    // Fall back to reading DynamoDB directly (bypasses the cache)
    const dbResult = await dynamodbClient.get(params).promise();
    return dbResult.Item || null;
  }
}
```

Write-Around Pattern

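In some scenarios, you might want to write data directly to DynamoDB, bypassing DAX. DAX has no API for invalidating a cached item, so the cached copy (and any cached Query or Scan results that include it) stays until its TTL expires. Writing a "marker" item through the DAX client does not help: it would overwrite the real item in the table.

```javascript
async function writeAround(item) {
  // Write directly to DynamoDB; DAX is not involved
  await dynamodbClient.put({
    TableName: 'MyTable',
    Item: item
  }).promise();

  // No invalidation step is possible: readers going through DAX may see
  // the previous version of this item until its cache entry expires (TTL)
}
```

Read-Through Only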

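For read-heavy workloads with infrequent writes, you might want to use DAX only for reads. Because the writes bypass DAX, reads can return the previous version of an item until its cache entry expires, so this pattern suits data that tolerates staleness up to the TTL:

```javascript
// Read using DAX
async function readWithDAX(id) {
  const result = await daxClient.get({
    TableName: 'MyTable',
    Key: { ID: id }
  }).promise();
  return result.Item;
}

// Write directly to DynamoDB (the DAX cache is not updated)
async function writeDirectly(item) {
  await dynamodbClient.put({
    TableName: 'MyTable',
    Item: item
  }).promise();
}
```

Monitoring and Optimizing DAX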

Key Metrics to Monitor

DAX exposes several important CloudWatch metrics:

Cache Performance Metrics:

- ItemCacheHits and ItemCacheMisses

- QueryCacheHits and QueryCacheMisses

- Cache hit rate: not published as a single metric; derive it from the hit and miss counts (for example, with CloudWatch metric math)

Operational Metrics:

- CPUUtilization

- NetworkBytesIn and NetworkBytesOut

- EvictedSize (amount of data removed from the cache due to LRU policy)

- CacheMemoryUtilization

Error Metrics:

- ErrorRequestCount

- FaultRequestCount

- FailedRequestCount
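Because the hit rate has to be derived from the hit and miss counts, a small helper makes the calculation explicit. The sketch assumes you already have the Sum of each metric over the same period (for example, fetched from CloudWatch); `daxHitRates` is a hypothetical helper and the sample numbers are made up. In a CloudWatch alarm, the same ratio can be expressed with metric math as `hits / (hits + misses)`, where `hits` and `misses` are the IDs you assign to the two metrics.

```javascript
// Derives hit rates from DAX hit/miss counts (Sum statistics over one period).
// Returns null when there were no lookups instead of dividing by zero.
function hitRate(hits, misses) {
  const total = hits + misses;
  return total === 0 ? null : hits / total;
}

function daxHitRates(m) {
  return {
    item: hitRate(m.ItemCacheHits, m.ItemCacheMisses),
    query: hitRate(m.QueryCacheHits, m.QueryCacheMisses),
    combined: hitRate(m.ItemCacheHits + m.QueryCacheHits, m.ItemCacheMisses + m.QueryCacheMisses)
  };
}

// Example values for one 5-minute period (made up for illustration)
const rates = daxHitRates({
  ItemCacheHits: 9000,
  ItemCacheMisses: 1000,
  QueryCacheHits: 300,
  QueryCacheMisses: 700
});
console.log(rates);
```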

Setting Up CloudWatch Alarms

Set up alarms for critical metrics:

- Low Cache Hit Rate: Alarm when the derived hit rate (hits ÷ (hits + misses)) drops below a threshold

- High CPU Utilization: Alarm when CPUUtilization exceeds 70-80%

- High Memory Utilization: Alarm when CacheMemoryUtilization approaches 100%

- Error Spikes: Alarm on unexpected increases in error metrics

Optimizing DAX Performance

Right-Size Your Cluster:

- Choose an appropriate node type based on your memory requirements

- Scale horizontally by adding nodes for higher throughput

- Monitor memory utilization to avoid cache eviction

Tune TTL Settings:

- Default item cache TTL: 5 minutes

- Default query cache TTL: 5 minutes

- Adjust based on your data update frequency and consistency requirements

Optimize Client Configuration:

- Connection pooling

- Retry policy

- Timeout settings

Access Pattern Considerations:

- Design for cache efficiency (consistent parameter order in queries)

- Be aware of cache key generation for queries

- Minimize scan operations

Cost Considerations

DAX Pricing Model

DAX pricing has several components:

- Node Hourly Rate: Based on the node type (e.g., dax.r5.large)

- Data Transfer: Cost for data transferred out of DAX

- Reserved Nodes: Discounted pricing for 1- or 3-year commitments

As of 2025, approximate costs (US regions):

- dax.t3.small: $0.07 per hour (~$51 per month)

- dax.r5.large: $0.32 per hour (~$230 per month)

- dax.r5.24xlarge: $7.68 per hour (~$5,530 per month)

Cost Optimization Strategies

Right-Size Your Cluster:

- Start with a smaller node type and scale up as needed

- Monitor memory utilization to determine optimal size

Optimize Node Count:

- Single node for development/testing environments

- Minimum of three nodes for production (for high availability)

- Add nodes based on throughput requirements

Reserved Nodes:

- Use reserved nodes for predictable, steady-state workloads

- Save up to 60% compared to on-demand pricing

Reduce DynamoDB Costs:

- DAX reduces DynamoDB read costs by serving from cache

- Calculate ROI by comparing DAX cost vs. DynamoDB RCU savings

ROI Calculation Example

Let’s calculate the ROI of DAX for a read-heavy application:

Scenario:

- 10 million GetItem operations per day

- 90% cache hit rate with DAX

- Using provisioned capacity

Without DAX:

- 10 million reads per day ≈ 116 reads per second ≈ 116 RCUs (assuming evenly spread traffic, items of 4 KB or less, and one RCU per strongly consistent read; eventually consistent reads would need half as many)

- Cost: 116 RCUs × $0.00013 per RCU-hour × 24 hours × 30 days = ~$10.86/month

With DAX:

- 1 million reads to DynamoDB (10% cache misses) ≈ 12 RCUs

- Cost: 12 RCUs × $0.00013 per RCU-hour × 24 hours × 30 days = ~$1.12/month

- Plus: 3× dax.t3.small nodes = ~$153/month

- Total: ~$154/month

In this case, the direct cost is much higher with DAX, so the decision rests on whether microsecond read latency is worth the additional expense. DAX produces direct savings only when the RCUs it removes cost more than the cluster itself, which requires far higher read volumes than in this example.
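The scenario's arithmetic can be reproduced, and re-run for other volumes, with a small calculator. It encodes the same assumptions: evenly spread traffic, one RCU per read, $0.00013 per RCU-hour, 30-day months, and about $51 per dax.t3.small node per month. The function names are hypothetical.

```javascript
// Assumptions from the scenario: one RCU per read, evenly spread traffic,
// $0.00013 per provisioned RCU-hour, and 30-day months.
const RCU_HOUR_PRICE = 0.00013;
const HOURS_PER_MONTH = 24 * 30;

// Provisioned RCUs needed to serve a daily read volume (reads per second, rounded up)
function rcusFor(readsPerDay) {
  return Math.ceil(readsPerDay / 86400);
}

function monthlyRcuCost(rcus) {
  return rcus * RCU_HOUR_PRICE * HOURS_PER_MONTH;
}

function daxRoi({ readsPerDay, hitRate, nodes, nodeMonthlyCost }) {
  const withoutDax = monthlyRcuCost(rcusFor(readsPerDay));
  // Only cache misses reach DynamoDB when DAX is in front of it
  const dynamoWithDax = monthlyRcuCost(rcusFor(readsPerDay * (1 - hitRate)));
  const cluster = nodes * nodeMonthlyCost;
  return { withoutDax, withDax: dynamoWithDax + cluster, cluster };
}

const roi = daxRoi({ readsPerDay: 10e6, hitRate: 0.9, nodes: 3, nodeMonthlyCost: 51 });
console.log(roi.withoutDax.toFixed(2), roi.withDax.toFixed(2));
```

It reports about $10.86/month without DAX and about $154.12/month with DAX. Under the same assumptions, the RCU savings only cover the three-node cluster at roughly 157 million reads per day (about 1,800 reads per second).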

DAX vs Alternative Caching Solutions

DAX vs ElastiCache (Redis)

| Feature | DAX | ElastiCache (Redis) |
|---|---|---|
| Purpose | DynamoDB-specific cache | General-purpose cache |
| API Compatibility | DynamoDB API | Redis API |
| Implementation Effort | Minimal code changes | Custom cache logic needed |
| Consistency | Automatic write-through | Manual cache management |
| Data Types | DynamoDB items | Rich data structures |
| Expiration | TTL-based | TTL with more granular control |
| Query Caching | Built-in | Must be implemented |
| Use Cases | DynamoDB acceleration | Broader caching needs |

DAX vs Application-Level Caching

| Feature | DAX | Application Cache (e.g., Node.js in-memory) |
|---|---|---|
| Scalability | Cluster-based | Limited to application memory |
| Persistence | Survives application restarts | Lost on restart |
| Shared Cache | Across all application instances | Per-instance only |
| Management | Fully managed | Self-managed |
| Cost | Additional service cost | No direct cost |
| Implementation | SDK integration | Custom code |

When to Choose Each Solution

Choose DAX when:

- You're primarily accelerating DynamoDB access

- You want minimal code changes

- You need a shared cache across multiple application instances

- You prefer a fully managed solution

Choose ElastiCache when:

- You need more complex caching operations

- You're caching data from multiple sources

- You require advanced data structures (sorted sets, etc.)

- You need more control over expiration policies

Choose Application-Level Caching when:

- You have very small datasets

- You want to avoid additional AWS services

- You're optimizing for minimal latency

- Your caching needs are simple

Use Cases

E-Commerce Product Catalog

Challenge: A large e-commerce platform needed to display product details with sub-second response times, even during peak shopping events like Black Friday.

Solution: Implementing DAX allowed them to cache frequently accessed product information, reducing average response time from 5ms to 300μs.

Results:

- 95% cache hit rate during normal operation

- Sustained performance during 10x traffic spikes

- 80% reduction in DynamoDB read costs

- Improved customer experience with faster page loads

Gaming Leaderboards

Challenge: A mobile game company needed to display real-time global and regional leaderboards with minimal latency.

Solution: They used DAX to cache leaderboard queries, which significantly reduced the load on DynamoDB and improved response times.

Results:

- Leaderboard queries served in under 1ms

- Supported 50+ million daily active users

- Maintained performance consistency across global regions

- Reduced DynamoDB capacity by 70%

Session Storage

Challenge: A web application needed to store and retrieve session data for millions of concurrent users with minimal latency.

Solution: Using DAX as a session store provided microsecond access to session data while ensuring durability through the underlying DynamoDB table.

Results:

- Session data retrieval in ~200μs

- Seamless handling of session updates

- Automatic scaling during usage spikes

- Simplified architecture with one solution for both caching and persistence

IoT Data Processing

Challenge: An IoT platform needed to process and analyze sensor data from millions of devices while providing quick access to device status and history.

Solution: DAX was used to cache recent device states and commonly requested time-series data.

Results:

- Device state queries served in microseconds

- Supported 10x growth in connected devices

- Reduced DynamoDB RCU requirements by 65%

- Maintained performance during regional failovers

Limitations and Considerations

While DAX offers significant benefits, it’s important to understand its limitations:

Consistency Considerations

- Eventual Consistency Only: DAX caches only eventually consistent reads. Strongly consistent read requests are passed through to DynamoDB, and their results are not cached.

- TTL-Based Consistency: The cache consistency model relies on TTL expiration.

- Direct DynamoDB Updates: If you update DynamoDB directly (bypassing DAX), the DAX cache won’t be automatically updated until TTL expiration.

- Global Tables: DAX doesn’t automatically maintain consistency with DynamoDB Global Tables replicas in other regions.

Functional Limitations

- API Coverage: DAX supports most but not all DynamoDB API calls. For example, it doesn't support PartiQL operations, DynamoDB Streams operations, or table management (control-plane) operations such as CreateTable, which must be sent to DynamoDB directly. Transactional operations (TransactWriteItems and TransactGetItems) are supported.

- Item Size: DAX has the same 400KB item size limit as DynamoDB.

- VPC Requirement: DAX clusters must be deployed within a VPC.

- Limited Customization: Limited ability to tune cache parameters compared to general-purpose caches.

Operational Considerations

- Cold Start: New DAX clusters start with empty caches.

- Cache Warming: No built-in mechanism for pre-populating the cache.

- No Cross-Region Replication: Each region requires its own DAX cluster.

- Node Failures: While automatic failover works well, there might be brief unavailability during failover.

- Scaling: Adding or removing nodes causes some cache misses during rebalancing.
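Because there is no built-in warming mechanism, one option is a best-effort warm-up pass that reads known hot keys through DAX before a new cluster takes production traffic. This is a sketch under assumptions: `warmCache` is hypothetical, `getItem` is any async function that reads one item through DAX (for example, a wrapper around `daxClient.get(...).promise()`), and the hot-key list would come from your own access logs or analytics.

```javascript
// Hypothetical best-effort warm-up: read each hot key once through DAX using a
// small pool of concurrent workers. Failures are counted but do not stop the run.
async function warmCache(getItem, hotKeys, concurrency = 10) {
  let next = 0;
  let failures = 0;

  // Each worker pulls the next unread key until the list is exhausted
  async function worker() {
    while (next < hotKeys.length) {
      const key = hotKeys[next];
      next += 1;
      try {
        await getItem(key);
      } catch (err) {
        failures += 1; // warming is best-effort; keep going
      }
    }
  }

  const workerCount = Math.min(concurrency, hotKeys.length);
  await Promise.all(Array.from({ length: workerCount }, () => worker()));
  return { requested: hotKeys.length, failures };
}
```

Warm-up reads that miss the cache are forwarded to DynamoDB and consume read capacity, so keep the concurrency modest on tables with tight provisioned throughput.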

Best Practices

Architectural Best Practices

Multi-AZ Deployment:

- Deploy DAX with at least three nodes across different Availability Zones for high availability.

Separate Cache for Environments:

- Use separate DAX clusters for development, testing, and production environments.

Client-Side Fallback:

- Implement fallback logic to access DynamoDB directly if DAX is unavailable.

Connectivity:

- Place application servers in the same VPC as the DAX cluster to minimize latency.

- Use private subnets and security groups to protect DAX access.

Performance Best Practices

TTL Optimization:

- Set TTL values based on data change frequency and consistency requirements.

- Use shorter TTLs for frequently changing data.

- Use longer TTLs for relatively static data.

Query Design:

- Design consistent queries to improve cache hit rates.

- Be mindful that slight variations in query parameters result in different cache entries.

Node Sizing:

- Choose node types based on working set size (amount of frequently accessed data).

- Ensure sufficient memory to avoid excessive cache eviction.

Scaling Strategy:

- Monitor cluster metrics and scale proactively before performance degradation.

- Add nodes to increase throughput and resilience.

- Upgrade node types to increase memory capacity.

Implementation Best Practices

Error Handling:

- Implement robust error handling for DAX connectivity issues.

- Use circuit breakers to prevent cascading failures.

Connection Management:

- Reuse DAX client instances across your application.

- Implement connection pooling for optimal performance.

Monitoring and Alerting:

- Set up comprehensive CloudWatch alarms for DAX metrics.

- Monitor cache hit rate to ensure effectiveness.

- Alert on error rate increases or memory pressure.

Testing Strategy:

- Load test with expected and peak traffic patterns.

- Test failover scenarios to ensure resilience.

- Verify application behavior during DAX scaling events.
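The fallback and circuit-breaker advice can be combined into one small wrapper. `CircuitBreaker` below is a hypothetical minimal sketch, not a library API: `primary` would read through DAX and `fallback` would read DynamoDB directly. After `threshold` consecutive failures it stops calling DAX for `cooldownMs`, then lets a single trial call through.

```javascript
// Minimal circuit breaker with a fallback (illustration only).
class CircuitBreaker {
  constructor(primary, fallback, { threshold = 5, cooldownMs = 30000, now = () => Date.now() } = {}) {
    this.primary = primary;   // e.g. a GetItem through the DAX client
    this.fallback = fallback; // e.g. the same GetItem through the plain DynamoDB client
    this.threshold = threshold;
    this.cooldownMs = cooldownMs;
    this.now = now;
    this.failures = 0;
    this.openedAt = null;
  }

  // Open means "skip the primary and go straight to the fallback"
  isOpen() {
    return this.openedAt !== null && this.now() - this.openedAt < this.cooldownMs;
  }

  async call(...args) {
    if (this.isOpen()) return this.fallback(...args);
    try {
      const result = await this.primary(...args);
      this.failures = 0; // a success closes the breaker
      this.openedAt = null;
      return result;
    } catch (err) {
      this.failures += 1;
      // Open (or re-open after a failed trial call) once the threshold is reached
      if (this.failures >= this.threshold) this.openedAt = this.now();
      return this.fallback(...args);
    }
  }
}
```

In practice you may want to count only connectivity and timeout errors as failures, since a validation error would fail against DynamoDB as well, and to record when the breaker opens so the switch shows up in monitoring.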

Conclusion

Amazon DynamoDB Accelerator (DAX) offers a powerful solution for applications requiring high-performance access to DynamoDB data. By providing microsecond latency for read operations, DAX can dramatically improve application responsiveness while potentially reducing costs through decreased DynamoDB read capacity requirements.

Key takeaways from this guide:

- Performance Improvement: DAX can reduce read latency from milliseconds to microseconds, providing up to 10x or greater performance improvement.

- Minimal Code Changes: Thanks to its API compatibility with DynamoDB, implementing DAX requires minimal changes to existing applications.

- Fully Managed: As a fully managed service, DAX eliminates the operational overhead of managing a caching layer.

- Cost Efficiency: For read-heavy workloads at sufficient scale, DAX can reduce DynamoDB costs by serving a significant portion of reads from cache.

- Use Case Fit: DAX is particularly well-suited for read-intensive, latency-sensitive applications such as e-commerce, gaming, session stores, and real-time dashboards.

- Considerations: While powerful, DAX has limitations regarding consistency model, API coverage, and operational aspects that should be understood before implementation.

Whether you’re building a new application or optimizing an existing one, DAX provides a compelling option for enhancing DynamoDB performance. By following the best practices outlined in this guide, you can implement DAX effectively and realize substantial benefits in terms of both performance and cost efficiency.