DynamoDB FAQ: Frequently Asked Questions Answered in 2025

Introduction

Amazon DynamoDB is a powerful, fully managed NoSQL database service that provides fast, predictable performance with seamless scalability. However, as with any sophisticated technology, users often have questions about its capabilities, limitations, and best practices.

This comprehensive FAQ addresses the most common questions about DynamoDB, covering everything from basic concepts to advanced usage patterns. Whether you’re new to DynamoDB or an experienced user looking for specific information, this guide provides clear, concise answers to help you make the most of this versatile database service.

General DynamoDB Questions

What type of database is DynamoDB?

DynamoDB is a NoSQL, non-relational database service. Specifically, it’s a key-value and document database that provides single-digit millisecond performance at any scale. Unlike traditional relational databases with fixed schemas, DynamoDB offers a flexible schema model where each item (row) can have different attributes.

Is DynamoDB serverless?

Yes, DynamoDB is fully serverless. As a fully managed service, AWS handles all the underlying infrastructure, server provisioning, patching, and maintenance. You never manage servers. In on-demand mode AWS also scales throughput for you; in provisioned mode you still choose read and write capacity, optionally with auto-scaling. You simply create tables and perform read/write operations while paying for the throughput and storage you use.

What is the difference between DynamoDB and other NoSQL databases?

The main differences between DynamoDB and other NoSQL databases include:

- Fully managed: Unlike many NoSQL databases (e.g., MongoDB, Cassandra) that require self-hosting or have limited managed options, DynamoDB is completely managed by AWS.

- Serverless: There are no servers to provision or manage.

- Automatic scaling: DynamoDB can scale up or down automatically with no downtime.

- Performance: Provides consistent, single-digit millisecond response times at any scale.

- Pay-as-you-go pricing: In on-demand mode you pay per request rather than pre-provisioning capacity.

- Integration: Native integration with other AWS services.

- Limited query flexibility: Compared to some document databases like MongoDB, DynamoDB has more constrained query capabilities focused on key-based access patterns.

For more detailed comparisons, see our articles on DynamoDB vs MongoDB, DynamoDB vs Cassandra, and other database comparisons.

Is DynamoDB open source?

No, DynamoDB is not open source. It’s a proprietary service provided exclusively by Amazon Web Services. However, for local development and testing, AWS provides DynamoDB Local, which is a downloadable version that simulates the DynamoDB service on your computer. For an open-source alternative with similar functionality, you might consider options like Cassandra or ScyllaDB, though they lack the fully managed nature of DynamoDB.

What are the main use cases for DynamoDB?

DynamoDB excels in these common use cases:

- Mobile and web applications: User profiles, session management, and preferences

- Gaming applications: Game state, player data, and leaderboards

- IoT applications: Device data, sensor readings, and telemetry

- High-scale web services: Product catalogs, customer data, and order management

- Microservices architecture: State management for stateless services

- Real-time big data: High-velocity data ingestion with predictable latency

- Serverless applications: Paired with AWS Lambda for true serverless architectures

DynamoDB is particularly well-suited for applications that need high throughput, low-latency responses, and the ability to scale seamlessly.

Technical Capabilities and Limitations

What is the maximum item size in DynamoDB?

The maximum size for a single item in DynamoDB is 400KB, including both attribute names and values. This limit applies to the total size of all attributes in an item. For storing larger objects, a common pattern is to store the large data in Amazon S3 and keep a reference to it in DynamoDB.
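The S3 pointer pattern described above can be sketched as follows. This is a minimal illustration, not a definitive implementation: the size estimate handles only string and binary values, and the function names, the `_s3_uri` suffix, and the bucket and key arguments are all hypothetical.

```python
MAX_ITEM_BYTES = 400 * 1024  # the 400KB item limit described above


def approx_item_size(item: dict) -> int:
    """Rough estimate: UTF-8 bytes of each attribute name plus its value.

    Only str and bytes values are handled in this sketch.
    """
    total = 0
    for name, value in item.items():
        total += len(name.encode("utf-8"))
        if isinstance(value, str):
            total += len(value.encode("utf-8"))
        elif isinstance(value, bytes):
            total += len(value)
        else:
            raise TypeError(f"unsupported value type for {name!r}")
    return total


def split_for_storage(item: dict, large_attr: str, bucket: str, key: str):
    """Return (dynamodb_item, s3_payload).

    If the item fits, it is returned unchanged and the payload is None.
    Otherwise the large attribute is replaced by a pointer to S3 and its
    original value is returned as the payload to upload separately.
    """
    if approx_item_size(item) <= MAX_ITEM_BYTES:
        return item, None
    pointer_item = {k: v for k, v in item.items() if k != large_attr}
    pointer_item[large_attr + "_s3_uri"] = f"s3://{bucket}/{key}"
    return pointer_item, item[large_attr]
```

The caller would upload the returned payload to S3 and write the smaller pointer item to DynamoDB.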

Does DynamoDB support transactions?

Yes, DynamoDB supports ACID (Atomicity, Consistency, Isolation, Durability) transactions across one or more tables. Introduced in 2018, DynamoDB transactions allow you to group multiple actions together and submit them as an all-or-nothing operation. This is useful for applications that need to ensure data integrity across multiple items or tables.

There are some limitations to DynamoDB transactions:

- Transactions are limited to 100 items or 4MB of data, whichever limit is reached first

- Transactions can span multiple tables but must be within the same AWS account and region

- Each transactional operation counts as two underlying read/write operations for capacity calculations
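As an illustration of an all-or-nothing operation, the sketch below builds the `TransactItems` list for a transfer between two accounts. The table layout (`pk` key, `balance` attribute) and the function names are assumptions made for this example.

```python
MAX_TRANSACTION_ITEMS = 100  # per-transaction item limit listed above


def build_transfer(table: str, from_id: str, to_id: str, amount: int) -> list:
    """Build two Update actions: debit one account, credit another.

    The condition on the debit makes the whole transaction fail if the
    source balance is too low, so neither update is applied.
    """
    if amount <= 0:
        raise ValueError("amount must be positive")
    names = {"#bal": "balance"}  # hypothetical attribute name
    values = {":amt": {"N": str(amount)}}
    debit = {
        "Update": {
            "TableName": table,
            "Key": {"pk": {"S": f"ACCOUNT#{from_id}"}},
            "UpdateExpression": "SET #bal = #bal - :amt",
            "ConditionExpression": "#bal >= :amt",
            "ExpressionAttributeNames": names,
            "ExpressionAttributeValues": values,
        }
    }
    credit = {
        "Update": {
            "TableName": table,
            "Key": {"pk": {"S": f"ACCOUNT#{to_id}"}},
            "UpdateExpression": "SET #bal = #bal + :amt",
            "ExpressionAttributeNames": names,
            "ExpressionAttributeValues": values,
        }
    }
    return [debit, credit]


def check_transaction(items: list) -> list:
    """Reject transactions that exceed the item limit before sending them."""
    if len(items) > MAX_TRANSACTION_ITEMS:
        raise ValueError(f"{len(items)} items exceeds {MAX_TRANSACTION_ITEMS}")
    return items
```

With boto3, the resulting list would typically be passed as `client.transact_write_items(TransactItems=items)`.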

What is DynamoDB’s maximum throughput?

DynamoDB doesn’t have a single fixed throughput ceiling. Instead, throughput is governed by default quotas, chiefly at the table level:

- For on-demand capacity mode, DynamoDB automatically scales to your traffic without any capacity settings. Traffic that climbs to more than double the table’s previous peak within 30 minutes may be throttled.

- For provisioned capacity mode, the default per-table limits are:

  - 40,000 read capacity units (RCUs)

  - 40,000 write capacity units (WCUs)

These default limits can be increased by requesting a quota increase from AWS. With proper key design and table structure, DynamoDB can handle millions of requests per second across an AWS account.

Is DynamoDB eventually or strongly consistent?

DynamoDB offers both consistency models:

- Eventually Consistent Reads (default): Provide higher throughput but might not reflect the results of a recently completed write. Typically, consistency is reached within a second.

- Strongly Consistent Reads: Return the most up-to-date data, reflecting all prior write operations. These consume twice the capacity of eventually consistent reads.

You can choose the consistency model on a per-request basis for most operations. However, global secondary indexes support only eventually consistent reads, and replication between regions in global tables is eventually consistent by default.
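The capacity cost of each read and write type can be worked out with a little arithmetic. The sketch below assumes the standard metering units (4KB per read unit, 1KB per write unit); the function names are illustrative.

```python
import math

READ_UNIT_BYTES = 4 * 1024   # reads are metered in 4KB increments
WRITE_UNIT_BYTES = 1024      # writes are metered in 1KB increments

READ_FACTORS = {
    "eventual": 0.5,       # half the cost of a strongly consistent read
    "strong": 1.0,
    "transactional": 2.0,  # transactional operations count double
}


def read_capacity_units(item_bytes: int, consistency: str = "eventual") -> float:
    """Capacity units consumed by reading one item of the given size."""
    blocks = max(1, math.ceil(item_bytes / READ_UNIT_BYTES))
    return blocks * READ_FACTORS[consistency]


def write_capacity_units(item_bytes: int, transactional: bool = False) -> int:
    """Capacity units consumed by writing one item of the given size."""
    blocks = max(1, math.ceil(item_bytes / WRITE_UNIT_BYTES))
    return blocks * (2 if transactional else 1)
```

For example, a 6,000-byte item spans two 4KB blocks, so it costs 1 RCU when read with eventual consistency and 2 RCUs when read with strong consistency.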

Can DynamoDB be used like a relational database?

While DynamoDB can handle many use cases that traditionally required relational databases, it’s designed with different principles:

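- DynamoDB doesn’t support JOINs or foreign key constraints; PartiQL provides SQL-compatible statements, but each statement still targets a single table

- Transactions work across tables but are capped at 100 items and are submitted as a single request, so they cannot be held open across multiple round trips as in a relational database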

- Data is typically denormalized rather than normalized

However, with careful data modeling (especially single-table design), DynamoDB can support complex relationships and hierarchical data. For applications that truly need relational capabilities, you might consider using Amazon RDS or Aurora alongside DynamoDB, each handling the parts of your data model they’re best suited for.

For more information, see our comparison of DynamoDB vs Relational Databases.

Performance and Scaling

How does DynamoDB scale?

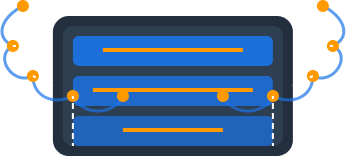

DynamoDB scales automatically in several ways:

- Horizontal partitioning: As your data grows, DynamoDB automatically distributes it across more partitions (storage allocations).

- Throughput scaling:

  - With on-demand capacity, DynamoDB automatically accommodates your workloads as they grow or shrink

  - With provisioned capacity, you can use auto-scaling to adjust capacity based on utilization

- Global scaling: With global tables, DynamoDB can replicate data across multiple AWS regions for global distribution and disaster recovery

The key to effective scaling is designing your table with a high-cardinality partition key to ensure even distribution of data and requests across partitions.

What happens if I exceed my provisioned capacity?

If your application exceeds the provisioned throughput for a table or index, DynamoDB will throttle those requests. When throttling occurs:

- The operation will fail with a ProvisionedThroughputExceededException

- The throttled request is rejected rather than queued; the AWS SDKs retry it automatically a limited number of times before surfacing the error

- These events are logged in CloudWatch metrics

To handle throttling gracefully:

- Implement retry logic with exponential backoff in your application

- Use auto-scaling to adjust capacity automatically

- Consider switching to on-demand capacity for unpredictable workloads

- Improve your data model to distribute traffic more evenly
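Retry logic with exponential backoff might look like the sketch below. `ThrottledError` is a local stand-in for `ProvisionedThroughputExceededException`, and `make_flaky` is a demo helper that simulates throttling; neither is part of any AWS library. The delay uses "full jitter", which is one common variant.

```python
import random
import time


class ThrottledError(Exception):
    """Local stand-in for ProvisionedThroughputExceededException."""


def call_with_backoff(operation, max_attempts=5, base_delay=0.05,
                      max_delay=2.0, sleep=time.sleep):
    """Call operation(), retrying throttled calls with exponential backoff.

    The wait before retry n is a random value between 0 and
    min(max_delay, base_delay * 2**n), which spreads out retries from
    many clients. The last failure is re-raised to the caller.
    """
    for attempt in range(max_attempts):
        try:
            return operation()
        except ThrottledError:
            if attempt == max_attempts - 1:
                raise
            ceiling = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, ceiling))


def make_flaky(failures: int):
    """Demo helper: an operation throttled `failures` times, then succeeding."""
    state = {"left": failures}

    def operation():
        if state["left"] > 0:
            state["left"] -= 1
            raise ThrottledError("simulated throttle")
        return "ok"

    return operation
```

Calling `call_with_backoff(make_flaky(2))` sleeps twice and then returns the result. The AWS SDKs already apply a similar policy internally, so application-level retries mainly matter once the SDK's own attempts are exhausted.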

How can I optimize DynamoDB performance?

To get the best performance from DynamoDB:

- Design efficient keys: Use high-cardinality partition keys to distribute data evenly

- Choose the right operations: Use Query instead of Scan whenever possible

- Implement caching: Use DynamoDB Accelerator (DAX) for read-heavy workloads

- Use smaller items: Keep item sizes small (ideally under 10KB), since reads are metered in 4KB increments and writes in 1KB increments

- Batch operations: Use BatchGetItem and BatchWriteItem for bulk operations

- Use appropriate indexes: Create global or local secondary indexes for common access patterns

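- Don’t rely on filter expressions to save capacity: A FilterExpression is applied after items are read, so the request still consumes capacity for every item examined. Prefer key conditions and indexes that return only the items you need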

- Consider compression: For large text attributes, compress data before storing it

For more optimization tips, see our DynamoDB Best Practices guide.
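To illustrate the batching tip, the sketch below splits a list of items into BatchWriteItem requests of at most 25 put requests each, which is the per-call limit for that operation. The function names are illustrative, and the items are assumed to already be in DynamoDB's typed attribute format.

```python
BATCH_WRITE_LIMIT = 25  # maximum put/delete requests per BatchWriteItem call


def chunk(items: list, size: int):
    """Yield consecutive slices of at most `size` items."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def build_batch_write_requests(table: str, items: list) -> list:
    """Return one BatchWriteItem parameter dict per group of 25 items."""
    return [
        {"RequestItems": {table: [{"PutRequest": {"Item": item}} for item in group]}}
        for group in chunk(items, BATCH_WRITE_LIMIT)
    ]
```

Each dict would be passed to the client as keyword arguments. A batch write can succeed only partially, so any `UnprocessedItems` in the response need to be resubmitted.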

Data Modeling and Access Patterns

How should I choose a partition key in DynamoDB?

The ideal partition key should:

- Have high cardinality: Many distinct values to distribute data evenly

- Be distributed: Values should be accessed uniformly to avoid hot partitions

- Match access patterns: Support your most frequent and important queries

Good partition keys often include:

- User IDs (when data is primarily accessed by user)

- Order IDs (for order management systems)

- Device IDs (for IoT applications)

- Time-based IDs with some random element (for time-series data)

Poor choices include:

- Status fields (e.g., “pending”, “completed”) which have few distinct values

- Boolean attributes

- Timestamps alone (can create hot partitions)

For more guidance, see our articles on DynamoDB Partition Keys and Choosing Between Partition Key and Sort Key.
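One way to add a spreading element to a time-based key is to append a shard suffix derived from a hash of the item ID. The sketch below assumes a date-based key and ten shards; the names and the shard count are illustrative choices, not a prescribed scheme.

```python
import hashlib


def sharded_partition_key(date: str, item_id: str, shard_count: int = 10) -> str:
    """Spread one day's writes over several partition key values.

    The shard is derived from a hash of the item ID, so the same item
    always maps to the same key.
    """
    digest = hashlib.sha256(item_id.encode("utf-8")).digest()
    shard = int.from_bytes(digest[:4], "big") % shard_count
    return f"{date}#{shard}"


def all_shard_keys(date: str, shard_count: int = 10) -> list:
    """Every key a reader must query to collect the full day of data."""
    return [f"{date}#{n}" for n in range(shard_count)]
```

The trade-off is on the read side: collecting a full day of data means issuing one query per shard and merging the results.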

What is a sort key used for in DynamoDB?

A sort key (also called a range key) is the second part of a composite primary key. It serves several important purposes:

- Organizing related items: Items with the same partition key are physically stored together, sorted by the sort key

- Enabling range queries: You can query for ranges of sort key values (e.g., all orders for a customer between two dates)

- Creating composite keys: Combining partition and sort keys allows for unique identification of items when the partition key alone isn’t unique

Common sort key patterns include:

- Timestamps (for time-based ordering)

- Hierarchical data (e.g., “department#employee”)

- Version numbers

- Status values

The sort key enables efficient operations like begins_with(), between, >, <, and other comparisons within a partition.
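The range and prefix conditions above translate into Query parameters like the following. This sketch assumes a table whose keys are named `pk` and `sk` and whose order items use an `ORDER#<date>` sort key; those names are hypothetical.

```python
def orders_between(table: str, customer_id: str, start: str, end: str) -> dict:
    """Query parameters for one customer's orders between two dates."""
    return {
        "TableName": table,
        "KeyConditionExpression": "#pk = :pk AND #sk BETWEEN :start AND :end",
        "ExpressionAttributeNames": {"#pk": "pk", "#sk": "sk"},
        "ExpressionAttributeValues": {
            ":pk": {"S": f"CUSTOMER#{customer_id}"},
            ":start": {"S": f"ORDER#{start}"},
            ":end": {"S": f"ORDER#{end}"},
        },
    }


def items_with_prefix(table: str, partition_value: str, prefix: str) -> dict:
    """Query parameters for all items in a partition whose sort key starts with prefix."""
    return {
        "TableName": table,
        "KeyConditionExpression": "#pk = :pk AND begins_with(#sk, :prefix)",
        "ExpressionAttributeNames": {"#pk": "pk", "#sk": "sk"},
        "ExpressionAttributeValues": {
            ":pk": {"S": partition_value},
            ":prefix": {"S": prefix},
        },
    }
```

With boto3, either dict could be passed as `client.query(**params)`. ISO-formatted dates work well here because they sort chronologically as strings.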

What are secondary indexes in DynamoDB?

Secondary indexes allow you to query your data using keys other than the primary key. DynamoDB supports two types:

- Global Secondary Index (GSI):

  - Can have a different partition key than the base table

  - Can be created or deleted at any time

  - Has its own throughput settings

  - Supports only eventual consistency

- Local Secondary Index (LSI):

  - Must have the same partition key as the base table

  - Can only be created when the table is created

  - Shares throughput with the base table

  - Supports both eventual and strong consistency

Indexes are crucial for supporting multiple access patterns efficiently. Each table can have up to 20 GSIs and 5 LSIs.

For more details, see our article on DynamoDB Indexes.
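The difference between the two index types shows up in the table definition. The sketch below builds CreateTable parameters for a hypothetical orders table with one LSI and one GSI; the attribute and index names are assumptions made for illustration.

```python
def orders_table_definition(table_name: str) -> dict:
    """CreateTable parameters with one local and one global secondary index."""
    return {
        "TableName": table_name,
        "BillingMode": "PAY_PER_REQUEST",  # on-demand, so no throughput settings
        # Every attribute used in any key schema must be declared here.
        "AttributeDefinitions": [
            {"AttributeName": "pk", "AttributeType": "S"},
            {"AttributeName": "sk", "AttributeType": "S"},
            {"AttributeName": "status", "AttributeType": "S"},
            {"AttributeName": "created_at", "AttributeType": "S"},
        ],
        "KeySchema": [
            {"AttributeName": "pk", "KeyType": "HASH"},
            {"AttributeName": "sk", "KeyType": "RANGE"},
        ],
        # LSI: same partition key as the table, different sort key.
        "LocalSecondaryIndexes": [{
            "IndexName": "by_created_at",
            "KeySchema": [
                {"AttributeName": "pk", "KeyType": "HASH"},
                {"AttributeName": "created_at", "KeyType": "RANGE"},
            ],
            "Projection": {"ProjectionType": "ALL"},
        }],
        # GSI: a different partition key altogether.
        "GlobalSecondaryIndexes": [{
            "IndexName": "by_status",
            "KeySchema": [
                {"AttributeName": "status", "KeyType": "HASH"},
                {"AttributeName": "created_at", "KeyType": "RANGE"},
            ],
            "Projection": {"ProjectionType": "KEYS_ONLY"},
        }],
    }
```

With boto3, this dict could be passed as `client.create_table(**params)`. The LSI has to be declared here at creation time, whereas the GSI could also be added later.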

What is single-table design in DynamoDB?

Single-table design is a DynamoDB data modeling pattern where you store multiple entity types in a single table, rather than creating separate tables for each entity. This approach:

- Leverages DynamoDB’s schemaless nature (different items can have different attributes)

- Uses prefix patterns in key attributes to distinguish between entity types

- Allows for retrieving related entities in a single query

- Reduces the need for joins or multiple queries

While it can be complex to implement initially, single-table design often results in more efficient applications with DynamoDB, especially for complex data relationships. This pattern is particularly valuable when you need to retrieve multiple related entities in a single operation.
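The prefix pattern is easiest to see with a small in-memory model. The sketch below mimics how a single Query on a partition key returns several entity types at once; the `CUSTOMER#`, `PROFILE`, and `ORDER#` prefixes are illustrative, and the `query` function only imitates DynamoDB's behavior locally.

```python
# One table holding two entity types, distinguished by key prefixes.
TABLE = [
    {"pk": "CUSTOMER#42", "sk": "PROFILE", "name": "Ada"},
    {"pk": "CUSTOMER#42", "sk": "ORDER#2025-02-10", "total": 45},
    {"pk": "CUSTOMER#42", "sk": "ORDER#2025-01-03", "total": 30},
    {"pk": "CUSTOMER#77", "sk": "PROFILE", "name": "Grace"},
]


def query(items: list, pk: str, sk_prefix: str = "") -> list:
    """Local imitation of Query: match the partition key, optionally
    filter by sort key prefix, and return items in sort key order."""
    matches = [i for i in items if i["pk"] == pk and i["sk"].startswith(sk_prefix)]
    return sorted(matches, key=lambda i: i["sk"])
```

Here `query(TABLE, "CUSTOMER#42")` returns the profile and both orders in one call, while `query(TABLE, "CUSTOMER#42", "ORDER#")` narrows the result to the orders alone.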

Integration and Features

Can DynamoDB trigger Lambda functions?

Yes, DynamoDB can trigger AWS Lambda functions automatically through DynamoDB Streams. When you enable streams on a table, DynamoDB captures a time-ordered sequence of item-level changes (inserts, updates, deletes). You can then configure Lambda to execute in response to these changes.

This enables powerful patterns like:

- Updating derived data or aggregate calculations

- Sending notifications when data changes

- Syncing data to other storage systems

- Implementing custom business logic on data changes

- Building event-driven architectures
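A Lambda function attached to a stream receives batches of change records. The minimal handler below groups the changed keys by event type; it assumes a string partition key named `pk`, which is a hypothetical table layout.

```python
def handler(event, context):
    """Group the partition keys of changed items by change type.

    Each stream record carries an eventName of INSERT, MODIFY, or REMOVE
    and a "dynamodb" section with the item's Keys and, depending on the
    stream view type, NewImage and/or OldImage.
    """
    summary = {"INSERT": [], "MODIFY": [], "REMOVE": []}
    for record in event.get("Records", []):
        change = record["dynamodb"]
        key = change["Keys"]["pk"]["S"]  # assumes a string key named "pk"
        summary[record["eventName"]].append(key)
    return summary
```

A real handler would replace the summary with the actual work, such as updating an aggregate or sending a notification for each record.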

For details on implementation, see our articles on DynamoDB Streams and DynamoDB Triggers.

How do I track changes in DynamoDB?

DynamoDB provides two main mechanisms for tracking changes to your data:

- DynamoDB Streams:

  - Captures item-level modifications (inserts, updates, deletes)

  - Stores changes in a time-ordered sequence for up to 24 hours

  - Can trigger Lambda functions to process changes

  - Supports different view types (keys only, new image, old image, or both)

- Point-in-Time Recovery (PITR):

  - Enables continuous backups of your table

  - Allows restoration to any point within the last 35 days

  - Useful for recovering from accidental writes or deletes

  - Does not provide a change log but allows recovery to previous states

For real-time change processing, DynamoDB Streams is the preferred solution, while PITR provides disaster recovery capabilities.

What is DynamoDB Accelerator (DAX)?

DynamoDB Accelerator (DAX) is an in-memory cache specifically designed for DynamoDB. Key features include:

- Microsecond latency: Reduces response times from milliseconds to microseconds

- API compatibility: Uses the same DynamoDB API, requiring minimal code changes

- Write-through caching: Automatically updates the cache when data is written

- Cluster architecture: Provides high availability and scalability

- Managed service: No servers to manage or maintain

DAX is ideal for read-heavy workloads, especially those with repeated reads of the same items. It can significantly reduce DynamoDB costs by offloading read operations from the base table.

However, DAX caches only eventually consistent reads; strongly consistent reads are passed through to DynamoDB, and their results are not cached.

For details on when and how to use DAX, see our DynamoDB Accelerator (DAX) Guide.

Cost and Billing

Is DynamoDB free to use?

Amazon offers a Free Tier for DynamoDB that includes:

- 25 read capacity units (RCUs)

- 25 write capacity units (WCUs)

- 25 GB of storage

This free allocation is sufficient for many small applications or development environments. It’s available to both new and existing AWS customers and doesn’t expire after 12 months like some other AWS Free Tier offerings.

Beyond the Free Tier, DynamoDB charges based on:

- Provisioned throughput (RCUs and WCUs) or on-demand request units

- Storage used (per GB-month)

- Data transfer (for cross-region operations)

- Additional features (backup, global tables, etc.)

For detailed pricing information, see our DynamoDB Free Tier and Pricing Guide.

How can I reduce DynamoDB costs?

To optimize DynamoDB costs:

- Right-size capacity: Monitor usage and adjust provisioned capacity to match actual needs

- Use auto-scaling: Automatically adjust capacity based on actual usage patterns

- Consider on-demand for variable workloads: May be more cost-effective for unpredictable patterns

- Implement TTL: Automatically expire and remove old data you no longer need

- Compress large attributes: Reduce storage costs by compressing text or binary data

- Be selective with indexes: Each index increases storage and write costs

- Monitor with AWS Cost Explorer: Regularly review your usage and costs

- Cache frequent reads: Use DAX or application-level caching to reduce read operations

- Delete unused tables and backups: Don’t pay for resources you’re not using

- Use the Reserved Capacity pricing option: For predictable workloads with long-term commitments

Small optimizations can lead to significant savings, especially as your data and traffic grow.
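As an example of the TTL tip, the sketch below stamps an item with an expiry time. TTL expects a Number attribute holding a Unix epoch timestamp in seconds; the attribute name `expires_at` is an assumption and must match whatever attribute TTL is configured to use on the table.

```python
import time

SECONDS_PER_DAY = 86_400


def with_ttl(item: dict, days: int, now=None, ttl_attribute: str = "expires_at") -> dict:
    """Return a copy of the item with an expiry timestamp `days` from now.

    The item is expected in DynamoDB's typed format, so the timestamp is
    stored as a Number ("N") whose value is a string of epoch seconds.
    """
    now = int(time.time()) if now is None else now
    expiry = now + days * SECONDS_PER_DAY
    return {**item, ttl_attribute: {"N": str(expiry)}}
```

Expired items are removed in the background rather than at the exact expiry time, so reads may still return them for a while unless the application filters on the timestamp.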

What happens if I exceed the DynamoDB Free Tier?

If you exceed the DynamoDB Free Tier limits:

- You’ll be charged for the additional usage according to standard DynamoDB pricing

- The excess usage is billed at the normal rates for your region

- There’s no automatic cap or shutdown; your applications will continue to function

- You can set up billing alerts via AWS Budgets to notify you when you approach or exceed the Free Tier

To avoid unexpected charges, consider:

- Setting up CloudWatch alarms to monitor usage

- Implementing throttling in your application

- Using provisioned capacity with auto-scaling to control maximum spend

Operational Questions

How do I back up DynamoDB data?

DynamoDB offers several backup options:

- On-demand backups:

  - Manual, full backups of a table

  - Stored until explicitly deleted

  - No impact on table performance

  - Can be used for long-term archiving

- Point-in-Time Recovery (PITR):

  - Continuous backups capturing every change

  - Allows restoration to any point within the last 35 days

  - Slightly increases the cost of your table

  - Ideal for protection against accidental writes or deletes

- AWS Backup:

  - Centralized backup service that integrates with DynamoDB

  - Supports cross-region and cross-account backup copies

  - Enables consolidated backup policies and scheduling

- Custom solutions:

  - Use DynamoDB Streams with Lambda to replicate data to S3

  - Export data using AWS Data Pipeline or AWS Glue

  - Use DynamoDB’s export to S3 feature, which requires PITR and writes the data as DynamoDB JSON or Amazon Ion

For most users, enabling PITR provides the best protection against data loss with minimal operational overhead.
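Enabling PITR is a single API call. The sketch below wraps boto3's `update_continuous_backups` call in a small function; `RecordingClient` is a hypothetical stand-in that lets the example run without AWS credentials, and its empty return value is a simplification of the real response.

```python
def enable_pitr(client, table_name: str):
    """Turn on point-in-time recovery for one table.

    `client` is expected to behave like a boto3 DynamoDB client.
    """
    return client.update_continuous_backups(
        TableName=table_name,
        PointInTimeRecoverySpecification={"PointInTimeRecoveryEnabled": True},
    )


class RecordingClient:
    """Stand-in client that records calls instead of contacting AWS."""

    def __init__(self):
        self.calls = []

    def update_continuous_backups(self, **kwargs):
        self.calls.append(kwargs)
        return {}  # simplified; the real response describes the backup status
```

In real use, the client would come from `boto3.client("dynamodb")`, and the same function could be run over every table in an account.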

Can DynamoDB be used for analytics?

DynamoDB isn’t optimized for analytical workloads, but there are several approaches for analytics:

- DynamoDB with limited analytics:

  - Basic aggregations can be performed with Scan operations

  - Suitable only for small datasets due to performance and cost implications

  - Limited query flexibility compared to analytical databases

- Export to purpose-built analytics services:

  - Export data to Amazon Redshift for complex analytical queries

  - Use Amazon Athena to query DynamoDB exports in S3

  - Stream changes to Amazon OpenSearch Service (formerly Amazon Elasticsearch Service) for text search and analytics

- Real-time analytics options:

  - Use DynamoDB Streams with Lambda to process changes in real-time

  - Send data to Amazon Kinesis for real-time analytics

For serious analytical workloads, it’s recommended to periodically export DynamoDB data to a data warehouse like Amazon Redshift or use a pipeline to sync data to an analytics-optimized storage system.

See our comparison of DynamoDB vs Redshift for more information on the appropriate use cases for each service.

How do I monitor DynamoDB performance?

To effectively monitor DynamoDB:

- CloudWatch Metrics: Key metrics to track include:

  - ConsumedReadCapacityUnits and ConsumedWriteCapacityUnits

  - ProvisionedReadCapacityUnits and ProvisionedWriteCapacityUnits

  - ReadThrottleEvents and WriteThrottleEvents

  - SuccessfulRequestLatency

  - SystemErrors and UserErrors

- CloudWatch Alarms: Set up alerts for:

  - Capacity approaching provisioned limits

  - Throttling events occurring

  - High latency

  - Error rates increasing

- AWS X-Ray: For tracing requests through your application stack

- CloudTrail: For logging API calls and administrative actions

- Contributor Insights: To identify the most accessed items or most throttled keys

Regular monitoring helps you optimize performance, prevent throttling, and maintain cost efficiency.
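A frequent source of confusion is comparing consumed and provisioned capacity directly. The consumed metrics are reported as a sum over the period, while provisioned capacity is a per-second rate, so the sum has to be divided by the period length first. The sketch below shows that arithmetic; the function names and the 80% threshold are illustrative.

```python
def utilization(consumed_sum: float, period_seconds: int, provisioned_units: float) -> float:
    """Fraction of provisioned capacity used during one metric period.

    consumed_sum is the Sum statistic of ConsumedReadCapacityUnits (or
    the write equivalent) for the period; dividing by the period length
    gives the average units consumed per second.
    """
    per_second = consumed_sum / period_seconds
    return per_second / provisioned_units


def should_alarm(consumed_sum: float, period_seconds: int,
                 provisioned_units: float, threshold: float = 0.8) -> bool:
    """True when average utilization reaches the alert threshold."""
    return utilization(consumed_sum, period_seconds, provisioned_units) >= threshold
```

For example, a one-minute sum of 4,800 consumed units against 100 provisioned units is an average of 80 units per second, or 80% utilization. Because this is an average, short bursts within the period can still be throttled at lower values.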

Conclusion

Amazon DynamoDB offers a powerful, flexible database solution that scales seamlessly with your application needs. Understanding its capabilities, limitations, and best practices allows you to leverage its full potential while avoiding common pitfalls.

If you have additional questions not covered in this FAQ, check out our other DynamoDB resources:

- What is DynamoDB? - A comprehensive introduction

- DynamoDB Tutorial for Beginners - Hands-on guide to get started

- DynamoDB Best Practices - Optimize your implementation

- DynamoDB Free Tier - Understanding the cost structure

For a more intuitive way to work with DynamoDB, try Dynomate - our purpose-built tool that simplifies DynamoDB development, visualization, and management.