DynamoDB Streams Explained: Complete Guide for 2025

Amazon DynamoDB Streams is a powerful feature that captures data modifications in your DynamoDB tables in near real time. It enables you to build reactive, event-driven applications that respond quickly to data changes. This comprehensive guide explains how DynamoDB Streams work, their benefits, common use cases, implementation patterns, and best practices.

Table of Contents

- What are DynamoDB Streams?

- How DynamoDB Streams Work

- Key Features and Capabilities

- Common Use Cases

- Setting Up DynamoDB Streams

- Consuming Stream Data

- DynamoDB Streams vs. Kinesis Data Streams

- Performance Considerations

- Best Practices

- Limitations and Challenges

- Pricing

- Conclusion

What are DynamoDB Streams?

DynamoDB Streams is a feature that captures a time-ordered sequence of item-level modifications in a DynamoDB table. Simply put, whenever an item is created, updated, or deleted, the stream records that change and, depending on its configuration, the item's “before” and/or “after” images. These records are kept for 24 hours and can be processed in near real time.

This capability transforms DynamoDB from a simple database into a powerful event source that can trigger workflows, synchronize data, and enable complex event-driven architectures.

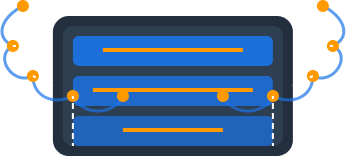

How DynamoDB Streams Work

DynamoDB Streams functions as a change data capture (CDC) system that works as follows:

- Stream Enablement: You enable a stream on a DynamoDB table, choosing what information to capture (keys only, new image, old image, or both new and old images).

- Change Capture: When items in the table are created, updated, or deleted, these changes are automatically captured in the stream.

- Record Sequencing: Each stream record receives a sequence number, and records are organized into shards (containers for stream records).

- Record Structure: Each record contains:

  - The event type (INSERT, MODIFY, or REMOVE)

  - The approximate time the record was created

  - The primary key of the affected item

  - The old and/or new item image (depending on your configuration), in DynamoDB's attribute-value format

- Data Retention: Stream records are stored for 24 hours, giving your applications time to process them.

- Consumption: Applications can read from the stream using the DynamoDB Streams API through the AWS SDK, Lambda functions, or the Kinesis Client Library (KCL) with the DynamoDB Streams Kinesis Adapter.
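Item images in stream records use DynamoDB's typed attribute-value format (for example `{ "total": { "N": "42.5" } }`) rather than plain JSON. In a real consumer you would typically use the unmarshalling helper that ships with the AWS SDK; the dependency-free sketch below (the names `unmarshall` and `unmarshallValue` are our own) shows what that conversion involves for the common attribute types.

```javascript
// Sketch: convert DynamoDB attribute-value JSON (as found in NewImage/OldImage)
// into plain JavaScript values. Binary types (B, BS) are not handled.
function unmarshallValue(av) {
  const [type, value] = Object.entries(av)[0];
  switch (type) {
    case 'S':
    case 'BOOL':
      return value;
    case 'N':
      return Number(value); // may lose precision beyond Number.MAX_SAFE_INTEGER
    case 'NULL':
      return null;
    case 'SS':
      return new Set(value);
    case 'NS':
      return new Set(value.map(Number));
    case 'L':
      return value.map(unmarshallValue);
    case 'M':
      return unmarshall(value);
    default:
      throw new Error(`Unsupported attribute type: ${type}`);
  }
}

// Convert a whole image (attribute name -> attribute value); a missing image yields {}
function unmarshall(image) {
  const item = {};
  for (const [name, av] of Object.entries(image || {})) {
    item[name] = unmarshallValue(av);
  }
  return item;
}

// Example: the NewImage of an INSERT record
const newImage = {
  id: { S: 'order-1' },
  total: { N: '42.5' },
  tags: { SS: ['new', 'priority'] },
  shipping: { M: { express: { BOOL: true } } },
};
console.log(unmarshall(newImage));
```

Converting `N` values with `Number` is convenient but can lose precision for very large numbers, which is one reason to prefer a maintained library helper in production.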

The following diagram illustrates this process:

```
┌───────────────┐    1. Changes    ┌─────────────────┐   2. Triggers    ┌───────────────┐
│   DynamoDB    │ ───────────────> │    DynamoDB     │ ───────────────> │   Consumers   │
│     Table     │                  │     Streams     │                  │ (Lambda, etc) │
└───────────────┘                  └─────────────────┘                  └───────────────┘
        │                                   │                                   │
        │                                   │                                   │
        │                                   │                                   │
        v                                   v                                   v
┌───────────────┐                  ┌─────────────────┐                  ┌───────────────┐
│    Create     │                  │ Stream Records  │                  │ Process Data  │
│    Update     │                  │ - Event Type    │                  │ - Replicate   │
│    Delete     │                  │ - Sequence #    │                  │ - Aggregate   │
│  Operations   │                  │ - Item Data     │                  │ - Notify      │
└───────────────┘                  └─────────────────┘                  └───────────────┘
```

Key Features and Capabilities

Stream Record Content Options

When enabling a stream, you can choose what information to include in each record:

- Keys Only: Only the key attributes of the modified item

- New Image: The entire item as it appears after modification

- Old Image: The entire item as it appeared before modification

- New and Old Images: Both the new and old versions of the item

The option you choose affects the size of your stream records and determines what kind of processing you can perform.
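In the API, these options are the `StreamViewType` values `KEYS_ONLY`, `NEW_IMAGE`, `OLD_IMAGE`, and `NEW_AND_OLD_IMAGES`. As a quick reference, the small helper below (our own, not an AWS API) encodes which image fields a consumer can expect in a record; reading an image that the view type never provides is a common source of `undefined` errors.

```javascript
// Sketch: which parts of a stream record are populated for each StreamViewType.
// INSERT records have no "before" image and REMOVE records have no "after" image.
const VIEW_TYPES = ['KEYS_ONLY', 'NEW_IMAGE', 'OLD_IMAGE', 'NEW_AND_OLD_IMAGES'];

function imagesPresent(viewType, eventName) {
  if (!VIEW_TYPES.includes(viewType)) {
    throw new Error(`Unknown StreamViewType: ${viewType}`);
  }
  const includesNew = viewType === 'NEW_IMAGE' || viewType === 'NEW_AND_OLD_IMAGES';
  const includesOld = viewType === 'OLD_IMAGE' || viewType === 'NEW_AND_OLD_IMAGES';
  return {
    Keys: true, // key attributes are included for every view type
    NewImage: includesNew && eventName !== 'REMOVE',
    OldImage: includesOld && eventName !== 'INSERT',
  };
}

console.log(imagesPresent('NEW_IMAGE', 'REMOVE'));
// { Keys: true, NewImage: false, OldImage: false }
```

For example, with `NEW_IMAGE` a `REMOVE` record carries only the deleted item's keys, so a consumer that needs the deleted item's contents must use `OLD_IMAGE` or `NEW_AND_OLD_IMAGES`.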

Guaranteed Ordering and Delivery

DynamoDB Streams provides important guarantees:

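- Ordered Delivery: For each item, stream records appear in the same sequence as the actual modifications to that item

- Exactly-Once Records: Each stream record appears exactly once in the stream (consumers can still see a record more than once when a batch is retried, so processing should be idempotent)

- Change History: Every item modification is captured (no sampling)

- Ordering in Lambda: Lambda keeps each item's records in order, even when it processes several batches from the same shard concurrently; there is no ordering guarantee across different items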

These properties make Streams suitable for applications requiring reliable event processing.

Integration with AWS Lambda

DynamoDB Streams integrates natively with AWS Lambda, allowing you to:

- Trigger Lambda functions automatically when items change

- Process stream records in batches for efficiency

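- Handle retries automatically for failed invocations (by default, Lambda retries a failed batch until it succeeds or the records expire from the stream)

- Scale concurrency with the number of stream shards (one concurrent batch per shard by default, up to 10 with the ParallelizationFactor setting)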

This serverless integration pattern is one of the most common and powerful ways to use DynamoDB Streams.

Flexible Consumption Models

Besides Lambda, you can consume stream data using:

- DynamoDB Streams Kinesis Adapter: Lets you use the Kinesis Client Library (KCL) to read from streams

- AWS SDK: Direct API access for custom applications

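- AWS AppSync: For real-time GraphQL applications, typically through a Lambda function that forwards stream changes as mutations so that subscribers receive updates

- Managed Services: Such as Amazon EventBridge Pipes, which can use a DynamoDB stream as a source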

This flexibility enables various architectural patterns depending on your requirements.

Common Use Cases

1. Data Replication and Synchronization

One of the most common uses for DynamoDB Streams is replicating data to other storage systems:

- Cross-Region Replication: Replicate data to DynamoDB tables in other AWS regions (although Global Tables now provide this natively)

- Cross-Service Synchronization: Keep data in sync between DynamoDB and other AWS services like Amazon OpenSearch Service (formerly Amazon Elasticsearch Service), S3, or RDS

- Caching: Update in-memory caches like Redis or Memcached when data changes

- Data Warehousing: Stream changes to analytics platforms like Redshift or Snowflake
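For search-index synchronization, the transformation a Lambda function performs before calling the cluster can be sketched as follows: it turns a batch of stream records into a request body for the Elasticsearch/OpenSearch `_bulk` API, which takes newline-delimited action and document lines. The index name `products`, the string partition key `id`, and the helper names are assumptions for illustration; sending the request with an HTTP or OpenSearch client is left out.

```javascript
// Sketch: build an Elasticsearch/OpenSearch _bulk request body from stream records.
// Assumes a string partition key named "id" and a NEW_IMAGE or NEW_AND_OLD_IMAGES stream.
// Only S and N attributes are converted, to keep the example short.
function toDocument(image) {
  const doc = {};
  for (const [name, av] of Object.entries(image)) {
    if ('S' in av) doc[name] = av.S;
    else if ('N' in av) doc[name] = Number(av.N);
  }
  return doc;
}

function toBulkBody(records, indexName) {
  const lines = [];
  for (const record of records) {
    const id = record.dynamodb.Keys.id.S;
    if (record.eventName === 'REMOVE') {
      lines.push(JSON.stringify({ delete: { _index: indexName, _id: id } }));
    } else {
      // INSERT and MODIFY both replace the document with the item's latest image
      lines.push(JSON.stringify({ index: { _index: indexName, _id: id } }));
      lines.push(JSON.stringify(toDocument(record.dynamodb.NewImage)));
    }
  }
  // The bulk API expects newline-delimited JSON ending with a newline
  return lines.length > 0 ? lines.join('\n') + '\n' : '';
}

const sampleRecords = [
  {
    eventName: 'INSERT',
    dynamodb: { Keys: { id: { S: 'p1' } }, NewImage: { id: { S: 'p1' }, price: { N: '10' } } },
  },
  { eventName: 'REMOVE', dynamodb: { Keys: { id: { S: 'p2' } } } },
];
console.log(toBulkBody(sampleRecords, 'products'));
```

Using the DynamoDB key as the document `_id` means a replayed record overwrites the same document instead of creating a duplicate, which keeps this step safe to retry.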

Example architecture for Elasticsearch synchronization:

```
┌───────────────┐    ┌─────────────────┐    ┌───────────────┐    ┌───────────────┐
│   DynamoDB    │ -> │    DynamoDB     │ -> │    Lambda     │ -> │ Elasticsearch │
│     Table     │    │     Streams     │    │   Function    │    │    Service    │
└───────────────┘    └─────────────────┘    └───────────────┘    └───────────────┘
```

2. Event-Driven Processing

Streams enable reactive architectures where systems respond to data changes:

- Notifications: Send emails, SMS, or push notifications when specific items change

- Workflow Triggers: Start business processes when certain data conditions are met

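- Auditing: Record what changed and when for compliance purposes (stream records generally do not identify the caller, so store "who" as an attribute on the item if you need it)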

- Analytics: Update real-time dashboards and metrics
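Notification and workflow consumers usually care about specific transitions rather than every write. With the `NEW_AND_OLD_IMAGES` view type, a consumer can compare the two images to detect them. The sketch below checks for a hypothetical order whose `status` attribute changes to `SHIPPED` (both names are assumptions). Lambda event filtering can also discard non-matching records before your function is invoked, which reduces invocations.

```javascript
// Sketch: list the attributes whose values differ between OldImage and NewImage.
// Attribute values are plain objects such as { S: 'PENDING' }, so comparing their
// JSON text works here (nested maps with reordered keys would be reported as changed).
function changedAttributes(oldImage = {}, newImage = {}) {
  const names = new Set([...Object.keys(oldImage), ...Object.keys(newImage)]);
  return [...names].filter(
    (name) => JSON.stringify(oldImage[name]) !== JSON.stringify(newImage[name])
  );
}

// Hypothetical rule: notify only when an order's status changes to SHIPPED
function shouldNotifyShipped(record) {
  if (record.eventName !== 'MODIFY') return false;
  const { OldImage, NewImage } = record.dynamodb;
  return changedAttributes(OldImage, NewImage).includes('status')
    && NewImage?.status?.S === 'SHIPPED';
}
```

Checking that `status` actually changed (not just that it equals `SHIPPED`) avoids sending a second notification when some other attribute of an already-shipped order is updated.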

3. Materialized Views and Aggregations

Streams help maintain derived data structures:

- Materialized Views: Create and update pre-computed query results

- Aggregations: Calculate running totals, averages, or other statistics

- Denormalization: Update redundant data across multiple items for query efficiency

- Indexes: Build custom secondary indexes based on attributes not covered by GSIs
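A running aggregate can be maintained by turning each batch of records into per-key deltas and applying them as atomic counter updates (for example, an `UpdateItem` with an `ADD` expression) on a summary item. The sketch below computes per-category item counts from one batch; the `category` attribute and the summary-table idea are assumptions, and it needs `NEW_AND_OLD_IMAGES` so that deletes and category changes can be attributed to the right bucket.

```javascript
// Sketch: turn one batch of stream records into per-category count deltas.
// Assumes NEW_AND_OLD_IMAGES and a string attribute named "category" (hypothetical).
function categoryDeltas(records) {
  const deltas = new Map();
  const bump = (image, amount) => {
    const category = image && image.category && image.category.S;
    if (category) deltas.set(category, (deltas.get(category) || 0) + amount);
  };

  for (const { eventName, dynamodb } of records) {
    if (eventName === 'INSERT') {
      bump(dynamodb.NewImage, +1);
    } else if (eventName === 'REMOVE') {
      bump(dynamodb.OldImage, -1);
    } else if (eventName === 'MODIFY') {
      // An item that changes category moves from one bucket to the other;
      // an unchanged category nets out to zero.
      bump(dynamodb.OldImage, -1);
      bump(dynamodb.NewImage, +1);
    }
  }

  // Drop categories whose changes cancelled out, leaving only updates worth writing
  for (const [category, delta] of deltas) {
    if (delta === 0) deltas.delete(category);
  }
  return deltas;
}
```

Applying these deltas is not idempotent: if Lambda retries a batch after some deltas were already written, counts drift. Pair this with idempotent processing or recompute aggregates periodically.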

4. Cross-Application Communication

Streams can serve as an event bus between microservices:

- Loose Coupling: Services can react to data changes without direct dependencies

- Change Propagation: Notify downstream systems about upstream changes

- Eventually Consistent Operations: Implement background processes triggered by data changes

- Saga Patterns: Coordinate multi-step distributed transactions

Setting Up DynamoDB Streams

Enabling Streams on a New Table

When creating a new DynamoDB table using the AWS SDK:

```javascript
const AWS = require('aws-sdk');

const dynamoDB = new AWS.DynamoDB();

const params = {
  TableName: 'MyTable',
  KeySchema: [
    { AttributeName: 'id', KeyType: 'HASH' }
  ],
  AttributeDefinitions: [
    { AttributeName: 'id', AttributeType: 'S' }
  ],
  ProvisionedThroughput: {
    ReadCapacityUnits: 5,
    WriteCapacityUnits: 5
  },
  StreamSpecification: {
    StreamEnabled: true,
    StreamViewType: 'NEW_AND_OLD_IMAGES'
  }
};

dynamoDB.createTable(params, (err, data) => {
  if (err) console.error(err);
  else console.log('Table created with streams enabled:', data);
});
```

Enabling Streams on an Existing Table

To enable streams on an existing table:

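```javascript
const AWS = require('aws-sdk');

const dynamoDB = new AWS.DynamoDB();

// An existing stream's view type cannot be changed in place;
// disable the stream and re-enable it with the new view type instead
const params = {
  TableName: 'MyTable',
  StreamSpecification: {
    StreamEnabled: true,
    StreamViewType: 'NEW_AND_OLD_IMAGES'
  }
};

dynamoDB.updateTable(params, (err, data) => {
  if (err) console.error(err);
  else console.log('Streams enabled on existing table:', data);
});
```

Using AWS Console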

You can also enable streams through the AWS Management Console:

- Navigate to the DynamoDB service

- Select the table you want to modify

- Choose the “Exports and streams” tab

- In the “DynamoDB stream details” section, click “Enable”

- Select your desired view type (Keys only, New image, Old image, or New and old images)

- Click “Enable stream”

Consuming Stream Data

Lambda Integration

The most straightforward way to consume DynamoDB Streams is with AWS Lambda:

1. Create a Lambda Function

```javascript
// Assumes the stream uses NEW_AND_OLD_IMAGES; with other view types some images are absent
exports.handler = async (event) => {
  console.log('Received event:', JSON.stringify(event, null, 2));

  for (const record of event.Records) {
    console.log('Event ID:', record.eventID);
    console.log('Event Name:', record.eventName);

    if (record.eventName === 'INSERT') {
      console.log('New item:', JSON.stringify(record.dynamodb.NewImage));
      // Process new item...
    } else if (record.eventName === 'MODIFY') {
      console.log('Old item:', JSON.stringify(record.dynamodb.OldImage));
      console.log('New item:', JSON.stringify(record.dynamodb.NewImage));
      // Compare and process changes...
    } else if (record.eventName === 'REMOVE') {
      console.log('Deleted item:', JSON.stringify(record.dynamodb.OldImage));
      // Process deletion...
    }
  }

  return { status: 'success' };
};
```

2. Create an Event Source Mapping

Using the AWS CLI:

```shell
aws lambda create-event-source-mapping \
  --function-name MyStreamProcessor \
  --event-source-arn arn:aws:dynamodb:us-east-1:123456789012:table/MyTable/stream/2024-01-01T00:00:00.000 \
  --batch-size 100 \
  --starting-position LATEST
```

Or using the AWS SDK:

```javascript
const AWS = require('aws-sdk');

const lambda = new AWS.Lambda();

const params = {
  FunctionName: 'MyStreamProcessor',
  EventSourceArn: 'arn:aws:dynamodb:us-east-1:123456789012:table/MyTable/stream/2024-01-01T00:00:00.000',
  BatchSize: 100,
  StartingPosition: 'LATEST'
};

lambda.createEventSourceMapping(params, (err, data) => {
  if (err) console.error(err);
  else console.log('Event source mapping created:', data);
});
```

Using the DynamoDB Streams API Directly

For more control, you can use the DynamoDB Streams API directly:

```javascript
const AWS = require('aws-sdk');

const dynamodbStreams = new AWS.DynamoDBStreams();

const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Step 1: Describe the stream to get shard information.
// DescribeStream returns shards in pages, so follow LastEvaluatedShardId.
async function listShards(streamArn) {
  const shards = [];
  let exclusiveStartShardId;
  do {
    const { StreamDescription } = await dynamodbStreams.describeStream({
      StreamArn: streamArn,
      ExclusiveStartShardId: exclusiveStartShardId
    }).promise();
    shards.push(...StreamDescription.Shards);
    exclusiveStartShardId = StreamDescription.LastEvaluatedShardId;
  } while (exclusiveStartShardId);
  return shards;
}

async function processStream(streamArn) {
  try {
    const shards = await listShards(streamArn);
    console.log(`Found ${shards.length} shard(s)`);
    // Poll all shards concurrently: an open shard never ends, so awaiting
    // shards one at a time would block on the first open shard forever.
    // Note: shards created later (e.g. by splits) are not discovered here.
    await Promise.all(shards.map((shard) => processShard(streamArn, shard.ShardId)));
  } catch (error) {
    console.error('Error processing stream:', error);
  }
}

// Step 2: Get a shard iterator, then read the shard until it is closed
async function processShard(streamArn, shardId) {
  try {
    const { ShardIterator } = await dynamodbStreams.getShardIterator({
      StreamArn: streamArn,
      ShardId: shardId,
      ShardIteratorType: 'LATEST' // or 'TRIM_HORIZON' to start from the oldest available record
    }).promise();

    // Step 3: Get and process records. NextShardIterator is returned even when a call
    // yields no records; it is null only once a closed shard has been fully read.
    let iterator = ShardIterator;
    while (iterator) {
      const result = await dynamodbStreams.getRecords({
        ShardIterator: iterator,
        Limit: 100
      }).promise();

      for (const record of result.Records) {
        console.log('Processing record:', record.eventName, record.dynamodb.Keys);
        // Your processing logic here
      }

      iterator = result.NextShardIterator;
      await sleep(1000); // pause between polls to avoid throttling
    }
  } catch (error) {
    console.error(`Error processing shard ${shardId}:`, error);
  }
}

// Start processing
processStream('arn:aws:dynamodb:us-east-1:123456789012:table/MyTable/stream/2024-01-01T00:00:00.000');
```

Using the Kinesis Client Library (KCL)

For more advanced consumption patterns, you can use the DynamoDB Streams Kinesis Adapter with the Kinesis Client Library:

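```java
import com.amazonaws.services.dynamodbv2.model.AttributeValue;
import com.amazonaws.services.dynamodbv2.streamsadapter.model.RecordAdapter;
import com.amazonaws.services.kinesis.clientlibrary.interfaces.v2.IRecordProcessor;
import com.amazonaws.services.kinesis.clientlibrary.lib.worker.ShutdownReason;
import com.amazonaws.services.kinesis.clientlibrary.types.InitializationInput;
import com.amazonaws.services.kinesis.clientlibrary.types.ProcessRecordsInput;
import com.amazonaws.services.kinesis.clientlibrary.types.ShutdownInput;
import com.amazonaws.services.kinesis.model.Record;

import java.util.Map;

// Record processor for KCL 1.x, used with the DynamoDB Streams Kinesis Adapter
public class StreamsRecordProcessor implements IRecordProcessor {

    @Override
    public void initialize(InitializationInput initializationInput) {
        System.out.println("Initializing record processor for shard: "
                + initializationInput.getShardId());
    }

    @Override
    public void processRecords(ProcessRecordsInput processRecordsInput) {
        for (Record record : processRecordsInput.getRecords()) {
            // The adapter wraps each DynamoDB stream record; unwrap it instead of parsing raw bytes
            if (!(record instanceof RecordAdapter)) {
                continue;
            }
            try {
                com.amazonaws.services.dynamodbv2.model.Record streamRecord =
                        ((RecordAdapter) record).getInternalObject();
                String eventName = streamRecord.getEventName();

                if ("INSERT".equals(eventName) || "MODIFY".equals(eventName)) {
                    Map<String, AttributeValue> newImage = streamRecord.getDynamodb().getNewImage();
                    // Process new/modified item...
                } else if ("REMOVE".equals(eventName)) {
                    Map<String, AttributeValue> oldImage = streamRecord.getDynamodb().getOldImage();
                    // Process deleted item...
                }
            } catch (Exception e) {
                // Only logged here; send failed records to a dead-letter store in production
                System.err.println("Error processing record: " + e.getMessage());
            }
        }

        // Checkpoint once per batch, after every record has been handled
        try {
            processRecordsInput.getCheckpointer().checkpoint();
        } catch (Exception e) {
            System.err.println("Checkpoint failed: " + e.getMessage());
        }
    }

    @Override
    public void shutdown(ShutdownInput shutdownInput) {
        // At the end of a shard (TERMINATE), checkpoint so the KCL can move on to child shards
        if (shutdownInput.getShutdownReason() == ShutdownReason.TERMINATE) {
            try {
                shutdownInput.getCheckpointer().checkpoint();
            } catch (Exception e) {
                System.err.println("Final checkpoint failed: " + e.getMessage());
            }
        }
        System.out.println("Shutting down record processor");
    }
}
```

DynamoDB Streams vs. Kinesis Data Streams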

In 2020, AWS introduced the ability to stream DynamoDB changes to Kinesis Data Streams, providing an alternative to the native DynamoDB Streams. Here’s how they compare:

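| Feature | DynamoDB Streams | Kinesis Data Streams |
|---|---|---|
| Retention Period | 24 hours | Up to 365 days |
| Throughput | Scales automatically with the table's write activity; not configurable | Scales with the shards you provision, or automatically in on-demand mode |
| Consumer Count | AWS recommends no more than 2 simultaneous readers per shard (1 for global tables) | Multiple applications can read from the same stream |
| Fan-out | Limited | Enhanced fan-out for high-throughput consumers |
| Ordering and Duplicates | Per-item order; each record appears exactly once | Records can arrive out of order or more than once; use ApproximateCreationDateTime to reorder and deduplicate |
| Integration | Lambda, DynamoDB Streams API, KCL via the Kinesis Adapter | Lambda, KCL, Firehose, Managed Service for Apache Flink, etc. |
| Resharding | Automatic, not configurable | Manual control over shard splitting/merging (automatic in on-demand mode) |
| Pricing | No charge to enable; reads billed as streams read request units (free for Lambda triggers) | Kinesis shard-hour (or on-demand) and PUT charges, plus DynamoDB change data capture units |
| Processing Complexity | Simpler for basic use cases | More versatile but potentially more complex |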

When to choose DynamoDB Streams:

- For simple Lambda processing of table changes

- When 24-hour retention is sufficient

- When you want the most straightforward setup

- When cost optimization is important

When to choose Kinesis Data Streams:

- For longer retention of change events

- When multiple applications need to process the same events

- For higher throughput requirements

- When integrating with the broader Kinesis ecosystem

- For more control over scaling and processing

Performance Considerations

Throughput and Scaling

DynamoDB Streams scale automatically with your table, but there are some important considerations:

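- Shard Management: DynamoDB creates and splits stream shards automatically as the table grows; you cannot set the shard count

- Read Throughput: Each GetRecords call returns at most 1,000 records or 1 MB of data, and AWS recommends no more than two processes reading from the same shard at once to avoid throttling

- Lambda Concurrency: By default, Lambda processes one batch per shard at a time; the ParallelizationFactor setting allows up to 10 concurrent batches per shard

- Batch Size: Increasing Lambda batch size improves throughput, but a larger batch means more records are retried when one record fails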
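These settings imply a rough ceiling on how fast Lambda can drain a stream. The helper below is a back-of-the-envelope estimate under simplifying assumptions (every invocation receives a full batch and takes the same average time; polling overhead and retries are ignored), so treat the result as an upper bound rather than a prediction. The parameter names are our own; `parallelizationFactor` mirrors the event source mapping's `ParallelizationFactor` setting (1 to 10).

```javascript
// Back-of-the-envelope upper bound on how fast Lambda can drain a DynamoDB stream.
// Assumes every invocation gets a full batch and takes the same average time; polling
// overhead and retries are ignored, so real throughput will be lower.
function estimateMaxRecordsPerSecond({ shards, parallelizationFactor = 1, batchSize, avgDurationMs }) {
  if (!Number.isInteger(parallelizationFactor) || parallelizationFactor < 1 || parallelizationFactor > 10) {
    throw new RangeError('parallelizationFactor must be an integer from 1 to 10');
  }
  if (avgDurationMs <= 0) {
    throw new RangeError('avgDurationMs must be positive');
  }
  const concurrentBatches = shards * parallelizationFactor; // batches in flight at once
  const batchesPerSecondEach = 1000 / avgDurationMs; // sequential batches per concurrency slot
  return {
    concurrentBatches,
    recordsPerSecond: concurrentBatches * batchSize * batchesPerSecondEach,
  };
}

console.log(estimateMaxRecordsPerSecond({ shards: 4, parallelizationFactor: 2, batchSize: 100, avgDurationMs: 500 }));
// { concurrentBatches: 8, recordsPerSecond: 1600 }
```

If the table's write rate regularly exceeds this figure, the consumer falls behind and iterator age grows; raising the parallelization factor or shortening per-batch work are the usual levers.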

Latency

Streams introduce a small processing delay:

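- Propagation Delay: Changes typically appear in the stream in near real time

- Processing Delay: Lambda polls each shard a few times per second (typically well under a second of added latency), plus any batching window you configure with MaximumBatchingWindowInSeconds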

- Backpressure: If consumers can’t keep up with the stream, processing lag increases
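Backpressure shows up as a growing gap between when a change was written and when it is processed. Lambda reports this as the `IteratorAge` CloudWatch metric for stream event sources; inside a function you can approximate it per batch from each record's `ApproximateCreationDateTime`, which Lambda's DynamoDB stream events carry in epoch seconds. A minimal sketch (the function name is our own):

```javascript
// Sketch: approximate consumer lag for one batch, in seconds.
// Lambda's DynamoDB stream events carry ApproximateCreationDateTime as epoch seconds.
function maxLagSeconds(records, nowMs = Date.now()) {
  let maxLag = 0;
  for (const record of records) {
    const createdSec = record.dynamodb && record.dynamodb.ApproximateCreationDateTime;
    if (typeof createdSec === 'number') {
      maxLag = Math.max(maxLag, nowMs / 1000 - createdSec);
    }
  }
  return maxLag;
}
```

A function could log this value, or emit a custom metric when it crosses a threshold you choose (for example 300 seconds), to catch a consumer drifting toward the 24-hour retention limit.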

Error Handling

A robust error handling strategy is essential:

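- Partial Batch Failures: By default, Lambda retries the entire batch if any record fails processing; enabling ReportBatchItemFailures (or BisectBatchOnFunctionError) narrows the retry

- Poison Records: A single malformed record can block the entire shard, because by default Lambda keeps retrying until the record expires from the stream

- Runtime Errors: Lambda timeouts or memory limits can cause retries

- Retry Limits: Once MaximumRetryAttempts or MaximumRecordAgeInSeconds is reached, Lambda skips the batch; configure an on-failure destination (such as an SQS queue or SNS topic) to capture details about the discarded batch, which include its shard and sequence-number range rather than the records themselves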

Best Practices

Design Patterns

- Event Sourcing: Use streams as an immutable log of all data changes (persisting them elsewhere, since stream records expire after 24 hours)

  Application → DynamoDB → Streams → Event Log → Projections

- Command-Query Responsibility Segregation (CQRS): Separate write and read models

  Writes → DynamoDB → Streams → Read Models (Elasticsearch, etc.)

- Saga Pattern: Coordinate distributed transactions across microservices

  Service A → DynamoDB → Streams → Service B → DynamoDB → Streams → Service C

- Fan-out Processing: Use Kinesis Data Streams to enable multiple consumers

  DynamoDB → Kinesis Data Streams → Multiple Consumers

Implementation Tips

- Idempotent Processing: Design consumers to handle duplicate events safely

- Checkpointing: Maintain processing state to resume after failures

- Dead-Letter Queues: Capture failed events for analysis and replay

- Monitoring: Set up CloudWatch alarms for iterator age and processing errors

- Testing: Use DynamoDB Local for unit testing stream processors
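Idempotency matters because a record can reach your code more than once, for example when a batch is retried. Each stream record has an `eventID` that uniquely identifies it, so a consumer can skip records it has already applied. The sketch below keeps processed IDs in an in-memory `Set`, which only survives within one warm Lambda execution environment; a durable version would record each ID with a conditional write (for example `attribute_not_exists`) to a separate table. `processOnce` and `apply` are hypothetical names.

```javascript
// Sketch: apply each stream record at most once per execution environment, keyed by eventID.
// This in-memory Set is lost on cold starts and grows without bound; a durable version
// would store eventIDs with a conditional write (attribute_not_exists) in a separate table.
const processedEventIds = new Set();

function processOnce(record, apply) {
  if (processedEventIds.has(record.eventID)) {
    return false; // already applied, e.g. a redelivery after a retried batch
  }
  apply(record);
  // Mark as processed only after apply succeeds, so a failure is retried next time
  processedEventIds.add(record.eventID);
  return true;
}
```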

Stream Record Processing

- Minimal Processing: Keep Lambda functions light and focused

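- Parallel Processing: Process different event types in different Lambda functions (Lambda event filtering can route them), keeping in mind the recommendation of no more than two readers per shard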

- Batching: Aggregate similar operations before updating external systems

- Throttling: Implement rate limiting when integrating with external APIs
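Batching can be as simple as coalescing a batch so each item is written downstream once, in its final state. Within a batch, records for the same item arrive in modification order, so the last record per key wins. The sketch assumes a `NEW_IMAGE` or `NEW_AND_OLD_IMAGES` stream; `latestChangePerItem` is our own name.

```javascript
// Sketch: coalesce a batch so each item is written downstream once, in its final state.
// Records for the same item arrive in modification order, so the last one per key wins.
// Assumes key attributes serialize in a consistent order across records.
function latestChangePerItem(records) {
  const latest = new Map();
  for (const record of records) {
    const key = JSON.stringify(record.dynamodb.Keys);
    latest.set(key, {
      keys: record.dynamodb.Keys,
      deleted: record.eventName === 'REMOVE',
      image: record.dynamodb.NewImage || null, // null for deletes
    });
  }
  return [...latest.values()];
}
```

An item inserted and then modified within the same batch produces a single write with its latest image, which reduces calls to rate-limited external systems.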

Limitations and Challenges

Retention Period

The 24-hour retention limit means:

- Consumers must process records within this window

- Extended outages can result in data loss

- Long-running migrations may be challenging

- Consider Kinesis Data Streams for longer retention needs

Ordering Guarantees

While streams guarantee ordering for each item:

- No ordering guarantees across different items

- Only base-table changes are captured; global secondary index updates do not produce stream records of their own

- Time synchronization across distributed systems can be challenging

Shard Management

DynamoDB manages shards automatically:

- You cannot control shard count or splitting

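- Shard splits create parent and child shards; to preserve ordering, a consumer must finish reading a parent shard before its children (Lambda and the KCL adapter handle this for you)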

- No ability to specify custom partition keys for shards

Consistency Challenges

Some consistency considerations:

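- Stream records are written asynchronously, so consumers see a change shortly after it is committed rather than at write time

- A transaction (TransactWriteItems) produces one stream record per item, with no transaction identifier, and those records can land on different shards, so consumers cannot easily process them as a unit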

- Downstream systems may temporarily show inconsistent state during processing

Pricing

DynamoDB Streams pricing is relatively straightforward:

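- Stream Read Request Units: Each GetRecords call is billed as one streams read request unit. The first 2.5 million streams read request units per month are free; after that you pay $0.02 per 100,000 read request units (rates vary by Region)

- Lambda Triggers: GetRecords calls made by Lambda for DynamoDB triggers are free

- Storage: There’s no additional charge for storing the stream data for 24 hours

For larger workloads, Kinesis Data Streams may have different cost implications:

- Separate Kinesis charges for shards (or on-demand usage) and PUT payload units, plus DynamoDB change data capture units

- Potentially higher but more predictable costs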
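To put the DynamoDB Streams figures in perspective, here is the arithmetic as a small helper. It applies the free tier and the $0.02-per-100,000 rate quoted above (rates vary by Region, so check current pricing), assumes each `GetRecords` call counts as one read request unit, and applies only to consumers other than Lambda triggers, whose reads are not charged. The polling scenario in the example is hypothetical.

```javascript
// Back-of-the-envelope monthly cost of DynamoDB Streams reads by non-Lambda consumers.
// Figures are the free tier and per-100,000 rate quoted above; check current Regional pricing.
const FREE_READ_UNITS_PER_MONTH = 2_500_000;
const PRICE_PER_100K_UNITS_USD = 0.02;

function monthlyStreamsReadCostUsd(readRequestUnits) {
  const billable = Math.max(0, readRequestUnits - FREE_READ_UNITS_PER_MONTH);
  return (billable / 100_000) * PRICE_PER_100K_UNITS_USD;
}

// Hypothetical consumer: 4 shards, one GetRecords call per shard per second, 30 days
const callsPerMonth = 4 * 60 * 60 * 24 * 30;
console.log(callsPerMonth, monthlyStreamsReadCostUsd(callsPerMonth).toFixed(2));
// prints: 10368000 1.57
```

Even a consumer polling continuously stays inexpensive at this scale; read costs grow with the number of shards and polling frequency, not with the volume of data returned per call.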

Conclusion

DynamoDB Streams is a powerful capability that transforms DynamoDB from a simple database into an event source for reactive, event-driven architectures. By capturing and processing data changes in real-time, you can build systems that are more responsive, scalable, and maintainable.

Key takeaways:

- DynamoDB Streams provides a reliable sequence of data changes, ordered per item

- The integration with Lambda enables serverless event processing

- Common use cases include replication, event processing, and maintaining materialized views

- Choose between native DynamoDB Streams and Kinesis Data Streams based on retention, throughput, and integration needs

- Follow best practices for error handling, idempotent processing, and monitoring

Whether you’re building a simple data synchronization system or a complex event-driven microservices architecture, DynamoDB Streams provides the foundation for capturing and responding to data changes in real-time.