DynamoDB Streams vs Kinesis: Choosing the Right AWS Streaming Service

When building real-time data processing applications on AWS, you might find yourself choosing between Amazon DynamoDB Streams and Amazon Kinesis Data Streams. While both services enable you to capture and process streaming data, they serve different purposes and have distinct characteristics that make each better suited for specific use cases.

This article provides a comprehensive comparison to help you understand when to use DynamoDB Streams, when to use Kinesis Data Streams, and how they might work together in your architecture.

Overview: DynamoDB Streams vs Kinesis at a Glance

| Feature | DynamoDB Streams | Kinesis Data Streams |
|---|---|---|
| Primary Purpose | Capture data changes in DynamoDB | General-purpose data streaming |
| Data Source | DynamoDB tables only | Any custom source |
| Retention Period | 24 hours (fixed) | 24 hours to 365 days (configurable) |
| Scaling | Automatic, based on table activity | Manual shard provisioning or on-demand |
| Ordering | Per-partition key ordering | Per-shard ordering |
| Record Size | Up to 400KB (DynamoDB item size) | Up to 1MB |
| Common Consumers | Lambda, DynamoDB Streams Kinesis Adapter | Lambda, KCL, Firehose, Analytics |
| Pricing Model | No additional cost for Streams, pay for read operations | Pay per shard hour and PUT payload units |

Service Overview: Understanding the Core Differences

DynamoDB Streams

DynamoDB Streams is a feature of Amazon DynamoDB that captures a time-ordered sequence of item-level modifications in any DynamoDB table. When enabled, each change to data items in the table is recorded in the stream in near real-time.

Key characteristics include:

- Tied directly to a DynamoDB table

- Captures item-level changes (inserts, updates, deletes)

- Includes before and after images of modified items

- Automatically scales with the table’s throughput

- Primarily designed for reacting to database changes

Kinesis Data Streams

Amazon Kinesis Data Streams is a standalone service for collecting and processing large streams of data records in real time. It can handle various data sources, not just database changes, making it much more versatile.

Key characteristics include:

- Independent service for general data streaming

- Handles data from many different sources

- Configurable retention and throughput

- Requires explicit provisioning or on-demand capacity

- Designed for broad real-time data processing needs

Data Sources and Capture Models

DynamoDB Streams Data Sources

DynamoDB Streams can only capture data changes from DynamoDB tables. When enabled on a table, it automatically captures:

- New items added to the table (INSERT)

- Updates to existing items (MODIFY)

- Items removed from the table (REMOVE)

For each modification, the stream can include:

- Keys Only: Only the key attributes of the modified item

- New Image: The entire item, as it appears after it was modified

- Old Image: The entire item, as it appeared before it was modified

- New and Old Images: Both the new and the old images of the item

This model is optimized specifically for database change data capture (CDC).
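
As a rough illustration of how a view type is chosen, the sketch below builds the input for an `UpdateTable` request that enables a stream. The function name `buildEnableStreamParams` and the table name are hypothetical; the `StreamSpecification` shape follows the DynamoDB API, and the result would typically be passed to the SDK's `UpdateTableCommand`.

```javascript
// The four view types DynamoDB accepts for a stream.
const STREAM_VIEW_TYPES = ['KEYS_ONLY', 'NEW_IMAGE', 'OLD_IMAGE', 'NEW_AND_OLD_IMAGES'];

// Build UpdateTable input that turns on a stream for an existing table.
// `tableName` is whatever table the caller owns (hypothetical here).
function buildEnableStreamParams(tableName, viewType = 'NEW_AND_OLD_IMAGES') {
  if (!STREAM_VIEW_TYPES.includes(viewType)) {
    throw new Error(`Unknown StreamViewType: ${viewType}`);
  }
  return {
    TableName: tableName,
    StreamSpecification: { StreamEnabled: true, StreamViewType: viewType },
  };
}
```

Choosing `KEYS_ONLY` keeps stream records small when consumers re-read the item anyway, while `NEW_AND_OLD_IMAGES` is the usual choice when consumers need to compare before and after states.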

Kinesis Data Streams Data Sources

Kinesis Data Streams can ingest data from virtually any source:

- Application logs and events

- Clickstream data from websites

- IoT device telemetry

- Financial transactions

- Social media feeds

- Custom application events

- Infrastructure metrics

- Output from other AWS services

Data is pushed to Kinesis via the PutRecord or PutRecords API calls, which can be made from any application or service capable of making AWS API calls.
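
A minimal sketch of preparing `PutRecords` requests is shown below. It assumes events are plain objects with a `userId` field used as the partition key (both the field and the function name `buildPutRecordsBatches` are illustrative), and it splits the input into batches because a single `PutRecords` call accepts at most 500 records.

```javascript
// PutRecords accepts at most 500 records per request.
const MAX_RECORDS_PER_CALL = 500;

// Convert plain events into PutRecords inputs, split into batches.
// Assumption: each event has a `userId` that serves as the partition key.
function buildPutRecordsBatches(streamName, events) {
  const entries = events.map((event) => ({
    Data: Buffer.from(JSON.stringify(event)),
    PartitionKey: String(event.userId),
  }));

  const batches = [];
  for (let i = 0; i < entries.length; i += MAX_RECORDS_PER_CALL) {
    batches.push({
      StreamName: streamName,
      Records: entries.slice(i, i + MAX_RECORDS_PER_CALL),
    });
  }
  return batches;
}
```

Each batch could then be sent with the SDK's `PutRecordsCommand`. A real producer would also inspect `FailedRecordCount` in the response and retry the failed entries, since `PutRecords` can partially succeed.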

Stream Processing and Consumers

DynamoDB Streams Processing

DynamoDB Streams are typically processed by:

- AWS Lambda: The most common way to process DynamoDB Streams is through Lambda functions, which can be triggered automatically when new records appear in the stream.

- DynamoDB Streams Kinesis Adapter: This library allows you to use the Kinesis Client Library (KCL) to consume and process records from DynamoDB Streams.

- Custom applications: Using the AWS SDK to directly read from DynamoDB Streams.

Example of a Lambda handler triggered by a DynamoDB stream:

```javascript
// Lambda function triggered by DynamoDB Stream
exports.handler = async (event) => {
  for (const record of event.Records) {
    console.log('DynamoDB Record: %j', record.dynamodb);

    // Process based on event type
    if (record.eventName === 'INSERT') {
      // Handle new item
      const newItem = record.dynamodb.NewImage;
      // Process new item...
    } else if (record.eventName === 'MODIFY') {
      // Handle updated item
      const oldItem = record.dynamodb.OldImage;
      const newItem = record.dynamodb.NewImage;
      // Compare and process changes...
    } else if (record.eventName === 'REMOVE') {
      // Handle deleted item
      const oldItem = record.dynamodb.OldImage;
      // Process deletion...
    }
  }

  return { status: 'success' };
};
```

Kinesis Data Streams Processing

Kinesis Data Streams offers more processing options:

- AWS Lambda: Similar to DynamoDB Streams, Lambda functions can process Kinesis stream records.

- Kinesis Client Library (KCL): A specialized library for building applications that process data from Kinesis.

- Kinesis Data Analytics (since renamed Amazon Managed Service for Apache Flink): Real-time SQL or Apache Flink applications on streaming data.

- Kinesis Data Firehose (since renamed Amazon Data Firehose): Automatically load streaming data into destinations like S3, Redshift, Elasticsearch, or Splunk.

- AWS Glue: Process and transform streaming data for analytics.

- Apache Spark Streaming: Process Kinesis streams using Spark.

- Custom consumers: Any application using the AWS SDK.

This broader range of processing options makes Kinesis more versatile for complex streaming architectures.
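
For comparison with the DynamoDB Streams handler, here is a minimal sketch of the Lambda side of Kinesis processing. Kinesis delivers each record's payload base64-encoded under `record.kinesis.data`; the sketch assumes producers wrote JSON, and the names `decodeKinesisRecord` and `handleKinesisEvent` are illustrative.

```javascript
// Decode one Kinesis record from a Lambda event into a plain object.
// Assumption: the producer wrote a UTF-8 JSON document as the record data.
function decodeKinesisRecord(record) {
  const json = Buffer.from(record.kinesis.data, 'base64').toString('utf8');
  return {
    partitionKey: record.kinesis.partitionKey,
    sequenceNumber: record.kinesis.sequenceNumber,
    payload: JSON.parse(json),
  };
}

// Handler body; in a deployment it would be exported as the Lambda handler.
async function handleKinesisEvent(event) {
  const decoded = event.Records.map(decodeKinesisRecord);
  for (const item of decoded) {
    console.log('Kinesis record %s: %j', item.sequenceNumber, item.payload);
  }
  return { processed: decoded.length };
}
```

Unlike DynamoDB stream records, which arrive with a fixed structure (`Keys`, `NewImage`, `OldImage`), the Kinesis payload is an opaque blob, so producers and consumers have to agree on its format.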

Scaling and Throughput Considerations

DynamoDB Streams Scaling

DynamoDB Streams scaling is managed automatically:

- Throughput scales in proportion to the underlying DynamoDB table

- No manual shard management required

- Limited to two concurrent consumers per shard

- Throughput implicitly limited by DynamoDB’s write capacity

This simplicity is both an advantage (less to manage) and a limitation (less control).

Kinesis Data Streams Scaling

Kinesis Data Streams offers two capacity modes:

- Provisioned mode:

  - Manually specify the number of shards

  - Each shard provides 1MB/sec input, 2MB/sec output

  - Up to 1000 records/sec for writes

  - You’re responsible for monitoring and adjusting shard count

- On-demand mode:

  - Automatically adjusts capacity based on throughput needs

  - No provisioning or management of shards required

  - Higher cost per data volume compared to provisioned

This flexibility allows Kinesis to handle very high throughput scenarios but requires more active management in provisioned mode.
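
The per-shard limits above translate into a simple sizing rule for provisioned mode: take whichever limit needs the most shards. The sketch below is a back-of-the-envelope estimate only; the function name and input fields are illustrative, and it ignores headroom for traffic spikes and uneven partition keys.

```javascript
// Per-shard limits in provisioned mode, as listed above.
const WRITE_MB_PER_SHARD = 1;
const READ_MB_PER_SHARD = 2;
const WRITE_RECORDS_PER_SHARD = 1000;

// Estimate the minimum shard count that satisfies all three limits.
function estimateShards({ writeMBPerSec, readMBPerSec, recordsPerSec }) {
  return Math.max(
    1,
    Math.ceil(writeMBPerSec / WRITE_MB_PER_SHARD),
    Math.ceil(readMBPerSec / READ_MB_PER_SHARD),
    Math.ceil(recordsPerSec / WRITE_RECORDS_PER_SHARD)
  );
}
```

For example, a workload writing 4.5 MB/sec would need 5 shards even if its record rate alone would fit in 3, because the byte limit is the binding constraint.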

Retention and Data Access

DynamoDB Streams Retention

DynamoDB Streams has fixed retention characteristics:

- Records are retained for 24 hours (not configurable)

- After 24 hours, records are automatically removed

- No ability to extend retention period

- Designed for near-real-time processing, not historical analysis

Kinesis Data Streams Retention

Kinesis Data Streams offers flexible retention:

- Default retention period of 24 hours

- Can be extended up to 365 days (for additional cost)

- Longer retention enables replay of historical data

- Supports multiple applications reading from different positions

This flexibility makes Kinesis more suitable for scenarios where you might need to reprocess historical data or have multiple applications consuming at different rates.
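
Retention in Kinesis is set in hours, between 24 and 8760 (365 days). As a small sketch, the helper below converts a retention target in days into the input for an `IncreaseStreamRetentionPeriod` request; the helper name and stream name are hypothetical.

```javascript
const MIN_RETENTION_HOURS = 24;   // default retention
const MAX_RETENTION_HOURS = 8760; // 365 days

// Build input for IncreaseStreamRetentionPeriod from a retention target in days.
// Note: shortening retention uses a separate API (DecreaseStreamRetentionPeriod).
function buildRetentionParams(streamName, days) {
  const hours = days * 24;
  if (!Number.isInteger(hours) || hours < MIN_RETENTION_HOURS || hours > MAX_RETENTION_HOURS) {
    throw new RangeError(`Retention must be between 1 and 365 days, got ${days}`);
  }
  return { StreamName: streamName, RetentionPeriodHours: hours };
}
```

DynamoDB Streams has no equivalent setting, which is one reason change data is sometimes forwarded to Kinesis when replay beyond 24 hours is needed.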

Integration with AWS Services

DynamoDB Streams Integrations

DynamoDB Streams integrates primarily with:

- AWS Lambda (native event source)

- Amazon Aurora and other data stores (via custom replication code, typically running in Lambda)

- Custom applications via AWS SDK

Kinesis Data Streams Integrations

Kinesis Data Streams has broader integration with the AWS ecosystem:

- AWS Lambda

- Kinesis Data Firehose

- Kinesis Data Analytics

- Amazon EMR

- AWS Glue

- Amazon SageMaker

- Amazon CloudWatch Logs

- AWS IoT Core

- Amazon MSK (via Kafka Connect)

- Third-party services via AWS Marketplace

This extensive integration makes Kinesis more versatile for complex data processing pipelines.

Use Cases: When to Choose Each Service

Ideal Use Cases for DynamoDB Streams

DynamoDB Streams is best for:

- Reacting to database changes:

  - Updating search indexes when data changes

  - Sending notifications on record updates

  - Maintaining derived data in other storage systems

- Implementing the CQRS pattern:

  - Command Query Responsibility Segregation

  - Write to DynamoDB, propagate changes to read-optimized stores

- Cross-region replication:

  - While Global Tables is preferred, custom replication is possible

- Auditing and compliance:

  - Capturing all data modifications for audit trails

  - Recording who changed what and when

- Simple event-driven microservices architectures:

  - Triggering workflows when data changes

  - Service-to-service communication via data
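
For the auditing and derived-data cases, consumers often need to know which attributes actually changed. The sketch below compares the `OldImage` and `NewImage` of a stream record, assuming the stream uses the new-and-old-images view. The function name is illustrative, and the comparison is a simple one based on serialized values.

```javascript
// List attribute names whose values differ between the old and new images.
// Images are in DynamoDB attribute-value format, e.g. { status: { S: 'paid' } }.
// Limitation: values are compared by their JSON text, so maps with reordered
// keys or sets with reordered members may be reported as changed.
function changedAttributes(oldImage = {}, newImage = {}) {
  const names = new Set([...Object.keys(oldImage), ...Object.keys(newImage)]);
  return [...names]
    .filter((name) => JSON.stringify(oldImage[name]) !== JSON.stringify(newImage[name]))
    .sort();
}
```

Inside a stream handler this might be called as `changedAttributes(record.dynamodb.OldImage, record.dynamodb.NewImage)`. For an INSERT the old image is absent, so every attribute is reported; for a REMOVE the same holds for the old attributes.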

Ideal Use Cases for Kinesis Data Streams

Kinesis Data Streams is better for:

- High-volume data ingestion:

  - Clickstream analysis

  - IoT device telemetry

  - Log and event data collection

  - Financial transaction processing

- Real-time analytics:

  - Gaming telemetry analysis

  - Ad tech and marketing analytics

  - Real-time dashboards

  - Anomaly detection

- Multiple consumers of the same stream:

  - When multiple applications need the same data

  - Different processing requirements for the same events

- Complex stream processing:

  - When you need sophisticated stream processing

  - Integration with Apache Flink or Spark

  - Time-windowed analytics

- Long retention requirements:

  - When data might need to be reprocessed

  - Applications that process at different speeds

Familiar with these Dynamodb Challenges ?

- Writing one‑off scripts for simple DynamoDB operations

- Constantly switching between AWS profiles and regions

- Sharing and managing database operations with your team

You should try Dynomate GUI Client for DynamoDB

- Create collections of operations that work together like scripts

- Seamless integration with AWS SSO and profile switching

- Local‑first design with Git‑friendly sharing for team collaboration

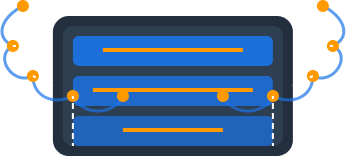

Combining DynamoDB Streams and Kinesis

In many advanced architectures, it makes sense to use both services together:

DynamoDB Streams to Kinesis Data Streams

A common pattern is to capture changes from DynamoDB Streams and forward them to Kinesis Data Streams:

- DynamoDB Streams captures database changes

- Lambda function processes these changes

- Lambda forwards the processed data to Kinesis

- Multiple consumers process the Kinesis stream

This approach provides:

- Database change capture via DynamoDB Streams

- Extended retention through Kinesis

- Multiple processing applications on the same change data

- Integration with services that work with Kinesis but not DynamoDB Streams
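
The forwarding step in this pattern can be sketched as a mapping from DynamoDB stream records to Kinesis `PutRecords` entries. The version below is an illustration under stated assumptions: the function name and the payload layout are hypothetical, and the partition key is derived from the item's key attributes so that all changes to one item go to the same shard and keep their order.

```javascript
// Convert one DynamoDB stream record into a Kinesis PutRecords entry.
// Assumption: item keys are strings or numbers (S or N attribute values).
function toKinesisEntry(record) {
  const keys = record.dynamodb.Keys;

  // Build a stable partition key such as "pk=user#1|sk=42".
  // Kinesis partition keys are limited to 256 characters.
  const partitionKey = Object.keys(keys)
    .sort()
    .map((name) => `${name}=${Object.values(keys[name])[0]}`)
    .join('|');

  return {
    PartitionKey: partitionKey,
    Data: Buffer.from(
      JSON.stringify({
        eventName: record.eventName,
        keys,
        newImage: record.dynamodb.NewImage ?? null,
        oldImage: record.dynamodb.OldImage ?? null,
      })
    ),
  };
}
```

A forwarding Lambda would map `event.Records` through this function and send the entries with `PutRecords`, retrying any entries the response marks as failed so that changes are not silently dropped.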

Example architecture:

```
DynamoDB Table → DynamoDB Stream → Lambda → Kinesis Data Stream → Multiple Consumers
                                                     ↓
                                            Kinesis Firehose → S3/Redshift
                                                     ↓
                                             Kinesis Analytics
```

Cost Comparison

DynamoDB Streams Pricing

DynamoDB Streams itself doesn’t incur additional charges, but you pay for:

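- Read requests to the stream (billed in streams read request units, separately from the table’s read capacity; GetRecords calls made by Lambda triggers are not charged)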

- Lambda invocations if using Lambda to process the stream

- Any additional AWS resources used in processing

This can make DynamoDB Streams cost-effective for moderate change volumes, especially if you’re already using DynamoDB.

Kinesis Data Streams Pricing

Kinesis Data Streams costs include:

- Provisioned mode:

  - Shard hours ($0.015 per shard hour)

  - PUT payload units ($0.014 per million)

  - Extended retention (if beyond 24 hours)

  - Data retrieval (for enhanced fan-out)

- On-demand mode:

  - Data ingested ($0.08 per GB)

  - Data retrieved ($0.05 per GB)

  - Extended retention (if applicable)

For high-volume or continuous streams, these costs can be significant, but the flexibility and scalability often justify the expense.
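
To make the provisioned-mode numbers concrete, here is a rough monthly estimate using the rates quoted above. Treat the rates as assumptions, since actual prices vary by region and change over time. A PUT payload unit is counted per 25 KB chunk of a record; the function name and the 730-hour month are illustrative choices.

```javascript
// Rates quoted in this article; treat them as assumptions, not current prices.
const SHARD_HOUR_USD = 0.015;
const PUT_UNITS_PER_MILLION_USD = 0.014;
const PAYLOAD_UNIT_BYTES = 25 * 1024; // records are billed in 25 KB chunks

// Estimate shard and PUT costs for provisioned mode (excludes extended
// retention and enhanced fan-out).
function estimateProvisionedMonthlyCost({ shards, recordsPerSec, avgRecordBytes, hours = 730 }) {
  const unitsPerRecord = Math.ceil(avgRecordBytes / PAYLOAD_UNIT_BYTES);
  const putUnits = recordsPerSec * unitsPerRecord * hours * 3600;
  const shardCost = shards * hours * SHARD_HOUR_USD;
  const putCost = (putUnits / 1e6) * PUT_UNITS_PER_MILLION_USD;
  return { shardCost, putCost, total: shardCost + putCost };
}
```

With 2 shards and 100 records/sec of 2 KB each, this works out to roughly $21.90 for shard hours and $3.68 for PUT payload units per month, which shows that at low volume the shard hours dominate.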

Development and Operational Considerations

DynamoDB Streams Considerations

When working with DynamoDB Streams:

- Shard management: Handled automatically based on table partitioning

- Monitoring: Use CloudWatch metrics like IteratorAge

- Error handling: Implement proper retry logic in Lambda or KCL consumers

- Testing: Use DynamoDB Local for local development

- Limits: Be aware that Lambda processes each shard with limited concurrency (one invocation per shard by default, adjustable through the parallelization factor)

- Ordering: Guaranteed order only within the same partition key
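
For the error-handling point, one option with Lambda is partial batch responses: the function reports the sequence number of the first record it could not process, and Lambda retries from that record instead of the whole batch. The sketch below assumes `ReportBatchItemFailures` is enabled on the event source mapping; `processBatch` and the `processRecord` callback are hypothetical names.

```javascript
// Process records in order and stop at the first failure, so that later
// changes to the same item are not applied ahead of the failed one.
// `processRecord` is a caller-supplied function that throws on failure.
function processBatch(records, processRecord) {
  for (const record of records) {
    try {
      processRecord(record);
    } catch (err) {
      console.error('Failed record %s: %s', record.dynamodb.SequenceNumber, err.message);
      // Lambda retries the batch starting at this sequence number.
      return { batchItemFailures: [{ itemIdentifier: record.dynamodb.SequenceNumber }] };
    }
  }
  // An empty list tells Lambda the whole batch succeeded.
  return { batchItemFailures: [] };
}
```

A handler would return the result of `processBatch(event.Records, ...)` directly. Because a record may be delivered more than once during retries, the processing step should be idempotent.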

Kinesis Data Streams Considerations

For Kinesis Data Streams:

- Shard management: In provisioned mode, monitor and adjust shard count

- Monitoring: Track metrics like WriteProvisionedThroughputExceeded

- Consumer design: Choose between standard and enhanced fan-out

- Hot shards: Design partition keys to avoid uneven data distribution

- Error handling: Implement checkpointing to handle failures

- Cost optimization: Right-size shards based on actual throughput needs
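
The hot-shard point can be explored offline. Kinesis maps each partition key to a 128-bit hash key using MD5, and each shard owns a range of those hash keys. The sketch below counts how a sample of partition keys would spread across shards, assuming the shards split the hash space evenly (true for a freshly created stream, not necessarily after resharding). The function names are illustrative.

```javascript
const crypto = require('crypto');

// Kinesis hashes the partition key with MD5 into a 128-bit integer.
function hashKeyFor(partitionKey) {
  const hex = crypto.createHash('md5').update(partitionKey, 'utf8').digest('hex');
  return BigInt('0x' + hex);
}

// Assumption: `shardCount` shards with evenly split hash key ranges.
function shardIndexFor(partitionKey, shardCount) {
  const rangeSize = (1n << 128n) / BigInt(shardCount);
  const index = hashKeyFor(partitionKey) / rangeSize;
  const last = BigInt(shardCount - 1);
  return Number(index < last ? index : last); // clamp the rounding remainder
}

// Count how many of the sample keys fall on each shard.
function shardDistribution(partitionKeys, shardCount) {
  const counts = new Array(shardCount).fill(0);
  for (const key of partitionKeys) {
    counts[shardIndexFor(key, shardCount)] += 1;
  }
  return counts;
}
```

Feeding a sample of real partition keys into `shardDistribution` gives a quick view of skew: a low-cardinality key such as a tenant ID with one dominant tenant will pile onto a single shard regardless of how many shards are provisioned.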

Tools for Working with Streams

DynamoDB Streams Tools

- AWS Management Console for enabling and monitoring streams

- AWS CLI for stream management

- AWS CloudFormation for infrastructure as code

- AWS Lambda console for function configuration

- CloudWatch for monitoring stream metrics

Kinesis Data Streams Tools

- AWS Management Console for stream management

- AWS CLI for advanced operations

- Kinesis Producer Library (KPL) for efficient data production

- Kinesis Client Library (KCL) for consumer applications

- Kinesis Agent for log file ingestion

- Third-party connectors and tools

For DynamoDB users, Dynomate provides a user-friendly interface for managing and monitoring your DynamoDB tables, including visualization tools that can help you understand your data patterns and optimize your stream processing workflows.

Conclusion: Making Your Decision

The choice between DynamoDB Streams and Kinesis Data Streams depends on your specific requirements:

- Choose DynamoDB Streams when your primary need is to react to changes in DynamoDB tables, when you prefer automatic scaling, and when you’re mainly concerned with processing database events in near real-time.

- Choose Kinesis Data Streams when you need a general-purpose streaming solution, when you have diverse data sources beyond DynamoDB, when you require longer retention periods, or when you need more sophisticated stream processing capabilities.

- Use both together when you want to capture DynamoDB changes but need the additional capabilities of Kinesis for downstream processing, retention, or integration with other AWS services.

By understanding the strengths and limitations of each service, you can design an architecture that efficiently meets your real-time data processing needs while optimizing for cost, scalability, and operational complexity.

When working with DynamoDB and its streams, tools like Dynomate can significantly improve your development experience by providing intuitive interfaces for visualizing your data, monitoring performance, and managing your DynamoDB resources more effectively.