DynamoDB vs BigQuery: Understanding the Key Differences

When exploring database options across cloud providers, you might encounter comparisons between Amazon DynamoDB and Google BigQuery. While both are fully managed cloud data services, they serve fundamentally different purposes and were designed to solve different problems.

This article explores the key differences between DynamoDB and BigQuery, helping you understand when to use each service and how they might complement each other in a modern data architecture.

Overview: Different Services for Different Needs

| Feature | Amazon DynamoDB | Google BigQuery |
|---|---|---|
| Service Type | NoSQL Database | Data Warehouse / Analytics Engine |
| Primary Purpose | Operational database for applications | Analytical processing and data insights |
| Data Model | Key-value and document store | SQL-based columnar storage |
| Latency | Single-digit milliseconds | Seconds to minutes (for queries) |
| Query Language | DynamoDB API, PartiQL (limited SQL) | Standard SQL |
| Best For | Transactional workloads, real-time apps | Big data analytics, BI, reporting |
| Cloud Provider | AWS | Google Cloud |

Understanding the Fundamental Difference

Before diving deeper, it’s critical to understand that DynamoDB and BigQuery were built to solve different problems:

- DynamoDB is an operational database (OLTP, Online Transaction Processing) designed for applications that need consistent, single-digit millisecond access to individual data items. It excels at serving real-time applications with high transaction volumes.

- BigQuery is an analytical data warehouse (OLAP, Online Analytical Processing) designed for analyzing large datasets using SQL. It excels at complex queries across terabytes or petabytes of data, returning results in seconds to minutes.

This fundamental difference drives the design choices, performance characteristics, and use cases for each service.

Data Ingestion and Structure

DynamoDB Data Ingestion

DynamoDB ingests data through individual operations:

- PutItem, UpdateItem, BatchWriteItem operations

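- One item at a time, or up to 25 items per BatchWriteItem call

- Optimized for high-frequency, small transactions

- Writes are visible immediately to strongly consistent reads (eventually consistent reads and global secondary indexes may lag briefly)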

- No complex ETL processes required
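Because BatchWriteItem accepts at most 25 put or delete requests per call, bulk writes are usually split into chunks. The sketch below only builds the request payloads and does not call AWS; the helper name `buildBatchWriteRequests` and the `Users` items are hypothetical. In a real application each payload would be sent with the SDK's `BatchWriteItemCommand`, retrying anything returned in `UnprocessedItems`.

```javascript
// Maximum number of put/delete requests DynamoDB accepts in one BatchWriteItem call
const MAX_BATCH_SIZE = 25;

// Hypothetical helper: split items into BatchWriteItem request payloads.
// Items must already be in DynamoDB's typed AttributeValue format ({ S: ... }, { N: ... }).
function buildBatchWriteRequests(tableName, items) {
  const requests = [];
  for (let i = 0; i < items.length; i += MAX_BATCH_SIZE) {
    const chunk = items.slice(i, i + MAX_BATCH_SIZE);
    requests.push({
      RequestItems: {
        [tableName]: chunk.map((item) => ({ PutRequest: { Item: item } })),
      },
    });
  }
  return requests;
}

// Usage: 60 items become three payloads of 25, 25, and 10 writes
const items = Array.from({ length: 60 }, (_, i) => ({ UserId: { S: `user${i}` } }));
const batches = buildBatchWriteRequests("Users", items);
console.log(batches.map((b) => b.RequestItems.Users.length)); // [ 25, 25, 10 ]
```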

BigQuery Data Ingestion

BigQuery is designed for batch or streaming data loads:

- Bulk loads from files (CSV, newline-delimited JSON, Avro, Parquet)

- Streaming inserts for near real-time analytics

- Scheduled pipelines from other sources (for example, via the BigQuery Data Transfer Service)

- ETL processes to transform and prepare data

- Loading from Google Cloud Storage or from local files
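BigQuery's JSON load jobs expect newline-delimited JSON: one complete object per line rather than a single JSON array. A minimal sketch of preparing rows in that layout before uploading them to Cloud Storage; the helper name `toNdjson` and the sample rows are illustrative assumptions.

```javascript
// Hypothetical helper: serialize rows as newline-delimited JSON (NDJSON),
// one complete JSON object per line, each line terminated by "\n".
function toNdjson(rows) {
  return rows.map((row) => JSON.stringify(row) + "\n").join("");
}

const rows = [
  { date: "2024-01-01", country: "US", revenue: 125.5 },
  { date: "2024-01-01", country: "DE", revenue: 80 },
];

const ndjson = toNdjson(rows);
process.stdout.write(ndjson);
// {"date":"2024-01-01","country":"US","revenue":125.5}
// {"date":"2024-01-01","country":"DE","revenue":80}
```

A file in this format can then be loaded with, for example, `bq load --source_format=NEWLINE_DELIMITED_JSON` and a matching schema.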

Data Model and Query Capabilities

DynamoDB Data Model

DynamoDB uses a key-value and document data model:

- Tables with items (similar to rows)

- Primary key required (partition key, optional sort key)

- No joins between tables

- No enforced schema beyond primary key

- Limited query patterns (key-based access on the table or its secondary indexes)

- Maximum item size of 400KB

DynamoDB queries are optimized for specific access patterns:

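```javascript
// Example DynamoDB API call (AWS SDK for JavaScript v3, run as an ES module)
import { DynamoDBClient, GetItemCommand } from "@aws-sdk/client-dynamodb";

const client = new DynamoDBClient({}); // region and credentials come from the environment

// Fetch a single item by its primary key; values use DynamoDB's typed format ({ S: ... })
const { Item } = await client.send(
  new GetItemCommand({
    TableName: "Users",
    Key: { UserId: { S: "user123" } },
  })
);
console.log(Item);
```

Or using PartiQL:

```sql
SELECT * FROM "Users" WHERE UserId = 'user123'
```

DynamoDB's PartiQL supports SELECT, INSERT, UPDATE, and DELETE, but not joins or aggregations, and a SELECT that does not filter on the partition key (with equality or IN) becomes a full table scan. It remains limited compared to full SQL.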
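Beyond single-item lookups, the main key-based access pattern is Query, which reads the items sharing one partition key and can narrow them by sort key. The sketch below only builds the Query input; the `Orders` table, its `CustomerId` partition key and `OrderDate` sort key, and the helper name are assumptions for illustration. The resulting object would be passed to `QueryCommand` from `@aws-sdk/client-dynamodb`.

```javascript
// Hypothetical helper: build Query input for "all orders for one customer placed
// on or after a given date". Assumes a table keyed by CustomerId (partition key)
// and OrderDate (sort key, stored as an ISO-8601 timestamp string).
function buildOrdersQuery(customerId, fromDate) {
  return {
    TableName: "Orders",
    // Query requires an equality condition on the partition key; the sort key
    // can use =, <, <=, >, >=, BETWEEN, or begins_with.
    KeyConditionExpression: "#pk = :pk AND #sk >= :from",
    ExpressionAttributeNames: { "#pk": "CustomerId", "#sk": "OrderDate" },
    ExpressionAttributeValues: {
      ":pk": { S: customerId },
      ":from": { S: fromDate },
    },
    ScanIndexForward: false, // descending sort-key order: newest orders first
  };
}

const input = buildOrdersQuery("user123", "2024-01-01");
console.log(input.KeyConditionExpression); // #pk = :pk AND #sk >= :from
```

Anything that cannot be phrased as a key condition on the table or an index (for example, "all orders over $100 across every customer") falls back to a Scan, which is where DynamoDB's query model stops being a good fit.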

BigQuery Data Model

BigQuery uses a SQL-compatible columnar data model:

- Tables with rows and columns

- Structured schema with defined data types

- Support for nested and repeated fields

- No primary key requirement

- Full SQL query capabilities including joins, aggregations

- Support for terabytes or petabytes of data

BigQuery allows complex analytical SQL:

```sql
-- my_dataset is a placeholder: BigQuery needs a dataset-qualified table name
-- unless a default dataset is set for the query.
SELECT
  date,
  country,
  SUM(revenue) AS total_revenue,
  COUNT(DISTINCT user_id) AS unique_users
FROM my_dataset.ecommerce_data
WHERE date BETWEEN '2024-01-01' AND '2024-03-01'
GROUP BY date, country
HAVING total_revenue > 10000
ORDER BY total_revenue DESC
```

This flexibility for complex analysis is BigQuery’s key strength.

Performance Characteristics

DynamoDB Performance

DynamoDB is optimized for low-latency operations:

- Single-digit millisecond response times

- Consistent performance regardless of data size

- Scales horizontally for throughput

- Throughput governed by read/write capacity units (provisioned mode) or on-demand request units

- Optimized for point lookups and simple range queries

- Not designed for complex analytics or aggregations
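Because throughput is measured in capacity units, it helps to see how request size maps to units. A simplified sketch of the documented sizing rules: a strongly consistent read consumes one unit per 4 KB (rounded up), an eventually consistent read half that, and a transactional read double; a write consumes one unit per 1 KB, doubled for transactional writes. The function names are hypothetical, and the sketch ignores how DynamoDB measures item size and how Query/Scan sum sizes across the items they read.

```javascript
// Capacity-unit arithmetic for one request on an item of `sizeBytes`.
const KB = 1024;

function readCapacityUnits(sizeBytes, consistency = "eventual") {
  const blocks = Math.max(1, Math.ceil(sizeBytes / (4 * KB))); // 4 KB blocks, at least one
  if (consistency === "strong") return blocks;
  if (consistency === "transactional") return blocks * 2;
  return blocks / 2; // eventually consistent reads cost half
}

function writeCapacityUnits(sizeBytes, transactional = false) {
  const blocks = Math.max(1, Math.ceil(sizeBytes / KB)); // 1 KB blocks, at least one
  return transactional ? blocks * 2 : blocks;
}

// A 6 KB item: 2 RCUs strongly consistent, 1 RCU eventually consistent, 6 WCUs per write
console.log(readCapacityUnits(6 * KB, "strong")); // 2
console.log(readCapacityUnits(6 * KB)); // 1
console.log(writeCapacityUnits(6 * KB)); // 6
```

The asymmetry (4 KB read blocks versus 1 KB write blocks) is one reason large, frequently updated items get expensive quickly.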

BigQuery Performance

BigQuery is optimized for query throughput on large datasets:

- Query latency measured in seconds to minutes

- Massively parallel processing of large datasets

- Scales to very large datasets, though query time and cost grow with the amount of data scanned

- Not optimized for single-record lookups

- Excellent for complex aggregations and joins

- Auto-scaling computational resources

Use Cases: When to Choose Each Service

Ideal DynamoDB Use Cases

DynamoDB excels at:

- Web and mobile backends: Serving user profiles, session data, product information

- Real-time applications: Gaming, IoT, trading platforms that need consistent low latency

- Microservices data storage: Each service with its own DynamoDB tables

- High-throughput transactional systems: Order processing, inventory management

- Session stores: Web/mobile application session management

- Leaderboards and activity feeds: Timeline data with key-based access

Ideal BigQuery Use Cases

BigQuery excels at:

- Data warehousing: Centralized repository for business data

- Business intelligence: Dashboards, reports, data exploration

- Large-scale data analysis: Processing terabytes or petabytes of data

- Ad-hoc analysis: Data scientists exploring patterns and insights

- Machine learning feature preparation: Creating features from historical data

- Log analysis: Processing and analyzing application logs at scale

Cost Model Comparison

DynamoDB Pricing

DynamoDB pricing is based on:

- Read/write capacity: Provisioned or on-demand

- Storage: Per GB-month

- Optional features: Global tables, backups, streams

- Data transfer: Out of AWS

DynamoDB can be very cost-effective for applications with predictable workloads, especially when using provisioned capacity with auto-scaling. On-demand capacity bills per request, so an idle table incurs no throughput charges (storage is still billed), which suits development environments and variable workloads.
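Comparing the two capacity modes comes down to simple arithmetic once current prices are plugged in. A sketch with every price passed in as a parameter; the values in the usage lines are placeholders chosen for easy arithmetic, not actual AWS rates, so check the DynamoDB pricing page for your region. It counts throughput only, not storage or optional features.

```javascript
// Rough monthly cost comparison between DynamoDB capacity modes.
// Every price is an input; the example values below are placeholders, not AWS rates.
const HOURS_PER_MONTH = 730; // average hours in a month

// Provisioned: pay per capacity unit per hour, whether or not it is used
function provisionedMonthlyCost({ rcu, wcu, pricePerRcuHour, pricePerWcuHour }) {
  return HOURS_PER_MONTH * (rcu * pricePerRcuHour + wcu * pricePerWcuHour);
}

// On-demand: pay per million read/write request units actually consumed
function onDemandMonthlyCost({ readUnits, writeUnits, pricePerMillionReads, pricePerMillionWrites }) {
  return (readUnits / 1e6) * pricePerMillionReads + (writeUnits / 1e6) * pricePerMillionWrites;
}

const provisioned = provisionedMonthlyCost({ rcu: 100, wcu: 50, pricePerRcuHour: 0.0001, pricePerWcuHour: 0.0004 });
const onDemand = onDemandMonthlyCost({ readUnits: 50e6, writeUnits: 10e6, pricePerMillionReads: 0.2, pricePerMillionWrites: 1.0 });
console.log(`provisioned: ${provisioned.toFixed(2)}, on-demand: ${onDemand.toFixed(2)}`);
```

Provisioned capacity is charged even when idle, so it tends to win only when utilization stays high; with long idle periods or sharp spikes, on-demand often comes out ahead.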

BigQuery Pricing

BigQuery pricing is based on:

- Storage: Per GB-month for data stored

- On-demand query processing: Per TiB of data scanned by queries

- Capacity-based pricing: Reserved slots (BigQuery editions, which replaced the older flat-rate plans) for predictable costs

- Streaming inserts: Additional costs for real-time data

BigQuery can be cost-effective for analytics workloads, especially when queries are optimized to scan only necessary data. The separation of storage and compute costs allows for storing large datasets economically while only paying for active analysis.
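Because storage is columnar, on-demand queries are billed for the columns they reference, not the whole table. A rough sketch of that estimate; the per-column sizes are hypothetical, and the price per TiB is left as an input to be replaced with the current on-demand rate. The 10 MB floor reflects BigQuery's minimum billed bytes per referenced table.

```javascript
// Rough on-demand cost estimate for a BigQuery query.
// Only the columns a query references are scanned, so bytes processed
// depend on the column list rather than the table's total size.
const GIB = 1024 ** 3;
const TIB = 1024 ** 4;
const MIN_BYTES_PER_TABLE = 10 * 1024 ** 2; // on-demand minimum: 10 MB per referenced table

// columnBytes maps column names to their (hypothetical) stored sizes for one table
function bytesScanned(columnBytes, referencedColumns) {
  const total = referencedColumns.reduce((sum, col) => {
    if (!Object.prototype.hasOwnProperty.call(columnBytes, col)) {
      throw new Error(`unknown column: ${col}`);
    }
    return sum + columnBytes[col];
  }, 0);
  return Math.max(total, MIN_BYTES_PER_TABLE);
}

// pricePerTib is an input: substitute the current on-demand rate for your region
function onDemandQueryCost(bytes, pricePerTib) {
  return (bytes / TIB) * pricePerTib;
}

// Hypothetical 2 TiB table; the revenue query above references only 4 of its columns
const columnBytes = { date: 100 * GIB, country: 100 * GIB, revenue: 200 * GIB, user_id: 300 * GIB, payload: 1348 * GIB };
const scanned = bytesScanned(columnBytes, ["date", "country", "revenue", "user_id"]);
console.log(scanned / GIB); // 700 (GiB scanned, not 2048)
console.log(onDemandQueryCost(scanned, 1)); // 0.68359375, i.e. that fraction of the per-TiB rate
```

Dropping the wide `payload` column from the query is what keeps the scan at about a third of the table, which is the practical meaning of "optimized to scan only necessary data".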

Cross-Cloud Considerations

Since these services exist on different cloud platforms, several factors might influence your decision:

- Existing cloud infrastructure: Whether you are already committed to AWS or Google Cloud

- Multi-cloud strategy: Whether you’re pursuing cloud diversity

- Team expertise: Familiarity with specific cloud platforms

- Compliance requirements: Data residency or regulatory needs

- Contract commitments: Existing enterprise agreements

Using DynamoDB and BigQuery Together

For organizations using both AWS and Google Cloud, these services can complement each other in a modern data architecture:

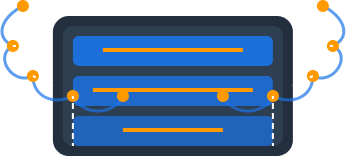

Data Flow from DynamoDB to BigQuery

A common pattern involves:

- Capture changes from DynamoDB using DynamoDB Streams

- Process changes with AWS Lambda

- Export data to CSV/JSON in Amazon S3

- Transfer to Google Cloud Storage using scheduled jobs (for example, Storage Transfer Service, which can read from Amazon S3)

- Load into BigQuery for analysis
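The Lambda step typically reshapes stream records into flat rows before writing them to S3. A minimal sketch, assuming a stream configured with `NEW_IMAGE` or `NEW_AND_OLD_IMAGES`; the row layout (`event_name`, `approx_time`, `keys`, `new_image`) and the function names are hypothetical, and the S3 write itself is left out. For production code, the SDK's `unmarshall` from `@aws-sdk/util-dynamodb` is the more complete converter.

```javascript
// Minimal converter from DynamoDB's typed AttributeValue JSON to plain values.
function fromAttributeValue(av) {
  const [type, value] = Object.entries(av)[0];
  switch (type) {
    case "S":
    case "BOOL":
      return value;
    case "N":
      return Number(value); // may lose precision beyond 2^53
    case "NULL":
      return null;
    case "M":
      return fromItem(value);
    case "L":
      return value.map(fromAttributeValue);
    case "SS":
      return [...value];
    case "NS":
      return value.map(Number);
    default:
      return value; // B and BS stay as base64-encoded strings
  }
}

function fromItem(item) {
  return Object.fromEntries(Object.entries(item).map(([k, v]) => [k, fromAttributeValue(v)]));
}

// Turn a DynamoDB Streams event into flat rows for an append-only change table.
function streamRecordsToRows(event) {
  return event.Records.map((record) => ({
    event_name: record.eventName, // "INSERT", "MODIFY", or "REMOVE"
    approx_time: new Date(record.dynamodb.ApproximateCreationDateTime * 1000).toISOString(),
    keys: fromItem(record.dynamodb.Keys),
    // NewImage is absent for REMOVE events and for KEYS_ONLY / OLD_IMAGE streams
    new_image: record.dynamodb.NewImage ? fromItem(record.dynamodb.NewImage) : null,
  }));
}

// Usage with a hand-written sample event (not captured from a real stream)
const sampleEvent = {
  Records: [
    {
      eventName: "INSERT",
      dynamodb: {
        ApproximateCreationDateTime: 1704067200, // epoch seconds
        Keys: { UserId: { S: "user123" } },
        NewImage: { UserId: { S: "user123" }, Score: { N: "42" }, Tags: { SS: ["a", "b"] } },
      },
    },
  ],
};
console.log(JSON.stringify(streamRecordsToRows(sampleEvent)[0]));
```

Each row can then be written as one JSON line to S3, which keeps the later BigQuery load a plain append of change events.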

This approach leverages DynamoDB’s strengths for operational data and BigQuery’s analytics capabilities. Organizations with multi-cloud strategies can maintain transactional systems in AWS while centralizing analytics in Google Cloud.

AWS Alternatives to BigQuery

If you’re committed to AWS, consider these services for the analytics roles BigQuery fills:

- Amazon Redshift: AWS’s data warehouse solution

- Amazon Athena: SQL queries against data in S3

- AWS Glue: ETL service for preparing data

- Amazon QuickSight: Business intelligence and visualization

For more information on AWS analytics options, see our comparison of DynamoDB vs Redshift.

Tools for Management and Development

DynamoDB Tools

AWS provides several tools for working with DynamoDB:

- AWS Management Console

- AWS CLI and SDKs

- NoSQL Workbench for DynamoDB

Third-party tools like Dynomate provide enhanced capabilities for:

- Visual query building

- Data visualization and exploration

- Multi-account management

- Performance optimization

- Table design and modeling

BigQuery Tools

Google provides comprehensive tools for BigQuery:

- Google Cloud Console, including the BigQuery web UI

- bq command-line tool

- Client libraries in multiple languages

- Connected Sheets (BigQuery in Google Sheets)

- Looker and Looker Studio for visualization

Migration Considerations

From DynamoDB to BigQuery

When migrating data from DynamoDB to BigQuery for analytics:

- Consider how to transform DynamoDB’s NoSQL structure to BigQuery’s columnar format

- Design a schema that optimizes for query performance

- Plan for ongoing synchronization if needed

- Account for DynamoDB’s 400KB item limit versus BigQuery’s much larger (but still finite) row-size limits
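Transforming schemaless items into BigQuery's columnar format starts with a schema. A sketch that infers one from a single plain-JavaScript item (for example, an unmarshalled DynamoDB item), emitting the JSON layout used by `bq` schema files. The helper names are hypothetical, and the rules are assumptions: empty lists default to `STRING`, `null` becomes a nullable `STRING`, and nested arrays (which BigQuery does not allow) are not handled. Real pipelines usually merge types across many items, since one sample can miss attributes.

```javascript
// Infer a BigQuery schema field from one value of a plain-JavaScript item.
function inferField(name, value) {
  if (Array.isArray(value)) {
    // Lists become REPEATED fields; the element type comes from the first element.
    if (value.length === 0) return { name, type: "STRING", mode: "REPEATED" };
    return { ...inferField(name, value[0]), mode: "REPEATED" };
  }
  if (value !== null && typeof value === "object") {
    // Maps become nested RECORD fields
    return { name, type: "RECORD", mode: "NULLABLE", fields: inferSchema(value) };
  }
  let type = "STRING"; // also the fallback for null
  if (typeof value === "boolean") type = "BOOLEAN";
  else if (typeof value === "number") type = Number.isInteger(value) ? "INTEGER" : "FLOAT";
  return { name, type, mode: "NULLABLE" };
}

function inferSchema(item) {
  return Object.entries(item).map(([name, value]) => inferField(name, value));
}

const schema = inferSchema({
  UserId: "user123",
  Score: 42,
  Ratio: 0.5,
  Active: true,
  Tags: ["a", "b"],
  Address: { City: "Berlin" },
});
console.log(JSON.stringify(schema, null, 2));
```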

From BigQuery to DynamoDB

This migration direction is less common but might occur when operationalizing analytics results:

- Identify specific access patterns needed for the application

- Design DynamoDB keys based on these patterns

- Consider DynamoDB’s 400KB item size limit

- Potentially denormalize or partition data differently
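When operationalizing results, one entity's output can exceed the 400KB item limit. One way to partition the data differently is to spread a long list across several items under the same partition key with a numbered sort key, so a single Query on that key returns every part. This is a sketch under stated assumptions: the `PK`/`SK` naming, the 80% safety margin, and the JSON-based size estimate are illustrative choices, and because DynamoDB computes item size with its own rules, the estimate is only approximate.

```javascript
// DynamoDB's maximum item size, including attribute names and values
const MAX_ITEM_BYTES = 400 * 1024;

// Rough size estimate: UTF-8 length of the JSON encoding (an approximation,
// not DynamoDB's own sizing formula), so keep a safety margin below the limit.
function approxSizeBytes(item) {
  return Buffer.byteLength(JSON.stringify(item), "utf8");
}

// Hypothetical helper: split one entity's results across items that share a
// partition key and use a zero-padded sort key (RESULTS#0000, RESULTS#0001, ...).
function partitionResults(entityId, results, maxBytes = MAX_ITEM_BYTES * 0.8) {
  const items = [];
  const makeItem = (rows) => ({
    PK: `ENTITY#${entityId}`,
    SK: `RESULTS#${String(items.length).padStart(4, "0")}`,
    results: rows,
  });
  const overhead = approxSizeBytes(makeItem([])); // keys plus an empty list
  let current = [];
  let size = overhead;
  for (const row of results) {
    const rowBytes = approxSizeBytes(row) + 1; // +1 for the separating comma
    if (current.length > 0 && size + rowBytes > maxBytes) {
      items.push(makeItem(current));
      current = [];
      size = overhead;
    }
    current.push(row); // a single row larger than maxBytes is not handled here
    size += rowBytes;
  }
  if (current.length > 0) items.push(makeItem(current));
  return items;
}

// Usage: 1,000 rows of roughly 1 KB each end up spread over several items
const rows = Array.from({ length: 1000 }, (_, i) => ({ metric: "m", value: i, note: "x".repeat(1000) }));
const parts = partitionResults("42", rows);
console.log(parts.length, parts[0].SK);
```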

Conclusion: Choosing the Right Tool for the Job

The comparison between DynamoDB and BigQuery highlights that they serve fundamentally different purposes:

- Choose DynamoDB when you need a high-performance, scalable NoSQL database for your applications that require consistent low-latency access to individual items. If you’re building web applications, mobile backends, or microservices on AWS, DynamoDB provides the operational database capabilities you need.

- Choose BigQuery when you need to analyze large datasets, run complex queries, generate reports, or extract insights from historical data. If your primary goal is data warehousing and analytics, especially on Google Cloud, BigQuery’s powerful query engine and scalable architecture make it the better choice.

- Consider using both in a complementary fashion if you have a multi-cloud strategy or need both operational and analytical capabilities. DynamoDB can serve your applications while periodically exporting data to BigQuery for in-depth analysis.

By understanding the strengths and proper use cases for each service, you can design a data architecture that leverages the right tool for each specific requirement in your application ecosystem.

For teams working with DynamoDB, specialized tools like Dynomate can significantly improve productivity by providing intuitive interfaces for data management, visualization, and performance optimization.