DynamoDB vs PostgreSQL: In-depth Comparison for 2025

DynamoDB vs PostgreSQL: Complete Comparison Guide for 2025

When choosing a database for your application, the decision between Amazon DynamoDB, a fully managed NoSQL service, and PostgreSQL, a powerful open-source relational database, can significantly impact your development approach, application performance, and operational overhead. This comprehensive guide explores the key differences, strengths, and trade-offs of each database to help you make an informed choice for your specific needs.

Table of Contents

- Introduction: Understanding DynamoDB and PostgreSQL

- Core Architecture and Data Model

- Scalability and Performance

- Query Capabilities and Flexibility

- Schema Evolution and Flexibility

- ACID Compliance and Transactions

- Hosting Options and Operational Overhead

- Scalability Patterns

- Costs and Pricing Models

- Development Experience

- Ecosystem and Integration

- Security Features

- When to Choose DynamoDB

- When to Choose PostgreSQL

- Hybrid Approaches

- Migration Considerations

- Conclusion

Introduction: Understanding DynamoDB and PostgreSQL

What is Amazon DynamoDB?

Amazon DynamoDB is a fully managed, serverless NoSQL database service provided by AWS. It offers seamless scalability with predictable performance at any scale. Launched in 2012, DynamoDB was designed to address the limitations of traditional databases for high-scale web applications. It uses a key-value and document data model and automatically manages the underlying infrastructure, partitioning, and replication.

What is PostgreSQL?

PostgreSQL (often called “Postgres”) is a powerful, open-source object-relational database system with over 30 years of active development. It emphasizes extensibility and SQL compliance. PostgreSQL is known for its proven architecture, reliability, data integrity, robust feature set, and extensibility. It supports both relational (SQL) and non-relational (JSON, hstore) data models.

Key Philosophical Differences

| Aspect | DynamoDB | PostgreSQL |

|---|---|---|

| Design Philosophy | Purpose-built for scale and simplicity | General-purpose with extensibility |

| Primary Data Model | NoSQL: Key-value and document | Relational with object-oriented features |

| Consistency Model | Customizable (eventual or strong) | ACID-compliant by default |

| Scaling Approach | Horizontal, managed partitioning | Primarily vertical with some horizontal options |

| Management Model | Fully managed, serverless | Self-managed or managed service (e.g., RDS) |

| Query Language | Limited API operations | Full SQL with procedural languages |

Core Architecture and Data Model

DynamoDB’s Architecture and Data Model

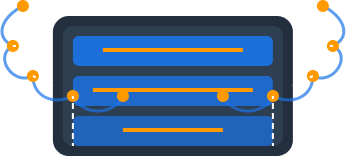

DynamoDB uses a distributed architecture with data automatically partitioned across multiple servers:

- Tables: Collections of items with a required primary key

- Items: Individual records (similar to rows) with a flexible set of attributes

- Attributes: Name-value pairs that can be scalar, document, or set types

- Primary Key: Simple (partition key only) or composite (partition key + sort key)

- Secondary Indexes: Global (GSI) and Local (LSI) indexes for alternate access patterns

- Partitioning: Automatic, based on partition key hash

DynamoDB’s schema-less nature means:

- Only primary key attributes are required

- Different items in the same table can have different attributes

- New attributes can be added to any item at any time

- Maximum item size is 400KB

Example DynamoDB Item:

{

"UserID": "U12345",

"Username": "johndoe",

"Email": "john@example.com",

"SignupDate": 1615420800,

"LastLoginDate": 1625097600,

"Preferences": {

"Theme": "Dark",

"EmailNotifications": true,

"MobileNotifications": false

},

"Roles": ["User", "Editor"],

"PostCount": 42,

"IsActive": true

}PostgreSQL’s Architecture and Data Model

PostgreSQL follows a traditional client-server relational database architecture:

- Databases: Collections of schemas

- Schemas: Collections of tables, functions, and data types

- Tables: Collections of rows with a predefined structure

- Rows: Individual records with a fixed set of columns

- Columns: Named attributes with specified data types

- Constraints: Rules enforcing data integrity (primary keys, foreign keys, etc.)

- Indexes: B-tree, Hash, GiST, SP-GiST, GIN, and BRIN for query optimization

PostgreSQL’s relational model requires:

- Tables with predefined schema

- Data normalization (typically)

- Explicit schema changes for structural modifications

- Relationships between tables via foreign keys

Example PostgreSQL Schema:

CREATE TABLE users (

user_id VARCHAR(10) PRIMARY KEY,

username VARCHAR(50) UNIQUE NOT NULL,

email VARCHAR(255) UNIQUE NOT NULL,

signup_date TIMESTAMP NOT NULL,

last_login_date TIMESTAMP,

post_count INTEGER DEFAULT 0,

is_active BOOLEAN DEFAULT TRUE

);

CREATE TABLE user_preferences (

user_id VARCHAR(10) REFERENCES users(user_id),

theme VARCHAR(50) DEFAULT 'Light',

email_notifications BOOLEAN DEFAULT TRUE,

mobile_notifications BOOLEAN DEFAULT FALSE,

PRIMARY KEY (user_id)

);

CREATE TABLE user_roles (

user_id VARCHAR(10) REFERENCES users(user_id),

role VARCHAR(50) NOT NULL,

PRIMARY KEY (user_id, role)

);JSON Capabilities in PostgreSQL

A notable feature of PostgreSQL is its robust JSON support, which allows it to work with document data alongside relational data:

- JSONB Data Type: Binary JSON format with indexing support

- JSON Operations: Rich set of operators and functions for JSON manipulation

- JSON Indexing: GIN indexes on JSONB for efficient querying

- JSON Path Expressions: SQL/JSON path language for JSON querying

This gives PostgreSQL some document database capabilities while maintaining its relational foundations.

Example PostgreSQL JSON Usage:

-- Store JSON directly in a table

CREATE TABLE user_profiles (

user_id VARCHAR(10) PRIMARY KEY,

profile JSONB NOT NULL

);

-- Insert document data

INSERT INTO user_profiles VALUES (

'U12345',

'{

"username": "johndoe",

"preferences": {

"theme": "Dark",

"notifications": true

},

"roles": ["User", "Editor"]

}'

);

-- Query JSON data

SELECT user_id

FROM user_profiles

WHERE profile->'preferences'->>'theme' = 'Dark'

AND 'Editor' = ANY(SELECT jsonb_array_elements_text(profile->'roles'));Scalability and Performance

DynamoDB Scalability

DynamoDB is designed for virtually unlimited scalability with consistent performance:

- Automatic Partitioning: Data automatically distributed across partitions

- Horizontal Scaling: Add capacity without downtime or performance impact

- Throughput Control: Specify read and write capacity or use on-demand scaling

- Performance Isolation: One table’s traffic doesn’t affect others

- Global Tables: Multi-region replication for global distribution

- Adaptive Capacity: Automatically adapts to workload patterns

Performance characteristics:

- Single-digit millisecond response times at any scale

- Ability to handle millions of requests per second

- No degradation as data volume grows

- Consistent performance during scaling events

PostgreSQL Scalability

PostgreSQL scaling traditionally focuses on vertical scaling but offers several options for horizontal scaling:

- Vertical Scaling: Larger instances with more CPU, memory, and storage

- Read Replicas: Distribute read traffic across multiple read-only instances

- Connection Pooling: Efficiently manage database connections

- Partitioning: Split large tables into smaller physical pieces

- Sharding: Manually distribute data across multiple PostgreSQL instances

- Citus Extension: Distributed PostgreSQL for horizontal scaling (now part of Azure)

Performance characteristics:

- Very fast for relational operations on properly indexed data

- Performance depends on server resources, configuration, and query optimization

- Can handle thousands of transactions per second on properly sized hardware

- Performance may degrade with very large datasets or complex queries without optimization

Performance Comparison

| Workload Type | DynamoDB | PostgreSQL |

|---|---|---|

| Simple Key Lookups | Extremely fast (ms) | Fast with proper indexing |

| Range Queries | Fast on sort key | Fast with proper indexing |

| Complex Joins | Not supported natively | Highly optimized |

| Aggregations | Limited support | Highly optimized |

| Write-heavy | Excellent | Good (depends on indexing overhead) |

| Mixed Workloads | Consistent performance | Varies based on query mix |

| Very Large Datasets | Maintains performance | May require partitioning |

| Global Distribution | Native with Global Tables | Requires custom replication |

Query Capabilities and Flexibility

The query capabilities of these databases represent one of their most significant differences.

DynamoDB Query Capabilities

DynamoDB offers a limited but focused set of operations:

- GetItem: Retrieve a single item by primary key

- Query: Find items with the same partition key, filter on sort key

- Scan: Examine every item in a table (expensive for large tables)

- PutItem/UpdateItem/DeleteItem: Item-level write operations

- BatchGetItem/BatchWriteItem: Multi-item operations

- TransactGetItems/TransactWriteItems: Multi-item transactions

- PartiQL: Limited SQL-compatible query language (added in 2020)

Query constraints:

- Queries must use the primary key or an index

- No native joins across tables

- Limited filtering capabilities on non-key attributes

- No built-in aggregation functions

Example DynamoDB Query (JavaScript/Node.js):

const params = {

TableName: 'UserPosts',

KeyConditionExpression: 'UserID = :userId AND PostDate > :startDate',

FilterExpression: 'contains(Tags, :tagValue)',

ExpressionAttributeValues: {

':userId': 'U12345',

':startDate': '2024-01-01',

':tagValue': 'AWS'

},

Limit: 10

};

dynamodb.query(params, (err, data) => {

if (err) console.error(err);

else console.log(data.Items);

});PostgreSQL Query Capabilities

PostgreSQL provides comprehensive SQL support with advanced features:

- Full SQL: Complete SQL:2016 support with extensions

- Complex Joins: Inner, outer, cross, natural, self joins

- Subqueries: Nested queries, correlated subqueries

- Common Table Expressions (CTEs): Recursive queries

- Window Functions: Analytical functions over result sets

- Aggregations: Standard and custom aggregate functions

- Full-text Search: Built-in text search capabilities

- Geospatial Queries: PostGIS extension for spatial data

- Procedural Languages: PL/pgSQL, PL/Python, PL/Perl, etc.

- User-defined Functions: Custom functions in multiple languages

- Stored Procedures: Server-side application logic

- Triggers: Automated responses to database events

- Views and Materialized Views: Virtual tables and cached query results

Example PostgreSQL Query:

-- Find users who have posted articles tagged with 'AWS' in 2024,

-- along with their post count and average engagement

WITH user_posts AS (

SELECT

u.user_id,

u.username,

COUNT(p.post_id) AS post_count,

AVG(p.views) AS avg_views

FROM

users u

JOIN posts p ON u.user_id = p.user_id

JOIN post_tags pt ON p.post_id = pt.post_id

JOIN tags t ON pt.tag_id = t.tag_id

WHERE

t.name = 'AWS'

AND p.post_date >= '2024-01-01'

GROUP BY

u.user_id, u.username

)

SELECT

up.*,

RANK() OVER (ORDER BY up.avg_views DESC) AS engagement_rank

FROM

user_posts up

WHERE

up.post_count >= 5

ORDER BY

engagement_rank;Query Flexibility Comparison

The difference in query capabilities significantly impacts application design:

| Aspect | DynamoDB | PostgreSQL |

|---|---|---|

| Query Language | Limited API with some SQL (PartiQL) | Full SQL with extensions |

| Join Operations | No native joins | Comprehensive join types |

| Filtering | Limited to key conditions & filters | Rich WHERE clause capabilities |

| Aggregations | Basic (client-side) | Comprehensive built-in functions |

| Analytical Queries | Limited support | Window functions, CTEs, etc. |

| Full-text Search | No native support | Built-in text search |

| Spatial Queries | No native support | PostGIS extension |

| Query Optimization | Simple key-based | Complex query planner & optimizer |

| Ad-hoc Queries | Limited flexibility | Highly flexible |

Schema Evolution and Flexibility

DynamoDB Schema Flexibility

DynamoDB offers extreme schema flexibility:

- Schema-less Design: Only primary key attributes are required

- On-the-fly Attribute Addition: Add new attributes to any item anytime

- Heterogeneous Items: Different items can have different attributes

- No Migration Required: Evolve your data model without downtime

- Sparse Attributes: Attributes can exist on some items but not others

This flexibility allows:

- Rapid application changes without database modifications

- Different item types in the same table (single-table design)

- Progressive enhancement of data models

- Easy handling of optional attributes

Example of DynamoDB Schema Evolution:

Simply start including new attributes in your write operations:

// Original data model

const originalItem = {

UserID: 'U12345',

Username: 'johndoe',

Email: 'john@example.com'

};

// Updated data model (no schema change required)

const updatedItem = {

UserID: 'U12345',

Username: 'johndoe',

Email: 'john@example.com',

PhoneNumber: '+1234567890', // New attribute

VerificationStatus: 'Verified', // New attribute

SecuritySettings: { // New nested attribute

TwoFactorEnabled: true,

LastPasswordChange: '2024-03-15'

}

};PostgreSQL Schema Evolution

PostgreSQL requires explicit schema changes but offers tools to manage them:

- ALTER TABLE: Add, modify, or drop columns

- Schema Migrations: Tools like Flyway, Liquibase, or custom scripts

- Transactional DDL: Schema changes within transactions

- Zero-downtime Migrations: Techniques for schema changes without downtime

- Flexible Columns: JSONB for semi-structured data

While less flexible than DynamoDB, PostgreSQL offers:

- Schema enforcement for data consistency

- Clear documentation of data structure

- Explicit versioning of database schema

- Type checking and validation

Example of PostgreSQL Schema Evolution:

-- Original schema

CREATE TABLE users (

user_id VARCHAR(10) PRIMARY KEY,

username VARCHAR(50) UNIQUE NOT NULL,

email VARCHAR(255) UNIQUE NOT NULL

);

-- Schema migration

BEGIN;

-- Add new columns

ALTER TABLE users ADD COLUMN phone_number VARCHAR(20);

ALTER TABLE users ADD COLUMN verification_status VARCHAR(20);

-- Add new table for security settings

CREATE TABLE user_security_settings (

user_id VARCHAR(10) PRIMARY KEY REFERENCES users(user_id),

two_factor_enabled BOOLEAN DEFAULT FALSE,

last_password_change DATE

);

COMMIT;Flexible Schema Approaches in PostgreSQL

PostgreSQL can mimic some of DynamoDB’s flexibility using JSONB:

-- Hybrid approach with structured and semi-structured data

CREATE TABLE users (

user_id VARCHAR(10) PRIMARY KEY,

username VARCHAR(50) UNIQUE NOT NULL,

email VARCHAR(255) UNIQUE NOT NULL,

-- Core fields as columns

created_at TIMESTAMP NOT NULL DEFAULT NOW(),

-- Flexible attributes as JSONB

attributes JSONB DEFAULT '{}'::jsonb

);

-- Add data with flexible schema

INSERT INTO users (user_id, username, email, attributes)

VALUES (

'U12345',

'johndoe',

'john@example.com',

'{

"phoneNumber": "+1234567890",

"preferences": {

"theme": "Dark",

"language": "en-US"

},

"devices": [

{"id": "d1", "type": "mobile", "lastSeen": "2024-03-10"},

{"id": "d2", "type": "tablet", "lastSeen": "2024-02-25"}

]

}'

);

-- Query against JSONB fields

SELECT

user_id,

username,

attributes->>'phoneNumber' AS phone,

attributes->'preferences'->>'theme' AS theme

FROM

users

WHERE

attributes->'preferences'->>'language' = 'en-US';ACID Compliance and Transactions

DynamoDB Transactions

DynamoDB added transaction support in 2018 with some limitations:

- Atomic Operations: All-or-nothing transactions

- Item-level Transactions: Operations on up to 25 items or 4MB of data

- Cross-Table Transactions: Support for items across different tables

- Optimistic Concurrency Control: Based on conditional writes

- No Isolation Levels: Fixed isolation behavior

- Regional Scope: Transactions limited to a single AWS region

Example DynamoDB Transaction:

const params = {

TransactItems: [

{

Put: {

TableName: 'Orders',

Item: {

OrderID: 'O1234',

CustomerID: 'C5678',

Amount: 99.95,

Status: 'Pending'

},

ConditionExpression: 'attribute_not_exists(OrderID)'

}

},

{

Update: {

TableName: 'Customers',

Key: { CustomerID: 'C5678' },

UpdateExpression: 'SET OrderCount = OrderCount + :inc',

ExpressionAttributeValues: { ':inc': 1 }

}

},

{

Update: {

TableName: 'Inventory',

Key: { ProductID: 'P9012' },

UpdateExpression: 'SET Stock = Stock - :qty',

ConditionExpression: 'Stock >= :qty',

ExpressionAttributeValues: { ':qty': 1 }

}

}

]

};

dynamodb.transactWriteItems(params, (err, data) => {

if (err) console.error(err);

else console.log('Transaction completed successfully');

});PostgreSQL Transactions

PostgreSQL provides comprehensive ACID transaction support:

- Full ACID Compliance: Atomicity, Consistency, Isolation, Durability

- Isolation Levels: Read uncommitted, Read committed, Repeatable read, Serializable

- Explicit Transaction Control: BEGIN, COMMIT, ROLLBACK

- Savepoints: Partial rollback within transactions

- Two-Phase Commit: For distributed transactions

- Advisory Locks: Application-level locking

- Row-Level Locking: Various lock modes for concurrency control

- MVCC: Multi-Version Concurrency Control for non-blocking reads

Example PostgreSQL Transaction:

BEGIN;

-- Create new order

INSERT INTO orders (order_id, customer_id, amount, status)

VALUES ('O1234', 'C5678', 99.95, 'Pending');

-- Update customer stats

UPDATE customers

SET order_count = order_count + 1

WHERE customer_id = 'C5678';

-- Update inventory

UPDATE inventory

SET stock = stock - 1

WHERE product_id = 'P9012';

-- Check if inventory update succeeded (stock was sufficient)

-- If not, this will be 0 and we'll roll back

IF NOT FOUND THEN

ROLLBACK;

RAISE EXCEPTION 'Insufficient stock for product P9012';

END IF;

-- Everything succeeded, commit the transaction

COMMIT;Transaction Capabilities Comparison

| Feature | DynamoDB | PostgreSQL |

|---|---|---|

| ACID Guarantees | Limited but present | Comprehensive |

| Transaction Size | Max 25 items/4MB | Limited by resources |

| Cross-Entity Transactions | Up to 25 items | Unlimited |

| Isolation Levels | Not configurable | Four levels |

| Savepoints | Not supported | Supported |

| Distributed Transactions | Regional only | Two-phase commit |

| Concurrency Control | Optimistic | MVCC + locks |

| Transaction Cost | 2x normal operations | Included |

Hosting Options and Operational Overhead

DynamoDB Hosting and Operations

As a fully managed service, DynamoDB significantly reduces operational overhead:

- Fully Managed: No servers to provision or maintain

- Serverless: Pay only for what you use, no capacity planning required

- Zero Administration: No software to install, patch, or upgrade

- Automatic Scaling: Handles traffic spikes automatically

- Multi-AZ Replication: Built-in high availability

- Automated Backups: Point-in-time recovery up to 35 days

- Monitoring: Integrated with CloudWatch metrics

- Global Tables: One-click multi-region deployment

Operational tasks reduced to:

- Capacity management (if using provisioned capacity)

- Cost optimization

- Access control via IAM

- Backup and recovery planning

PostgreSQL Hosting and Operations

PostgreSQL can be self-hosted or run as a managed service:

Self-hosted Options:

- On-premises hardware

- EC2 or other cloud VMs

- Kubernetes with operators

- Docker containers

Managed Service Options:

- Amazon RDS for PostgreSQL

- Amazon Aurora PostgreSQL-compatible

- Azure Database for PostgreSQL

- Google Cloud SQL for PostgreSQL

- Digital Ocean Managed PostgreSQL

- Many other cloud providers

Operational Responsibilities (self-hosted):

- Server provisioning and sizing

- PostgreSQL installation and configuration

- Replica configuration for high availability

- Backup and recovery planning

- Software patching and upgrades

- Performance tuning and optimization

- Security hardening

- Monitoring and alerting

- Scaling planning and execution

Operational Responsibilities (managed service):

- Instance sizing and configuration

- Connection management

- Performance tuning

- Monitoring and optimization

- Backup strategy

- Scaling planning

Operational Overhead Comparison

| Aspect | DynamoDB | Self-hosted PostgreSQL | Managed PostgreSQL |

|---|---|---|---|

| Server Management | None | Full responsibility | Minimal |

| Software Updates | Automatic | Manual | Mostly automatic |

| Scaling | Automatic | Manual | Semi-automatic |

| High Availability | Built-in | Manual setup | Usually built-in |

| Backups | Automatic | Manual setup | Automatic |

| Performance Tuning | Limited options | Full control | Moderate control |

| Monitoring | Built-in | Manual setup | Built-in |

| Global Distribution | Built-in | Custom solution | Limited |

| Team Skills Required | AWS knowledge | DBA expertise | Mixed |

Scalability Patterns

DynamoDB Scalability Patterns

DynamoDB’s built-in scalability requires specific design patterns:

- Partition Key Design: Choose high-cardinality keys for even distribution

- Write Sharding: Distribute hot keys across partitions

- GSI Overloading: Use the same GSI for multiple access patterns

- Time-Series Data: Use time period in partition key for even distribution

- Sparse Indexes: Create selective indexes for specific queries

- Materialized Aggregates: Pre-compute and store aggregated values

- DAX Caching: Use DynamoDB Accelerator for read scaling

PostgreSQL Scalability Patterns

PostgreSQL scalability typically involves multiple techniques:

- Connection Pooling: PgBouncer, Pgpool-II to manage connections

- Read Replicas: Distribute read traffic across replica instances

- Table Partitioning: Split large tables into smaller physical sections

- Materialized Views: Cache expensive query results

- Query Optimization: Indexes, query rewriting, and explain analysis

- Vertical Scaling: Larger instances with more resources

- Horizontal Scaling: Options include:

- Citus extension for distributed PostgreSQL

- Manual sharding at the application level

- Foreign data wrappers for federated queries

- Logical replication for custom topologies

Scaling Comparison for Common Scenarios

| Scenario | DynamoDB Approach | PostgreSQL Approach |

|---|---|---|

| High Read Volume | DAX caching, GSIs | Read replicas, connection pooling |

| High Write Volume | Partition key distribution | Write optimization, batch inserts |

| Large Data Volume | Automatic partitioning | Table partitioning |

| Complex Analytics | Export to specialized service | Materialized views, parallel query |

| Global Users | Global Tables | Custom replication or managed global database |

Costs and Pricing Models

DynamoDB Pricing Model

DynamoDB’s pricing includes several components:

-

Read/Write Capacity:

- Provisioned: Pay for allocated capacity

- On-demand: Pay per request

- Reserved: Discounted rate for 1 or A3-year commitment

-

Storage: Pay per GB-month

-

Additional Costs:

- Data transfer out

- Streams read requests

- Global Tables replication

- Backups and restores

- DAX (if used)

-

Free Tier:

- 25 WCUs and 25 RCUs

- 25 GB storage

Sample Cost Scenarios:

-

Small Application:

- 5 RCU, 5 WCU, 10GB storage

- Est. Monthly Cost: $10-15

-

Medium Application:

- 100 RCU, 50 WCU, 50GB storage

- Est. Monthly Cost: $100-150

-

Large Application:

- 1000 RCU, 500 WCU, 500GB storage

- Est. Monthly Cost: $1000-1500

PostgreSQL Pricing Model

PostgreSQL pricing varies based on hosting approach:

Self-hosted Costs:

- Server/VM infrastructure

- Storage (local or networked)

- Network data transfer

- Backup storage

- Monitoring tools

- Staff expertise (DBA time)

Managed Service Costs (e.g., RDS):

- Instance type and size

- Storage size and type

- Performance characteristics (IOPS)

- Multi-AZ deployment

- Read replicas

- Backup retention

- Data transfer

Sample Cost Scenarios (using RDS for PostgreSQL):

-

Small Application:

- db.t3.micro, 20GB storage, single-AZ

- Est. Monthly Cost: $15-25

-

Medium Application:

- db.m5.large, 100GB storage, multi-AZ

- Est. Monthly Cost: $250-350

-

Large Application:

- db.r5.2xlarge, 500GB storage, multi-AZ, 1 read replica

- Est. Monthly Cost: $1500-2000

Cost Comparison Factors

| Factor | DynamoDB | PostgreSQL |

|---|---|---|

| Predictability | Variable with usage | More predictable |

| Idle Costs | Low with on-demand | Same as active (except Aurora Serverless) |

| Scaling Costs | Linear with capacity | Step function with instance sizes |

| Storage Costs | ~$0.25/GB-month | $0.10-0.20/GB-month (depends on type) |

| Admin Overhead | Minimal | Higher (especially self-hosted) |

| Developer Time | Potentially higher due to modeling constraints | Lower for familiar SQL patterns |

Development Experience

Familiar with these Dynamodb Challenges ?

- Writing one‑off scripts for simple DynamoDB operations

- Constantly switching between AWS profiles and regions

- Sharing and managing database operations with your team

You should try Dynomate GUI Client for DynamoDB

- Create collections of operations that work together like scripts

- Seamless integration with AWS SSO and profile switching

- Local‑first design with Git‑friendly sharing for team collaboration

DynamoDB Developer Experience

Developing with DynamoDB has specific characteristics:

- API-based Access: Uses AWS SDK instead of connection strings

- NoSQL Modeling: Requires NoSQL data modeling expertise

- Access Pattern Driven: Design starts with query patterns

- Single-table Design: Often uses a single table for multiple entity types

- Denormalization: Duplicates data for query efficiency

- Local Development: DynamoDB Local for offline development

- Limited Tools: Fewer third-party tools compared to PostgreSQL

- AWS Integration: Seamless integration with AWS services

Example Development Workflow:

- Identify access patterns (e.g., “get user by ID”, “find orders by user and date”)

- Design table structure and key schema

- Implement using AWS SDK

- Test locally with DynamoDB Local

- Deploy and test in AWS environment

PostgreSQL Developer Experience

PostgreSQL offers a familiar development experience:

- SQL Interface: Standard SQL for queries and schema

- Rich Tooling: pgAdmin, DataGrip, DBeaver, etc.

- Strong Ecosystem: Extensive libraries, guides, and community support

- Relational Modeling: Familiar entity-relationship modeling

- Normalization: Structured approach to data modeling

- Migrations: Well-established migration tools and patterns

- Local Development: Easy local installation and containerization

- Query Optimization: Visual EXPLAIN and query analysis

Example Development Workflow:

- Design entity-relationship model

- Create database schema (tables, relationships, constraints)

- Implement data access layer with SQL or ORM

- Optimize queries using EXPLAIN and indexes

- Set up migrations for schema evolution

- Deploy to production environment

Development Trade-offs

| Aspect | DynamoDB | PostgreSQL |

|---|---|---|

| Learning Curve | Steeper for SQL developers | Familiar for SQL developers |

| Query Flexibility | Limited | Highly flexible |

| Schema Migrations | Minimal to none | Explicit process |

| Local Development | DynamoDB Local | Easy containerization |

| ORM Support | Limited | Extensive |

| Community Resources | Growing | Vast |

| Testing | More application logic | Database features assist |

| Debugging | Limited tools | Extensive tools |

Ecosystem and Integration

DynamoDB Ecosystem

DynamoDB integrates closely with AWS services:

- AWS Lambda: Serverless compute with direct integration

- AWS AppSync: GraphQL APIs with DynamoDB data source

- Amazon Cognito: User authentication and fine-grained access

- AWS IAM: Identity and access management

- Amazon S3: Store large objects referenced in DynamoDB

- AWS Glue: ETL operations for analytics

- Amazon Athena: Query DynamoDB exports in S3

- AWS Amplify: Simplified client integration

- DynamoDB Streams: Change data capture

- AWS Step Functions: Orchestration with DynamoDB operations

- Amazon CloudWatch: Monitoring and alarms

- AWS Backup: Centralized backup management

PostgreSQL Ecosystem

PostgreSQL has a rich ecosystem of extensions and tools:

-

Extensions:

- PostGIS: Spatial and geographic objects

- pgVector: Vector similarity search

- TimescaleDB: Time-series data

- pg_stat_statements: Query performance monitoring

- HyperLogLog: Approximate distinct counting

- Many others (~100 in standard distribution)

-

Tools and Integrations:

- ORM libraries in all major languages

- Connection pools (PgBouncer, Pgpool-II)

- Monitoring tools (pg_stat_statements, pganalyze)

- Backup tools (pg_dump, Barman, WAL-E/WAL-G)

- Replication tools (logical replication, Patroni)

- Migration tools (Flyway, Liquibase, Sqitch)

-

Cloud Service Integration:

- Most major cloud providers offer PostgreSQL services

- Serverless options (Aurora Serverless, CockroachDB)

- Analytics platforms with PostgreSQL connectors

Integration Comparison

| Integration Scenario | DynamoDB | PostgreSQL |

|---|---|---|

| Serverless Applications | Native integration | Requires connection management |

| Mobile/Web Backends | AWS Amplify, AppSync | Any web framework |

| Data Warehousing | Export to S3/Redshift | Direct connection to BI tools |

| Microservices | Event-driven with Streams | Traditional connections |

| Enterprise Applications | AWS-centric | Broad ecosystem support |

| Analytics | Limited native, export to specialized services | Rich analytical capabilities |

| GIS Applications | No native support | PostGIS extension |

| Multi-cloud | Limited to AWS | Available on all clouds |

Security Features

DynamoDB Security

DynamoDB security features include:

-

Access Control:

- Fine-grained IAM policies

- Condition expressions for row-level security

- IAM roles for applications

-

Encryption:

- Encryption at rest by default

- AWS-owned, AWS managed, or customer managed keys (KMS)

- Secure transport (HTTPS/TLS)

-

VPC Endpoints: Keep traffic within AWS network

-

Monitoring and Audit:

- CloudTrail for API activity logging

- CloudWatch for operational monitoring

-

Compliance:

- SOC 1/2/3, PCI DSS, HIPAA, FedRAMP, GDPR, etc.

PostgreSQL Security

PostgreSQL offers comprehensive security features:

-

Access Control:

- Role-based access control

- Row and column level security

- Schema separation

- Fine-grained privileges

-

Authentication:

- Password authentication

- Certificate authentication

- LDAP/Active Directory integration

- GSSAPI/Kerberos

- SCRAM authentication

-

Encryption:

- TLS for connections

- Data-at-rest encryption (filesystem level)

- pgcrypto extension for column-level encryption

-

Audit Logging:

- Extensive logging options

- pgaudit extension for detailed audit logging

-

Network Security:

- Host-based authentication (pg_hba.conf)

- Network ACLs and security groups (when cloud-hosted)

Security Comparison

| Security Aspect | DynamoDB | PostgreSQL |

|---|---|---|

| Authentication | AWS IAM | Multiple methods |

| Authorization | IAM policies | Role-based privileges |

| Row-Level Security | Condition expressions | Row-level security policies |

| Network Security | VPC endpoints | Network ACLs, pg_hba.conf |

| Encryption at Rest | Default, KMS integration | Filesystem or column-level |

| Audit Logging | CloudTrail | Built-in logging, pgaudit |

| Compliance | AWS compliance framework | Configurable for compliance |

When to Choose DynamoDB

DynamoDB is often the better choice in these scenarios:

1. Serverless Applications

- Applications built with AWS Lambda or other serverless technologies

- Event-driven architectures

- Variable workloads with unpredictable scaling needs

- Projects requiring minimal operational overhead

2. High-Scale Applications

- Applications requiring massive scale without performance degradation

- Services needing consistent single-digit millisecond response times

- Workloads with unpredictable traffic patterns and spikes

- Global applications requiring multi-region deployment

3. Simple Access Patterns

- Key-value lookups or simple document retrieval

- Well-defined, predictable access patterns

- Limited need for complex queries or joins

- Service-oriented architectures with bounded contexts

4. AWS-Integrated Applications

- Applications deeply integrated with AWS ecosystem

- Microservices using AWS services for orchestration

- Projects leveraging AWS Amplify, AppSync, or similar technologies

- Teams with strong AWS expertise

5. Specific Use Cases

- Session management and user profiles

- Shopping carts and product catalogs

- Real-time leaderboards and gaming state

- IoT data ingestion

- Time-series data with simple access patterns

- Content metadata (with larger content in S3)

When to Choose PostgreSQL

PostgreSQL is typically better suited for these scenarios:

1. Complex Query Requirements

- Applications requiring sophisticated queries and joins

- Business intelligence and reporting systems

- Data with complex relationships between entities

- Systems needing advanced analytical capabilities

2. Strong Transactional Requirements

- Financial systems with complex transaction logic

- Applications requiring advanced ACID guarantees

- Workflows with complex integrity constraints

- Systems needing fine-tuned transaction isolation

3. Schema Enforcement

- Applications benefiting from strict data validation

- Regulated environments requiring schema governance

- Complex domains with well-defined data relationships

- Teams preferring explicit schema migrations

4. Specialized Workloads

- Geospatial applications (using PostGIS)

- Full-text search requirements

- Applications using PostgreSQL-specific features

- Time-series data with complex query patterns

- Graph data with recursive relationship queries

5. Database Expertise

- Teams with strong SQL and relational database skills

- Organizations with existing PostgreSQL expertise

- Projects with access to database administration resources

- Applications where query optimization is critical

Hybrid Approaches

Many modern architectures benefit from using both databases for different aspects of an application:

1. Polyglot Persistence

- Use DynamoDB for high-velocity operational data

- Use PostgreSQL for complex relational data and analytics

- Establish synchronization patterns between them

2. CQRS Pattern

- Command Query Responsibility Segregation

- Write operations to DynamoDB for scalability

- Replicate data to PostgreSQL for complex querying

- Use DynamoDB Streams to keep PostgreSQL updated

3. Specialized Usage

- User sessions and profiles in DynamoDB

- Business core data in PostgreSQL

- Event sourcing with DynamoDB Streams

- Analytics and reporting in PostgreSQL

4. Migration Strategy

- Start with PostgreSQL for familiar development

- Migrate performance-critical components to DynamoDB

- Maintain PostgreSQL for complex queries and reporting

Migration Considerations

When migrating between PostgreSQL and DynamoDB, consider these factors:

PostgreSQL to DynamoDB

-

Data Modeling Transformation:

- Denormalize relational schemas

- Design around access patterns

- Implement single-table design where appropriate

- Plan for duplicating data for query efficiency

-

Query Adaptation:

- Rewrite complex SQL as multiple simpler operations

- Move joins to application code

- Implement client-side filtering and aggregation

- Create additional indexes for secondary access patterns

-

Migration Tools:

- AWS Database Migration Service (DMS)

- AWS Data Pipeline

- Custom ETL with AWS Glue

-

Challenges:

- Adapting to NoSQL data modeling

- Handling complex transactions

- Rewriting complex queries

- Moving business logic from SQL to application code

DynamoDB to PostgreSQL

-

Schema Design:

- Define proper relational schema

- Normalize denormalized data

- Create appropriate constraints and relationships

- Design indexes for performance

-

Data Transformation:

- Split single-table designs into multiple related tables

- Convert nested attributes to related tables

- Create join tables for many-to-many relationships

- Extract data from composite keys

-

Migration Tools:

- Export to S3 and import to PostgreSQL

- Custom ETL with AWS Glue or similar

- Application-level migration scripts

-

Challenges:

- Maintaining performance for key-based access patterns

- Handling schema evolution

- Scaling for high-throughput workloads

- Managing connection pooling and resources

Switching from Dynobase? Try Dynomate

Developers are switching to Dynomate for these key advantages:

Better Multi-Profile Support

- Native AWS SSO integration

- Seamless profile switching

- Multiple accounts in a single view

Developer-Focused Workflow

- Script-like operation collections

- Chain data between operations

- Full AWS API logging for debugging

Team Collaboration

- Git-friendly collection sharing

- No account required for installation

- Local-first data storage for privacy

Privacy & Security

- No account creation required

- 100% local data storage

- No telemetry or usage tracking

Conclusion

Both Amazon DynamoDB and PostgreSQL are powerful database systems, but they serve different needs and have distinct strengths and trade-offs:

DynamoDB excels at:

- Serverless, fully managed operation

- Virtually unlimited scalability with consistent performance

- Simple, key-based access patterns

- Global distribution and high availability

- Integration with AWS services

- Minimal operational overhead

PostgreSQL excels at:

- Complex relational data models

- Rich, flexible querying capabilities

- Strong transactional guarantees

- Extensibility and specialized workloads

- Advanced data integrity features

- Familiar SQL interface and tooling

The right choice depends on your specific requirements, team expertise, operational constraints, and application characteristics. Many modern applications benefit from a polyglot persistence approach, using both databases for the workloads they handle best.

Dynomate: Modern DynamoDB GUI Client

Built for real developer workflows with AWS profile integration, multi-session support, and team collaboration.

No account needed. Install and start using immediately.

- Table browsing across regions

- Flexible query & scan interface

- AWS API logging & debugging

When evaluating these databases, consider:

- The complexity of your data relationships and queries

- Your scalability and performance requirements

- Your team’s expertise and familiarity

- Your operational resources and constraints

- Your existing technology stack and integrations

- Your long-term data strategy and future needs

By understanding the strengths and limitations of each database, you can make an informed decision that best supports your application’s success.