DynamoDB vs RDBMS: Key Differences Explained (2025 Guide)

DynamoDB vs Relational Databases (RDBMS): Complete Comparison Guide

When choosing a database for your application, the decision between Amazon DynamoDB and traditional relational database management systems (RDBMS) can significantly impact your application’s performance, scalability, and development approach. This comprehensive guide explores the key differences between these database paradigms to help you make an informed decision.

Table of Contents

- Introduction: DynamoDB and RDBMS at a Glance

- Data Model and Schema Design

- Query Capabilities and Flexibility

- Performance and Scalability

- Consistency Models

- Transactions and ACID Compliance

- Pricing and Cost Structure

- Management and Operational Overhead

- When to Choose DynamoDB

- When to Choose RDBMS

- Hybrid Approaches

- Migration Considerations

- Conclusion

Introduction: DynamoDB and RDBMS at a Glance

What is Amazon DynamoDB?

Amazon DynamoDB is a fully managed NoSQL database service provided by AWS. It offers seamless scalability, consistent performance at any scale, and a serverless operational model. As a key-value and document database, DynamoDB is designed for applications that need high-throughput, low-latency data access without the complexity of managing database infrastructure.

What are Relational Database Management Systems (RDBMS)?

Relational Database Management Systems like MySQL, PostgreSQL, Oracle, or SQL Server use a structured approach based on tables, rows, and columns, with relationships defined between tables. They rely on SQL (Structured Query Language) for data manipulation and enforce schema constraints to maintain data integrity.

Core Philosophical Differences

| Aspect | DynamoDB | RDBMS |

|---|---|---|

| Design Philosophy | Optimized for scale, performance, and operational simplicity | Optimized for data integrity, complex queries, and normalized data |

| Data Structure | Schema-less, flexible document structure | Rigid schema with typed columns and constraints |

| Development Pattern | Design for access patterns first | Design for data relationships first |

| Query Approach | Simple, key-based access with limited query options | Rich SQL language with complex joins and aggregations |

| Scaling Strategy | Horizontal scaling (partition-based) | Traditionally vertical scaling, with some horizontal options |

Data Model and Schema Design

DynamoDB’s Flexible Schema

DynamoDB uses a schema-less data model where:

- Each item (row) is a collection of attributes (fields)

- Items in the same table can have different attributes

- Only the primary key attributes are required

- Attributes can contain scalar values, sets, lists, or nested maps

- New attributes can be added to items at any time

This flexibility allows for easy evolution as application requirements change and makes working with heterogeneous data more straightforward.

Example DynamoDB Item:

    {
      "UserID": "U12345",
      "Name": "John Smith",
      "Email": "john@example.com",
      "Preferences": {
        "Theme": "Dark",
        "Notifications": true
      },
      "DeviceIDs": ["D001", "D087", "D456"],
      "LastLogin": 1657843230
    }

RDBMS’s Structured Schema

Relational databases use a structured approach where:

- Tables have predefined columns with specific data types

- Schema changes require explicit ALTER TABLE operations

- Relationships between tables are defined through foreign keys

- Normalization principles minimize data redundancy

- Constraints enforce data integrity (e.g., unique, not null)

This structure ensures data consistency but can be less flexible when requirements change frequently.

Example RDBMS Tables:

    CREATE TABLE Users (
      UserID VARCHAR(10) PRIMARY KEY,
      Name VARCHAR(100) NOT NULL,
      Email VARCHAR(100) UNIQUE NOT NULL,
      LastLogin TIMESTAMP
    );

    CREATE TABLE UserPreferences (
      UserID VARCHAR(10),
      Setting VARCHAR(50),
      Value VARCHAR(255),
      PRIMARY KEY (UserID, Setting),
      FOREIGN KEY (UserID) REFERENCES Users(UserID)
    );

    CREATE TABLE UserDevices (
      UserID VARCHAR(10),
      DeviceID VARCHAR(10),
      DateAdded TIMESTAMP,
      PRIMARY KEY (UserID, DeviceID),
      FOREIGN KEY (UserID) REFERENCES Users(UserID)
    );

Key Modeling Differences

DynamoDB:

- Denormalization is common and encouraged

- Single-table design patterns can store multiple entity types together

- Composite keys create hierarchical relationships

- Data duplication is acceptable to optimize for query patterns

RDBMS:

- Normalization minimizes redundancy

- Multiple tables with relationships represent different entities

- Foreign keys and joins create relationships between data

- Third normal form (3NF) is a common design goal
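To make the composite-key and single-table ideas above more concrete, here is a minimal in-memory sketch. The `PK`/`SK` attribute names, the `USER#` and `ORDER#` prefixes, and the `queryByPartition` helper are illustrative assumptions rather than DynamoDB APIs; the helper only imitates a Query that matches a partition key and an optional `begins_with` condition on the sort key.

```javascript
// Hypothetical key builders for a single-table design (the naming is an assumption).
const userKey = (userId) => ({ PK: `USER#${userId}`, SK: 'PROFILE' });
const orderKey = (userId, orderId) => ({ PK: `USER#${userId}`, SK: `ORDER#${orderId}` });

// One "table" holding two entity types; a user and their orders share a partition key.
const table = [
  { ...userKey('U12345'), Name: 'John Smith' },
  { ...orderKey('U12345', 'O001'), Amount: 25.0 },
  { ...orderKey('U12345', 'O002'), Amount: 74.95 },
  { ...userKey('U99999'), Name: 'Jane Doe' },
];

// In-memory stand-in for a Query: exact match on the partition key and,
// optionally, a sort key prefix (similar to begins_with(SK, :prefix)).
function queryByPartition(items, pk, skPrefix = '') {
  return items
    .filter((item) => item.PK === pk && item.SK.startsWith(skPrefix))
    .sort((a, b) => a.SK.localeCompare(b.SK));
}

const everything = queryByPartition(table, 'USER#U12345');
const ordersOnly = queryByPartition(table, 'USER#U12345', 'ORDER#');
console.log(everything.length, ordersOnly.length);
```

Because the profile and the orders share one partition key, a single lookup returns the user together with their orders, which is the role a join would play in a normalized relational schema.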

Query Capabilities and Flexibility

DynamoDB’s Access Patterns

DynamoDB provides targeted but limited query capabilities:

- Primary Key Access: Direct retrieval using partition key or partition key + sort key

- Secondary Indexes: Global Secondary Indexes (GSIs) and Local Secondary Indexes (LSIs) for alternative access patterns

- Query Operation: Finds items with the same partition key that match sort key conditions

- Scan Operation: Examines every item in a table (expensive and discouraged for large tables)

- No Joins: Multi-table operations must be handled by the application

- Limited Filters: FilterExpression can filter results but doesn’t reduce read capacity used
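The difference between a Query and a filtered Scan is easier to see with a toy model. The sketch below uses a plain array in place of a table, and `simulateQuery` and `simulateScanWithFilter` are hypothetical helpers, not SDK calls. It only illustrates the point made above: a filter trims what is returned, not what is read.

```javascript
// Toy table: 3 customers with 4 orders each (12 items in total).
const orders = [];
for (const customer of ['C1', 'C2', 'C3']) {
  for (let n = 1; n <= 4; n++) {
    orders.push({ CustomerID: customer, OrderID: `${customer}-O${n}`, Total: n * 100 });
  }
}

// Query-like access: only the items in one partition are examined.
function simulateQuery(items, customerId) {
  const examined = items.filter((i) => i.CustomerID === customerId);
  return { examined: examined.length, returned: examined };
}

// Scan-like access with a filter: every item is examined first,
// then the filter drops non-matching items from the response.
function simulateScanWithFilter(items, predicate) {
  const returned = items.filter(predicate);
  return { examined: items.length, returned };
}

const q = simulateQuery(orders, 'C2');
const s = simulateScanWithFilter(orders, (i) => i.CustomerID === 'C2');
console.log(`query examined ${q.examined}, scan examined ${s.examined}`);
```

Both calls return the same four items, but the scan examines the whole table to find them, and the number of items examined is what drives consumed read capacity.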

Example DynamoDB Query:

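    // Find all orders for a specific customer created in the last 30 days.
    // Assumes the AWS SDK for JavaScript v2 and an Orders table keyed on
    // CustomerID (partition key) and OrderDate (sort key, epoch milliseconds).
    const AWS = require('aws-sdk');
    const dynamodb = new AWS.DynamoDB.DocumentClient();

    const params = {
      TableName: 'Orders',
      KeyConditionExpression: 'CustomerID = :custId AND OrderDate > :startDate',
      ExpressionAttributeValues: {
        ':custId': 'C12345',
        ':startDate': Date.now() - (30 * 24 * 60 * 60 * 1000)
      }
    };

    dynamodb.query(params, (err, data) => {
      if (err) console.error(err);
      else console.log(data.Items);
    });

RDBMS SQL Flexibility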

Relational databases offer rich query capabilities through SQL:

- Complex Joins: Combine data from multiple tables

- Aggregations: Functions like COUNT, SUM, AVG, MIN, MAX

- Subqueries: Nested queries for complex operations

- Window Functions: Analytical calculations across rows

- Common Table Expressions (CTEs): Temporary result sets for complex logic

- Full-text Search: Search capabilities for text fields (varies by platform)

Example SQL Query:

    -- Find customers who, in the last 90 days, placed more than 5 orders
    -- with a total value over $1000.
    -- DATE_SUB is MySQL syntax; other engines use different date arithmetic.
    SELECT
      c.CustomerID,
      c.Name,
      COUNT(o.OrderID) AS OrderCount,
      SUM(o.TotalAmount) AS TotalSpent
    FROM
      Customers c
    JOIN
      Orders o ON c.CustomerID = o.CustomerID
    WHERE
      o.OrderDate > DATE_SUB(CURRENT_DATE, INTERVAL 90 DAY)
    GROUP BY
      c.CustomerID, c.Name
    HAVING
      COUNT(o.OrderID) > 5 AND SUM(o.TotalAmount) > 1000
    ORDER BY
      TotalSpent DESC;

Key Query Differences

| Aspect | DynamoDB | RDBMS |

|---|---|---|

| Query Language | Limited API operations with expressions | Full SQL with rich syntax and functions |

| Joins | No native joins | Complex multi-table joins supported |

| Filtering | Limited filtering capabilities | Extensive WHERE clause capabilities |

| Aggregations | No built-in aggregations | Comprehensive aggregation functions |

| Query Flexibility | Access patterns must be defined upfront | Ad-hoc queries possible at any time |

| Query Performance | Predictable, based on key structure | Varies based on indexes, query complexity |
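Since DynamoDB has no joins or built-in aggregations, that work moves into application code. The following sketch shows roughly what the join, GROUP BY, and HAVING logic of a SQL query looks like when done by hand; the sample data and the `topCustomers` helper are hypothetical, and the arrays stand in for results already fetched from two tables.

```javascript
const customers = [
  { CustomerID: 'C1', Name: 'Ada' },
  { CustomerID: 'C2', Name: 'Grace' },
];

// Hypothetical sample data: six orders for C1 and two for C2.
const orders = [
  ...Array.from({ length: 6 }, (_, i) => ({ OrderID: `A${i}`, CustomerID: 'C1', TotalAmount: 250 })),
  ...Array.from({ length: 2 }, (_, i) => ({ OrderID: `B${i}`, CustomerID: 'C2', TotalAmount: 900 })),
];

function topCustomers(customerRows, orderRows, minOrders, minSpent) {
  // GROUP BY CustomerID: accumulate a count and a sum per customer.
  const totals = new Map();
  for (const o of orderRows) {
    const t = totals.get(o.CustomerID) || { OrderCount: 0, TotalSpent: 0 };
    t.OrderCount += 1;
    t.TotalSpent += o.TotalAmount;
    totals.set(o.CustomerID, t);
  }
  // JOIN, HAVING, and ORDER BY, all done in application code.
  return customerRows
    .filter((c) => totals.has(c.CustomerID))
    .map((c) => ({ ...c, ...totals.get(c.CustomerID) }))
    .filter((r) => r.OrderCount > minOrders && r.TotalSpent > minSpent)
    .sort((a, b) => b.TotalSpent - a.TotalSpent);
}

const result = topCustomers(customers, orders, 5, 1000);
console.log(result);
```

This works for small result sets, but every order has to be read before it can be counted, which is why DynamoDB designs usually precompute such totals instead.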

Performance and Scalability

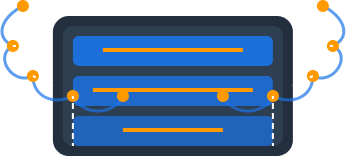

DynamoDB’s Scaling Model

DynamoDB delivers consistent performance through automatic, horizontal scaling:

- Partitioning: Data is automatically distributed across partitions

- Throughput Control: Specify read and write capacity units or use on-demand mode

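- Adaptive Capacity: Shifts throughput toward hot partitions to absorb uneven access patterns, up to the per-partition limits (3,000 read and 1,000 write capacity units per second)

- Unlimited Scale: No practical limit on table size or total throughput, although each item is capped at 400 KB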

- Auto Scaling: Automatically adjusts capacity based on usage patterns

- Consistent Latency: Single-digit millisecond response times regardless of size

Performance metrics:

- Can handle millions of requests per second

- Supports tables of virtually any size (petabytes of data)

- Maintains consistent performance as data grows
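How well this scaling works depends on the partition key. The sketch below is a rough illustration, assuming a fixed number of partitions and using FNV-1a as a stand-in hash; DynamoDB's internal hash function and partition management are not public, so treat `fnv1a` and `distribution` as hypothetical helpers.

```javascript
// FNV-1a 32-bit hash: a simple stand-in, NOT DynamoDB's internal hash function.
function fnv1a(str) {
  let hash = 0x811c9dc5;
  for (let i = 0; i < str.length; i++) {
    hash ^= str.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193) >>> 0;
  }
  return hash;
}

// Count how many writes land on each of `partitions` buckets.
function distribution(keys, partitions) {
  const counts = new Array(partitions).fill(0);
  for (const key of keys) counts[fnv1a(key) % partitions] += 1;
  return counts;
}

const writes = 1000;
// Low-cardinality key: every write uses the same partition key value.
const hot = distribution(Array.from({ length: writes }, () => 'STATUS#ACTIVE'), 4);
// High-cardinality key: each write uses a distinct user ID.
const spread = distribution(Array.from({ length: writes }, (_, i) => `user-${i}`), 4);
console.log('hot:', hot, 'spread:', spread);
```

With a single key value, all 1,000 writes land in one bucket, while distinct keys are divided among several buckets. The same reasoning explains why a high-cardinality partition key is a precondition for the throughput figures above.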

RDBMS Scaling Approaches

Relational databases traditionally scale vertically but have evolved:

- Vertical Scaling: Larger servers with more CPU, memory, and storage

- Read Replicas: Distribute read workloads across multiple instances

- Sharding: Manually partition data across multiple database instances

- Connection Pooling: Manage database connections efficiently

- Query Optimization: Indexes, query tuning, and execution plans

- Database Caching: Cache query results to improve performance

Scaling challenges:

- Joins across shards can be complex and inefficient

- Vertical scaling has physical and cost limitations

- Maintaining consistency across distributed instances is challenging

Performance Comparison

| Characteristic | DynamoDB | RDBMS |

|---|---|---|

| Latency | Predictable, single-digit milliseconds | Variable, depends on query complexity and data size |

| Throughput | Virtually unlimited with appropriate partition keys | Limited by server resources and connection limits |

| Data Size Handling | Scales horizontally without performance degradation | Performance can degrade with very large tables |

| Concurrent Users | Handles high concurrency without degradation | May face connection limits or lock contention |

| Performance Predictability | Highly predictable | Can vary based on query plans and data distribution |

Consistency Models

DynamoDB Consistency Options

DynamoDB offers two consistency models:

- Eventually Consistent Reads (Default):

  - Lower cost (half the RCU consumption)

  - Higher throughput

  - May not reflect the most recent write

  - Consistency typically achieved within a second

- Strongly Consistent Reads:

  - Higher cost (double the RCU consumption)

  - Lower throughput

  - Always returns the most up-to-date data

  - Not available for Global Secondary Indexes
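The cost difference between the two read modes follows a simple rule: reads are metered in 4 KB blocks, a strongly consistent read costs one RCU per block, and an eventually consistent read costs half of that. A minimal sketch of that arithmetic, where `readCapacityUnits` is a hypothetical helper name and the transactional multiplier is included for completeness:

```javascript
// Read capacity units consumed by reading one item:
// reads are metered in 4 KB blocks; eventually consistent reads cost half
// a unit per block, strongly consistent reads one, transactional reads two.
function readCapacityUnits(itemSizeBytes, mode = 'eventual') {
  const blocks = Math.max(1, Math.ceil(itemSizeBytes / 4096));
  const multiplier = { eventual: 0.5, strong: 1, transactional: 2 }[mode];
  if (multiplier === undefined) throw new Error(`unknown mode: ${mode}`);
  return blocks * multiplier;
}

const sixKbStrong = readCapacityUnits(6000, 'strong');
const sixKbEventual = readCapacityUnits(6000, 'eventual');
console.log(sixKbStrong, sixKbEventual);
```

A 6,000-byte item spans two 4 KB blocks, so the strongly consistent read consumes twice what the eventually consistent read does.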

RDBMS Consistency Model

Relational databases typically provide:

- Strong Consistency by Default: Reads on the primary instance see the latest committed data (asynchronous read replicas can lag behind)

- Transaction Isolation Levels: Control consistency vs. performance trade-offs

  - Read Uncommitted

  - Read Committed

  - Repeatable Read

  - Serializable

- Locking Mechanisms: Pessimistic locking prevents conflicts

- MVCC (Multi-Version Concurrency Control): Used by systems such as PostgreSQL so that readers and writers do not block each other

Transactions and ACID Compliance

DynamoDB Transactions

DynamoDB added transaction support in 2018, with some limitations:

- Supports atomic, consistent, isolated, and durable (ACID) transactions

- Can include up to 100 actions per transaction, with a combined size of at most 4 MB

- Works across multiple tables in the same AWS account and Region

- Consumes twice the capacity of the equivalent non-transactional operations

- No partial commits: all changes succeed or fail together

- Cannot span Regions
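Some of these limits can be checked on the client before a request is sent. The sketch below is one possible pre-flight check, not part of any SDK: `validateTransactItems` and the `keySchema` argument are hypothetical, the action limit is hard-coded as an assumption that should be verified against current AWS documentation, and the 4 MB size check is omitted. It also flags two actions that target the same item, which DynamoDB rejects within a single transaction.

```javascript
// Assumed per-transaction action limit; verify against current AWS documentation.
const MAX_ACTIONS = 100;

// keySchema maps table name -> primary key attribute names (supplied by the caller).
function validateTransactItems(transactItems, keySchema) {
  const errors = [];
  if (transactItems.length > MAX_ACTIONS) {
    errors.push(`too many actions: ${transactItems.length} > ${MAX_ACTIONS}`);
  }
  const seen = new Set();
  for (const entry of transactItems) {
    // Each entry holds exactly one of Put, Update, Delete, or ConditionCheck.
    const [type, action] = Object.entries(entry)[0];
    const source = type === 'Put' ? action.Item : action.Key;
    const keyAttrs = keySchema[action.TableName] || [];
    const id = `${action.TableName}|` + keyAttrs.map((k) => `${k}=${source[k]}`).join(',');
    if (seen.has(id)) errors.push(`duplicate target: ${id}`);
    seen.add(id);
  }
  return errors;
}

const sampleErrors = validateTransactItems(
  [
    { Put: { TableName: 'Orders', Item: { OrderID: 'O12345', CustomerID: 'C789' } } },
    { Update: { TableName: 'Customers', Key: { CustomerID: 'C789' } } },
  ],
  { Orders: ['OrderID'], Customers: ['CustomerID'] }
);
console.log(sampleErrors);
```

An empty array means the request passed these two checks; the service still enforces everything else, including condition expressions and the size limit.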

Example DynamoDB Transaction:

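    // Assumes the AWS SDK for JavaScript v2; the DocumentClient accepts plain
    // JavaScript values instead of typed AttributeValue maps.
    const AWS = require('aws-sdk');
    const dynamodb = new AWS.DynamoDB.DocumentClient();

    const params = {
      TransactItems: [
        {
          // Create the order only if it does not already exist
          Put: {
            TableName: 'Orders',
            Item: {
              OrderID: 'O12345',
              CustomerID: 'C789',
              Amount: 99.95,
              Status: 'Pending'
            },
            ConditionExpression: 'attribute_not_exists(OrderID)'
          }
        },
        {
          // Increment the customer's order count
          Update: {
            TableName: 'Customers',
            Key: { CustomerID: 'C789' },
            UpdateExpression: 'SET OrderCount = OrderCount + :inc',
            ExpressionAttributeValues: { ':inc': 1 }
          }
        },
        {
          // Decrement stock, failing the whole transaction if none is left
          Update: {
            TableName: 'Inventory',
            Key: { ProductID: 'P456' },
            UpdateExpression: 'SET Stock = Stock - :dec',
            ConditionExpression: 'Stock >= :dec',
            ExpressionAttributeValues: { ':dec': 1 }
          }
        }
      ]
    };

    dynamodb.transactWrite(params, (err, data) => {
      if (err) console.error(err);
      else console.log('Transaction successful');
    });

RDBMS Transactions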

Relational databases have mature transaction support:

- Full ACID compliance by default

- No practical limits on transaction size

- Complex transaction patterns (save points, nested transactions)

- Customizable isolation levels to balance performance and consistency

- Transactions are a core feature, not an add-on

- Long-running transactions supported (though discouraged)

Example SQL Transaction:

    -- T-SQL (SQL Server) syntax; other engines use different control-flow statements
    BEGIN TRANSACTION;

    -- Create new order
    INSERT INTO Orders (OrderID, CustomerID, Amount, Status)
    VALUES ('O12345', 'C789', 99.95, 'Pending');

    -- Update customer order count
    UPDATE Customers
    SET OrderCount = OrderCount + 1
    WHERE CustomerID = 'C789';

    -- Update inventory
    UPDATE Inventory
    SET Stock = Stock - 1
    WHERE ProductID = 'P456' AND Stock >= 1;

    -- Check if inventory update was successful
    IF @@ROWCOUNT = 0
    BEGIN
      ROLLBACK TRANSACTION;
      RAISERROR('Insufficient stock', 16, 1);
      RETURN;
    END

    COMMIT TRANSACTION;

Pricing and Cost Structure

DynamoDB Pricing Model

DynamoDB’s pricing is based on the following components. The figures are approximate, vary by Region, and have changed over time, so check the current AWS pricing page before budgeting:

- Provisioned Capacity Mode:

  - Read Capacity Units (RCUs): ~$0.00013 per RCU-hour

  - Write Capacity Units (WCUs): ~$0.00065 per WCU-hour

  - Pay for provisioned capacity regardless of usage

  - Auto-scaling can adjust capacity based on demand

- On-Demand Capacity Mode:

  - ~$0.25 per million read requests

  - ~$1.25 per million write requests

  - Pay only for what you use

  - Higher per-request cost but no capacity planning

- Storage: ~$0.25 per GB-month

- Additional Costs:

  - Data transfer out

  - Global Tables (replication)

  - Backups and restores

- Reserved capacity (for provisioned mode) is available as a cost-saving option
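The two capacity modes can be compared with a few lines of arithmetic. The sketch below plugs the approximate rates listed above into two hypothetical helper functions; the rates are illustrative inputs rather than authoritative prices, and storage, data transfer, and free-tier allowances are ignored.

```javascript
// Illustrative rates taken from the list above; real prices vary by Region and over time.
const RATES = {
  rcuHour: 0.00013,
  wcuHour: 0.00065,
  perMillionReads: 0.25,
  perMillionWrites: 1.25,
};
const HOURS_PER_MONTH = 730; // common billing approximation

// Provisioned mode: pay per capacity unit per hour, whether or not it is used.
function provisionedMonthly(rcu, wcu, rates = RATES) {
  return (rcu * rates.rcuHour + wcu * rates.wcuHour) * HOURS_PER_MONTH;
}

// On-demand mode: pay per request actually made.
function onDemandMonthly(reads, writes, rates = RATES) {
  return (reads / 1e6) * rates.perMillionReads + (writes / 1e6) * rates.perMillionWrites;
}

const provisioned = provisionedMonthly(100, 50);
const onDemand = onDemandMonthly(10e6, 2e6);
console.log(provisioned.toFixed(2), onDemand.toFixed(2));
```

With these inputs, 100 RCUs and 50 WCUs provisioned around the clock cost more than 10 million reads and 2 million writes billed on demand, which is the usual pattern for light or spiky traffic. The comparison flips as sustained request volume grows.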

RDBMS Pricing Model

RDBMS pricing typically follows:

- Instance-Based: Pay for the server instance size regardless of utilization

- License Costs: Commercial databases may have license fees

- Storage Costs: Typically priced per GB-month

- I/O Costs: Some cloud providers charge for I/O operations

- Backup Costs: Storage for backups and snapshot features

- High Availability: Additional costs for standby instances

- Scaling Costs: Vertical scaling often requires downtime and larger instances

Cost Comparison Scenarios

- Small Application with Variable Traffic:

  - DynamoDB with on-demand capacity can be more cost-effective

  - Pay only for actual usage; throughput charges drop to zero when idle, although storage is still billed

  - Free tier makes initial use very affordable

- Large, Predictable Workload:

  - RDBMS might be more cost-effective for consistent, high-throughput workloads

  - Reserved instances for RDBMS can provide significant savings

  - DynamoDB reserved capacity also reduces costs

- Read-Heavy Applications:

  - RDBMS with read replicas can be efficient

  - DynamoDB with DAX caching can optimize costs for repeated reads

- Write-Heavy Applications:

  - DynamoDB is often more cost-effective for high-write applications

  - RDBMS write scaling can become expensive

Management and Operational Overhead

DynamoDB’s Management Model

DynamoDB is a fully managed service with minimal operational overhead:

- No Server Management: No instances to provision or maintain

- No Software Maintenance: No patches, upgrades, or migrations

- Automated Scaling: No manual intervention for scaling operations

- Automated Backups: Point-in-time recovery, once enabled, covers the last 35 days

- High Availability: Multi-AZ replication built-in

- Monitoring: CloudWatch integration for performance metrics

The operational requirements are primarily around:

- Capacity planning and cost optimization

- Access pattern design and table structure

- Permission management through IAM

RDBMS Management Requirements

Relational databases typically require more operational attention:

- Server Provisioning: Selecting and configuring instances

- Software Updates: Regular patching and version upgrades

- Backup Management: Setting up, monitoring, and testing backups

- High Availability: Configuring replication and failover

- Performance Tuning: Index optimization, query tuning, configuration

- Schema Management: Migrations, changes, and version control

- Storage Management: Disk space monitoring and expansion

- Security Configuration: Network security, credential management

Even with managed services such as Amazon RDS, many of these tasks still require planning and oversight.

Operational Comparison

| Aspect | DynamoDB | RDBMS |

|---|---|---|

| Setup Time | Minutes | Hours to days |

| Maintenance Windows | None required | Regular downtime for updates |

| Team Skills Required | AWS knowledge, NoSQL patterns | DBA skills, SQL expertise |

| Monitoring Complexity | Simple metrics, few failure modes | Complex monitoring, many parameters |

| Disaster Recovery | Automated, built-in | Manual planning and testing |

| Security Management | IAM-based, simplified | Complex user/role permissions |

When to Choose DynamoDB

DynamoDB is generally the better choice when:

1. Scalability is a Primary Concern

- Applications expecting unpredictable growth

- Services needing to handle sudden traffic spikes

- Systems requiring scale beyond what’s practical with a single database instance

2. Low-Latency Requirements

- Applications requiring consistent single-digit millisecond response times

- Use cases where performance predictability is critical

- Global applications needing low latency across regions (using Global Tables)

3. Serverless Architecture

- Applications built using AWS Lambda and other serverless technologies

- Projects aiming to minimize operational overhead

- Startups wanting to focus on product rather than infrastructure

4. Simple, Known Access Patterns

- Applications with straightforward data access needs

- Systems where access patterns are well-understood in advance

- Use cases focused on high-throughput key-value lookups

5. Specific Use Cases

- Session management and user profiles

- Shopping carts and product catalogs

- Gaming leaderboards and player states

- IoT data ingestion and time-series data

- Real-time analytics with known dimensions

Real-World DynamoDB Success: Lyft

Lyft uses DynamoDB to handle ride data and customer interactions, processing millions of transactions per second during peak times. They chose DynamoDB for its ability to:

- Scale seamlessly during high-demand periods

- Maintain consistent performance regardless of database size

- Minimize operational overhead through AWS’s managed service

- Support their microservices architecture with a flexible schema

When to Choose RDBMS

Relational databases remain the better choice when:

1. Complex Query Requirements

- Applications requiring complex joins across multiple tables

- Systems needing sophisticated reporting and analytics

- Use cases involving aggregations and data summarization

- Applications where ad-hoc queries are a core requirement

2. Strong Transactional Requirements

- Financial systems requiring complex multi-table transactions

- Applications with sophisticated integrity constraints

- Use cases requiring isolation levels and transaction controls

- Systems where data relationships must be strictly enforced

3. Legacy System Integration

- Integration with existing relational database systems

- Applications built with ORMs or SQL-dependent frameworks

- Teams with strong SQL expertise but limited NoSQL experience

- Systems leveraging database-specific features (stored procedures, triggers)

4. Data Structure Focus

- Applications where data structure is more critical than access patterns

- Systems where relationships between entities are complex and fluid

- Use cases requiring normalized data to minimize redundancy

- Projects where schema enforcement is a positive feature

5. Specific Use Cases

- Complex ERP and accounting systems

- Traditional CRM with complex relationship modeling

- Business intelligence and reporting systems

- Applications requiring full-text search capabilities

- Systems with complex permissions and role-based access

Real-World RDBMS Success: Slack

Slack relies heavily on relational databases (MySQL, sharded with Vitess) for its messaging platform because it needs:

- Complex queries across user, channel, and message data

- Strong transactional guarantees for message delivery

- Rich text search capabilities

- Advanced data integrity features

- Support for complex access control logic

Hybrid Approaches

Many modern applications benefit from a polyglot persistence approach, using both DynamoDB and RDBMS for different aspects:

1. DynamoDB for High-Throughput Data

- Session data and user profiles

- Activity logs and event tracking

- Real-time features requiring low latency

- High-volume transactional data

2. RDBMS for Complex Relationships

- Core business data with complex relationships

- Reporting and analytics datasets

- Data requiring complex integrity constraints

- Legacy system integration points

3. Data Synchronization Patterns

- Use DynamoDB Streams to replicate changes to RDBMS

- Implement CQRS pattern (Command Query Responsibility Segregation)

- Create materialized views in DynamoDB from RDBMS data

- Use AWS Database Migration Service (DMS) for ongoing replication
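As an illustration of the Streams-based pattern above, the following sketch turns DynamoDB Streams records into parameterized SQL statements. The record shape (`eventName`, `dynamodb.Keys`, `dynamodb.NewImage` in typed AttributeValue form) follows the Streams event format, and `NewImage` is only present when the stream view type includes new images. The `users` table, its columns, the PostgreSQL-style `ON CONFLICT` upsert, and the minimal `unmarshall` helper are assumptions made for this example.

```javascript
// Convert a typed AttributeValue map ({ S: ... }, { N: ... }, { BOOL: ... })
// into plain values. Only a few types are handled in this sketch.
function unmarshall(image) {
  const out = {};
  for (const [name, attr] of Object.entries(image)) {
    if ('S' in attr) out[name] = attr.S;
    else if ('N' in attr) out[name] = Number(attr.N);
    else if ('BOOL' in attr) out[name] = attr.BOOL;
    else out[name] = JSON.stringify(attr); // fallback: keep other types as JSON text
  }
  return out;
}

// Map one stream record to a parameterized SQL statement.
// Table and column names (users, user_id, name, email) are hypothetical.
function recordToSql(record) {
  const keys = unmarshall(record.dynamodb.Keys);
  if (record.eventName === 'REMOVE') {
    return { sql: 'DELETE FROM users WHERE user_id = $1', params: [keys.UserID] };
  }
  // INSERT and MODIFY both become an upsert (PostgreSQL ON CONFLICT syntax).
  const row = unmarshall(record.dynamodb.NewImage);
  return {
    sql: 'INSERT INTO users (user_id, name, email) VALUES ($1, $2, $3) ' +
         'ON CONFLICT (user_id) DO UPDATE SET name = EXCLUDED.name, email = EXCLUDED.email',
    params: [row.UserID, row.Name, row.Email],
  };
}

// Shape of a Lambda handler for a Streams event. Executing the statements
// against a real database is left out of this sketch.
function handler(event) {
  return event.Records.map(recordToSql);
}
```

Treating inserts and modifications as the same upsert keeps the handler idempotent, which matters because stream records can be delivered more than once on retry.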

Example Hybrid Architecture

    ┌──────────────┐    Write    ┌──────────────┐        ┌──────────────┐
    │ Application  │────────────>│   DynamoDB   │        │ S3 Data Lake │
    │   Servers    │<───────────>│  (Real-time  │        │ (Historical  │
    └──────────────┘    Read     │    Data)     │        │    Data)     │
           │                     └──────────────┘        └──────────────┘
           │                            │                       ↑
           │                            │ Streams               │
           │                            ▼                       │
           │                     ┌──────────────┐               │
           │ Complex             │    Lambda    │               │
           │ Queries             │  Functions   │───────────────┘
           │                     └──────────────┘    Export
           │                            │
           │                            ▼
           │                     ┌──────────────┐
           └────────────────────>│    RDBMS     │
                    Read         │ (Reporting & │
                                 │  Analytics)  │
                                 └──────────────┘

Migration Considerations

When migrating between RDBMS and DynamoDB, consider these key factors:

Migrating from RDBMS to DynamoDB

- Data Modeling Transformation:

  - Denormalize your relational model

  - Identify access patterns before designing tables

  - Consider single-table design for related entities

  - Plan for duplicating data to support multiple access patterns

- Query Rewriting:

  - Replace complex SQL queries with multiple simpler queries

  - Implement application-side joins where necessary

  - Redesign aggregation operations

  - Precompute and store values that would typically be calculated on-the-fly

- Migration Tools:

  - AWS Database Migration Service (DMS)

  - AWS Data Pipeline (a legacy service that AWS no longer offers to new customers)

  - Custom ETL processes using AWS Glue

- Common Challenges:

  - Handling schema evolution during migration

  - Maintaining data consistency during transition

  - Retraining team on NoSQL data modeling

  - Modifying application code to work with DynamoDB API
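Of the query-rewriting steps above, precomputing values is often the biggest change in mindset for teams coming from SQL. The sketch below keeps a running per-customer summary up to date on every write, so that reading it becomes a single key lookup. The `Map` is an in-memory stand-in for a summary table, and `recordOrder` and `getSummary` are hypothetical helpers; in DynamoDB the update step would typically be an UpdateItem call with an `ADD` or `SET` expression.

```javascript
// In-memory stand-in for a summary table keyed by CustomerID (not a DynamoDB client).
const customerSummaries = new Map();

// On every new order, update the stored aggregate instead of recomputing it at read time.
function recordOrder(order) {
  const summary = customerSummaries.get(order.CustomerID) || { OrderCount: 0, TotalSpent: 0 };
  summary.OrderCount += 1;
  summary.TotalSpent += order.TotalAmount;
  customerSummaries.set(order.CustomerID, summary);
  return summary;
}

// Reading the aggregate is now a single key lookup rather than a COUNT/SUM query.
function getSummary(customerId) {
  return customerSummaries.get(customerId) || { OrderCount: 0, TotalSpent: 0 };
}

recordOrder({ CustomerID: 'C789', TotalAmount: 100 });
recordOrder({ CustomerID: 'C789', TotalAmount: 50 });
console.log(getSummary('C789'));
```

The trade-off is extra work on every write and the need to keep the summary consistent with the underlying orders, for example by updating both in one transaction.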

Migrating from DynamoDB to RDBMS

- Schema Design:

  - Normalize the denormalized DynamoDB structure

  - Define appropriate tables and relationships

  - Implement constraints and foreign keys

  - Design appropriate indexes

- Data Transformation:

  - Extract nested attributes into separate tables

  - Convert sets and lists to relational structures

  - Transform single-table designs into multiple related tables

  - Handle schema variations across items

- Migration Tools:

  - AWS Glue for ETL processing

  - Custom scripts using DynamoDB Scan operations

  - Temporary staging tables for complex transformations

- Common Challenges:

  - Performance during the migration process

  - Handling DynamoDB’s flexible attribute structure

  - Implementing similar performance characteristics

  - Adapting application code to SQL operations
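The data transformation steps above can be sketched for a single item. This example takes a user item with a nested `Preferences` map and a `DeviceIDs` list and splits it into rows for `Users`, `UserPreferences`, and `UserDevices` tables. The `normalizeUserItem` helper is hypothetical, and treating `LastLogin` as epoch seconds is an assumption about the data.

```javascript
// Split one denormalized item into rows for three relational tables.
function normalizeUserItem(item) {
  const users = [{
    UserID: item.UserID,
    Name: item.Name,
    Email: item.Email,
    // Assumes LastLogin is stored as epoch seconds.
    LastLogin: new Date(item.LastLogin * 1000).toISOString(),
  }];
  // Nested map -> one row per setting.
  const userPreferences = Object.entries(item.Preferences || {}).map(([setting, value]) => ({
    UserID: item.UserID, Setting: setting, Value: String(value),
  }));
  // List -> one row per element.
  const userDevices = (item.DeviceIDs || []).map((deviceId) => ({
    UserID: item.UserID, DeviceID: deviceId,
  }));
  return { users, userPreferences, userDevices };
}

const rows = normalizeUserItem({
  UserID: 'U12345',
  Name: 'John Smith',
  Email: 'john@example.com',
  Preferences: { Theme: 'Dark', Notifications: true },
  DeviceIDs: ['D001', 'D087', 'D456'],
  LastLogin: 1657843230,
});
console.log(rows.userPreferences.length, rows.userDevices.length);
```

Items that lack some attributes produce fewer child rows rather than errors, which is one simple way to handle schema variations across items. A real migration would also need rules for type conflicts and for attributes that do not fit any target column.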

Conclusion

The choice between DynamoDB and traditional RDBMS ultimately depends on your application’s specific requirements, your team’s expertise, and your business priorities.

DynamoDB Excels When:

- Scalability and performance predictability are paramount

- Operational simplicity is a key consideration

- Your application has well-defined access patterns

- You’re building serverless or highly distributed systems

- Low-latency, high-throughput operations are critical

RDBMS Remains Superior When:

- Data relationships and integrity are complex

- Ad-hoc querying and analytics are required

- Your team has deep SQL expertise

- Transaction complexity goes beyond basic operations

- Data normalization and structure are more important than access patterns

Many modern architectures leverage both database types, using each for its strengths while mitigating its weaknesses. The rise of microservices architectures has made this polyglot persistence approach increasingly common and practical.

Regardless of your choice, remember that data modeling decisions have long-term implications for your application’s performance, maintainability, and scalability. Take time to thoroughly analyze your requirements and test your assumptions before committing to either approach.