DynamoDB vs. S3: When to Use Each AWS Storage Service in 2025

When building applications on AWS, one of the most fundamental decisions is where and how to store your data. Amazon DynamoDB and Amazon S3 are two of the most popular storage services in the AWS ecosystem, but they serve very different purposes. Understanding when to use each service—or how to use them together—can significantly impact your application’s performance, cost-efficiency, and scalability.

In this comprehensive comparison, we’ll explore the key differences between DynamoDB and S3, analyze their optimal use cases, and provide practical guidance on choosing the right service for your specific needs.

Understanding DynamoDB and S3: Fundamentals

Before diving into comparisons, let’s clarify what each service is designed to do:

Amazon DynamoDB

DynamoDB is a fully managed NoSQL database service built for low-latency data access at any scale. As a key-value and document database, it’s optimized for quick, consistent performance with single-digit millisecond response times.

Key characteristics:

- Type: NoSQL database (key-value and document store)

- Primary use: Storing structured data that needs fast, frequent access

- Access method: API calls via AWS SDK

- Consistency: Choice of eventually consistent or strongly consistent reads

- Latency: Single-digit millisecond response times

- Cost model: Pay for read/write capacity and storage

Amazon S3 (Simple Storage Service)

S3 is an object storage service built for storing and retrieving any amount of data from anywhere on the web. It’s designed for durability, availability, and virtually unlimited scalability.

Key characteristics:

- Type: Object storage

- Primary use: Storing files, images, backups, and large unstructured data

- Access method: HTTP/HTTPS requests, API calls, direct URL access

- Consistency: Strong read-after-write consistency for all operations

- Latency: Typically 100-200ms for first byte access

- Cost model: Pay for storage, requests, and data transfer

Data Model Comparison: Structured vs Unstructured

The fundamental difference between these services lies in how they model and store data:

DynamoDB Data Model

- Structure: Data is stored as items (similar to rows) containing attributes (similar to columns)

- Size limits: Maximum item size of 400KB

- Schema: Flexible schema with required primary key

- Organization: Tables with items accessible via primary key and optional secondary indexes

- Query capability: Access patterns defined by keys and indexes

- Relationships: Single-table design can model relationships, but no native JOIN support

S3 Data Model

- Structure: Data is stored as objects (files) within buckets (similar to top-level folders)

- Size limits: Individual objects from 0 bytes to 5TB

- Schema: No schema; objects are stored as-is with optional metadata

- Organization: Flat structure with prefix-based organization (folder-like)

- Query capability: Listing is limited to prefix filtering; no native query language

- Relationships: No native concept of relationships between objects
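
To make the flat, prefix-based layout concrete, here is a minimal sketch in plain Python. The key scheme (`uploads/<user_id>/<yyyy>/<mm>/<filename>`) and both helper names are assumptions for illustration. The filter only mimics what a prefix-scoped listing would return; it does not call S3.

```python
from datetime import date
from typing import List


def build_object_key(user_id: str, uploaded_on: date, filename: str) -> str:
    """Build a folder-like object key. The layout is a hypothetical convention."""
    return f"uploads/{user_id}/{uploaded_on:%Y}/{uploaded_on:%m}/{filename}"


def filter_by_prefix(keys: List[str], prefix: str) -> List[str]:
    """Mimic a prefix-scoped listing: keys are plain strings, so a 'folder'
    is nothing more than a shared leading substring."""
    return sorted(k for k in keys if k.startswith(prefix))


keys = [
    build_object_key("u-42", date(2025, 1, 15), "cat.png"),
    build_object_key("u-42", date(2025, 2, 3), "dog.png"),
    build_object_key("u-7", date(2025, 1, 20), "bird.png"),
]

# Everything user u-42 uploaded in January 2025.
january_u42 = filter_by_prefix(keys, "uploads/u-42/2025/01/")
```

With boto3, the same prefix string would typically be passed as the `Prefix` argument to `list_objects_v2`. Because lookups work only from the front of the key, the attribute you filter on most often should come first in the key layout.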

Performance Characteristics

Performance is where these services differ dramatically, as they’re optimized for different access patterns:

DynamoDB Performance

- Read/Write latency: Consistent single-digit millisecond response times

- Throughput: Can be provisioned or on-demand, scaling to millions of requests per second

- Concurrency: High concurrent access with no degradation

- Caching: DAX (DynamoDB Accelerator) for microsecond response times on cached reads

- Scaling: Automatic partitioning to handle increased load

- Optimization: Performance tied to key design and access patterns

S3 Performance

- Read/Write latency: First-byte latency typically 100-200ms

- Throughput: Scales with prefix diversity, supporting at least 3,500 PUT/COPY/POST/DELETE and 5,500 GET/HEAD requests per second per prefix

- Concurrency: High concurrent access, with strong read-after-write consistency

- Caching: Can be used with CloudFront for improved read performance

- Scaling: Automatic and virtually unlimited

- Optimization: Performance tied to request patterns and object size

When to Use DynamoDB vs S3: Use Case Analysis

Determining which service to use depends primarily on your data characteristics and access patterns:

Ideal Use Cases for DynamoDB

- User profiles and preferences

  - Quick lookup by user ID

  - Frequent updates to small portions of data

  - Need for consistent low-latency responses

- Product catalogs

  - Fast lookups by product ID, category, or attributes

  - Support for complex filtering via secondary indexes

  - Frequent updates to inventory, pricing, descriptions

- Session and state management

  - Rapid storage and retrieval of session data

  - TTL support for automatic expiration

  - Low-latency requirements for user experience

- Real-time applications

  - Gaming leaderboards and scores

  - IoT device state and telemetry

  - Real-time analytics and metrics

- Metadata storage

  - Quick lookup of metadata about larger objects

  - Relationships between entities

  - Frequently accessed reference data
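
The session-management case relies on TTL, which expects an attribute holding a Unix epoch timestamp in seconds. The sketch below builds such an item. The attribute names (`session_id`, `expires_at`) and the 30-minute default are assumptions, and TTL would still need to be enabled on the table for the chosen attribute.

```python
import time
import uuid
from typing import Optional


def new_session_item(user_id: str, ttl_seconds: int = 1800,
                     now: Optional[float] = None) -> dict:
    """Build a session item whose `expires_at` attribute is an epoch
    timestamp in seconds, the format DynamoDB TTL works with."""
    issued_at = int(now if now is not None else time.time())
    return {
        "session_id": str(uuid.uuid4()),        # hypothetical partition key
        "user_id": user_id,
        "issued_at": issued_at,
        "expires_at": issued_at + ttl_seconds,  # hypothetical TTL attribute
    }


def is_expired(item: dict, now: Optional[float] = None) -> bool:
    """TTL deletion is not immediate, so readers should still check expiry."""
    current = int(now if now is not None else time.time())
    return item["expires_at"] <= current


session = new_session_item("u-1", ttl_seconds=60, now=1_000)
```

The explicit `is_expired` check matters because expired items can remain readable for some time before the background deletion removes them.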

Ideal Use Cases for S3

- Media storage

  - Images, videos, audio files

  - Content distribution

  - User-generated content uploads

- Backup and archival

  - Database backups

  - Log files

  - Compliance archives with S3 Glacier

- Data lakes

  - Big data analytics repositories

  - Integration with Athena, Redshift Spectrum, etc.

  - Centralized storage for diverse data types

- Static website hosting

  - HTML, CSS, JavaScript files

  - Single-page applications

  - Website assets

- Large file storage

  - Scientific datasets

  - Machine learning models

  - Application binaries and packages

Cost Comparison: Economics of Storage

Cost structures differ substantially between these services, reflecting their different purposes:

DynamoDB Cost Factors

- Read/Write capacity: Pay for provisioned or consumed read/write units

- Storage: Approximately $0.25 per GB-month

- Data transfer: Free inbound, outbound charged at standard AWS rates

- Backups: Additional cost for on-demand backups and PITR

- Additional features: Global Tables, DAX, Streams have additional costs

Cost example: A 10GB table with 10 RCUs and 10 WCUs provisioned would cost approximately:

- Storage: 10GB × $0.25 = $2.50/month

- Read capacity: 10 RCUs × $0.00013 per RCU-hour × 730 hours = $0.95/month

- Write capacity: 10 WCUs × $0.00065 per WCU-hour × 730 hours = $4.75/month

- Total: $8.20/month

S3 Cost Factors

- Storage: Tiered pricing based on storage class and volume (S3 Standard ~$0.023 per GB-month)

- Requests: GET, PUT, COPY, POST, LIST requests charged per 1,000 requests

- Data transfer: Free inbound, outbound charged at standard AWS rates

- Management: Inventory, analytics, and object tagging features have additional costs

- Storage classes: Different pricing for Standard, Intelligent-Tiering, Standard-IA, One Zone-IA, Glacier

Cost example: 10GB in S3 Standard with 100,000 GET requests and 10,000 PUT requests per month:

- Storage: 10GB × $0.023 = $0.23/month

- GET requests: 100,000 ÷ 1,000 × $0.0004 = $0.04/month

- PUT requests: 10,000 ÷ 1,000 × $0.005 = $0.05/month

- Total: $0.32/month

This simple comparison illustrates why S3 is substantially more cost-effective for data that doesn’t require the performance characteristics of DynamoDB.
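
The arithmetic in both cost examples can be reproduced with a short script. This is a sketch that hard-codes the unit prices quoted above (actual prices vary by region and change over time) and rounds each line item to the cent before summing, as the examples do. The function names are illustrative.

```python
from decimal import Decimal, ROUND_HALF_UP

CENT = Decimal("0.01")
HOURS_PER_MONTH = Decimal("730")


def cents(amount: Decimal) -> Decimal:
    """Round a dollar amount to the nearest cent (half up)."""
    return amount.quantize(CENT, rounding=ROUND_HALF_UP)


def dynamodb_provisioned_cost(gb: int, rcus: int, wcus: int) -> dict:
    """Monthly cost of a provisioned table at the prices quoted in the text."""
    items = {
        "storage": cents(Decimal(gb) * Decimal("0.25")),
        "reads": cents(Decimal(rcus) * Decimal("0.00013") * HOURS_PER_MONTH),
        "writes": cents(Decimal(wcus) * Decimal("0.00065") * HOURS_PER_MONTH),
    }
    items["total"] = sum(items.values(), Decimal("0"))
    return items


def s3_standard_cost(gb: int, get_requests: int, put_requests: int) -> dict:
    """Monthly cost of S3 Standard at the prices quoted in the text."""
    items = {
        "storage": cents(Decimal(gb) * Decimal("0.023")),
        "gets": cents(Decimal(get_requests) / 1000 * Decimal("0.0004")),
        "puts": cents(Decimal(put_requests) / 1000 * Decimal("0.005")),
    }
    items["total"] = sum(items.values(), Decimal("0"))
    return items


dynamodb = dynamodb_provisioned_cost(gb=10, rcus=10, wcus=10)
s3 = s3_standard_cost(gb=10, get_requests=100_000, put_requests=10_000)
print(f"DynamoDB: ${dynamodb['total']}/month, S3: ${s3['total']}/month")
```

Using `Decimal` avoids binary floating-point surprises when rounding values such as 4.745 to the cent. Data transfer, backups, and optional features are left out, so treat the result as a lower bound.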

Access Pattern Considerations

Access patterns are perhaps the most critical factor in choosing between DynamoDB and S3:

When DynamoDB Access Patterns Make Sense

- Frequent, small reads/writes: Applications requiring many small operations

- Key-based access: When data is primarily accessed by a known key

- Low-latency requirements: User-facing applications where performance is critical

- Transactional needs: When you need ACID transactions across multiple items

- Conditional updates: When updates depend on the current state of the data
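
As an illustration of a conditional update, the sketch below builds the arguments for an inventory decrement that succeeds only when enough stock remains. The table layout (`product_id` key, `stock` attribute) is hypothetical. `RecordingTable` is a stand-in so the example runs without AWS; a boto3 `Table` resource accepts the same keyword arguments in `update_item`.

```python
def build_stock_decrement(product_id: str, quantity: int) -> dict:
    """Keyword arguments for an update that only applies when `stock`
    is at least `quantity`. Key and attribute names are hypothetical."""
    if quantity <= 0:
        raise ValueError("quantity must be positive")
    return {
        "Key": {"product_id": product_id},
        "UpdateExpression": "SET stock = stock - :qty",
        "ConditionExpression": "stock >= :qty",
        "ExpressionAttributeValues": {":qty": quantity},
    }


class RecordingTable:
    """Stand-in for a table object; records calls instead of contacting AWS."""

    def __init__(self):
        self.calls = []

    def update_item(self, **kwargs):
        self.calls.append(kwargs)
        return {}


def reserve_stock(table, product_id: str, quantity: int) -> None:
    """Issue the conditional update against any object exposing update_item."""
    table.update_item(**build_stock_decrement(product_id, quantity))


table = RecordingTable()
reserve_stock(table, "sku-123", 2)
```

Against a real table, a failed condition surfaces as a `ConditionalCheckFailedException` error, which the caller would catch and treat as "out of stock" rather than retrying blindly.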

When S3 Access Patterns Make Sense

- Whole-object reads/writes: When you typically read or write entire objects

- Infrequent access: Data that isn’t accessed frequently but must be available when needed

- Sequential access: Large files that are read sequentially (logs, video)

- Public accessibility: Content that needs direct URL access or public distribution

- Write-once, read-many: Data that rarely changes but is read frequently

Security Comparison

Both services offer robust security capabilities, with some differences in implementation:

DynamoDB Security Features

- Access control: Fine-grained control via IAM policies and condition expressions

- Encryption: Automatic encryption at rest with AWS managed keys or CMKs

- VPC endpoints: Private access within a VPC without traversing the internet

- Monitoring: CloudTrail integration for API call logging

- Authentication: IAM roles and temporary credentials
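
Fine-grained access control can be sketched as an IAM policy that limits each caller to items whose partition key matches their own identity. The example assumes users authenticate through Amazon Cognito identity pools and that the table's partition key stores the Cognito identity ID. The ARN, account ID, and table name are placeholders.

```python
import json


def per_user_table_policy(table_arn: str) -> dict:
    """IAM policy document restricting item access to rows whose partition
    key equals the caller's Cognito identity ID (an assumed key design)."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": [
                    "dynamodb:GetItem",
                    "dynamodb:Query",
                    "dynamodb:PutItem",
                    "dynamodb:UpdateItem",
                ],
                "Resource": table_arn,
                "Condition": {
                    "ForAllValues:StringEquals": {
                        "dynamodb:LeadingKeys": [
                            "${cognito-identity.amazonaws.com:sub}"
                        ]
                    }
                },
            }
        ],
    }


# Hypothetical ARN: the account ID and table name are placeholders.
policy = per_user_table_policy(
    "arn:aws:dynamodb:us-east-1:123456789012:table/UserProfiles"
)
policy_json = json.dumps(policy, indent=2)
```

The `dynamodb:LeadingKeys` condition key is what ties access to the partition key value. `Scan` is deliberately left out of the allowed actions, since a scan is not scoped to a single partition key.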

S3 Security Features

- Access control: Bucket policies, ACLs, and IAM policies

- Encryption: Server-side and client-side encryption options

- VPC endpoints: Gateway endpoints for private access

- Monitoring: CloudTrail, access logs, and S3 event notifications

- Authentication: IAM, presigned URLs, and direct HTTPS endpoints

- Public access blocks: Prevent unintended public exposure

Best of Both Worlds: Hybrid Approaches

In many real-world scenarios, the optimal solution involves using both services together:

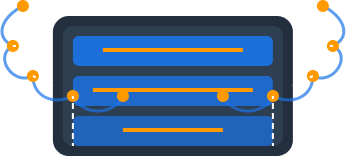

Strategy 1: DynamoDB for Metadata, S3 for Content

A common pattern is storing large objects in S3 while keeping their metadata in DynamoDB:

User uploads a video:

1. Video file → stored in S3

2. Video metadata (title, description, creation date, user ID, S3 URL) → stored in DynamoDB

3. Application queries DynamoDB to quickly retrieve metadata

4. When needed, application accesses S3 using the stored URL/key

Benefits:

- Fast queries for finding content based on various attributes

- Cost-effective storage for large files

- Ability to update metadata without touching the content

Example: A media-sharing platform might store user-generated videos in S3 for cost-effective storage while keeping metadata in DynamoDB for fast querying by user, tags, categories, and other attributes.
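
A minimal sketch of this pattern follows. The bucket name, key layout, and attribute names are assumptions. `FakeS3` and `FakeTable` are in-memory stand-ins so the example runs without AWS; they expose the same `put_object`, `put_item`, and `get_item` call shapes as a boto3 S3 client and DynamoDB `Table` resource.

```python
from datetime import datetime, timezone


class FakeS3:
    """In-memory stand-in for an S3 client."""

    def __init__(self):
        self.objects = {}

    def put_object(self, Bucket, Key, Body):
        self.objects[(Bucket, Key)] = Body
        return {}


class FakeTable:
    """In-memory stand-in for a DynamoDB table keyed on `video_id`."""

    def __init__(self):
        self.items = {}

    def put_item(self, Item):
        self.items[Item["video_id"]] = Item
        return {}

    def get_item(self, Key):
        item = self.items.get(Key["video_id"])
        return {"Item": item} if item else {}


def store_video(s3, table, bucket, video_id, user_id, title, data: bytes) -> dict:
    """Upload the content first, then write the metadata that points at it,
    so a metadata item never references an object that does not exist."""
    key = f"videos/{user_id}/{video_id}.mp4"  # hypothetical key layout
    s3.put_object(Bucket=bucket, Key=key, Body=data)
    item = {
        "video_id": video_id,
        "user_id": user_id,
        "title": title,
        "s3_bucket": bucket,
        "s3_key": key,
        "size_bytes": len(data),
        "created_at": datetime.now(timezone.utc).isoformat(),
    }
    table.put_item(Item=item)
    return item


s3, table = FakeS3(), FakeTable()
saved = store_video(s3, table, "media-bucket", "v1", "u-42", "Demo", b"\x00" * 16)
found = table.get_item(Key={"video_id": "v1"})["Item"]
```

Storing the bucket and key rather than a full URL keeps the metadata valid if the way objects are served changes later, for example when a CDN is introduced.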

Strategy 2: S3 as DynamoDB Backup Destination

Using S3 as a backup repository for DynamoDB data:

Scheduled backup workflow:

1. Export DynamoDB table to S3 using DynamoDB's built-in export to S3 (which requires point-in-time recovery), AWS Data Pipeline, or a custom script

2. Apply lifecycle policies on S3 to manage backup retention

3. Import from S3 to restore DynamoDB data when needed

Benefits:

- Cost-effective long-term storage for backups

- Ability to process backup data with other AWS analytics services

- Cross-region replication for disaster recovery

Example: An e-commerce application might maintain active orders in DynamoDB for fast processing while archiving completed orders to S3 for cost-effective long-term storage and future analytics.
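
One way to sketch steps 1 and 2 is to build the request payloads up front. This assumes DynamoDB's native point-in-time export, which requires PITR to be enabled on the table. The ARN, bucket, prefix, and retention periods are placeholders. The dictionaries follow the argument shapes of boto3's `export_table_to_point_in_time` and `put_bucket_lifecycle_configuration`, and nothing here contacts AWS.

```python
def build_export_request(table_arn: str, bucket: str, prefix: str) -> dict:
    """Arguments for a native DynamoDB export to S3."""
    return {
        "TableArn": table_arn,
        "S3Bucket": bucket,
        "S3Prefix": prefix,
        "ExportFormat": "DYNAMODB_JSON",
    }


def build_retention_rules(prefix: str, archive_after_days: int,
                          delete_after_days: int) -> dict:
    """Lifecycle configuration: move backups to Glacier, then expire them."""
    if delete_after_days <= archive_after_days:
        raise ValueError("backups must be archived before they are deleted")
    return {
        "Rules": [
            {
                "ID": "backup-retention",  # hypothetical rule name
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                "Transitions": [
                    {"Days": archive_after_days, "StorageClass": "GLACIER"}
                ],
                "Expiration": {"Days": delete_after_days},
            }
        ]
    }


# Placeholder identifiers for illustration only.
export_request = build_export_request(
    "arn:aws:dynamodb:us-east-1:123456789012:table/Orders",
    "orders-backups",
    "exports/orders/",
)
lifecycle = build_retention_rules("exports/orders/", 30, 365)
```

With real clients, these would be passed as `dynamodb_client.export_table_to_point_in_time(**export_request)` and `s3_client.put_bucket_lifecycle_configuration(Bucket="orders-backups", LifecycleConfiguration=lifecycle)`.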

Strategy 3: S3 Events Triggering DynamoDB Updates

Using S3 event notifications to update DynamoDB when objects change:

When a new document is uploaded to S3:

1. S3 event notification triggers Lambda function

2. Lambda extracts metadata from the document

3. Lambda updates DynamoDB with the extracted metadata

4. Application can query DynamoDB to find documents matching criteria

Benefits:

- Automatic synchronization between object storage and metadata

- Enables sophisticated searching capabilities for objects in S3

- Maintains separation of concerns between storage and indexing

Example: A document management system might store documents in S3 while automatically extracting and indexing metadata in DynamoDB for fast searching and filtering.
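
The Lambda side of this flow might look like the sketch below. It reads only fields that S3 event notifications carry (bucket name, object key, size, event time); real metadata extraction from the document body is out of scope. The item attribute names and the `make_handler` factory are assumptions, and `FakeTable` stands in for a DynamoDB table so the example runs locally.

```python
from urllib.parse import unquote_plus


def metadata_from_event(event: dict) -> list:
    """Turn S3 notification records into metadata items.
    Object keys arrive URL-encoded, so they are decoded first."""
    items = []
    for record in event.get("Records", []):
        s3_info = record["s3"]
        items.append({
            "document_key": unquote_plus(s3_info["object"]["key"]),
            "bucket": s3_info["bucket"]["name"],
            "size_bytes": s3_info["object"].get("size", 0),
            "uploaded_at": record.get("eventTime", ""),
        })
    return items


def make_handler(table):
    """Build a Lambda-style handler bound to a table object."""
    def handler(event, context):
        items = metadata_from_event(event)
        for item in items:
            table.put_item(Item=item)
        return {"indexed": len(items)}
    return handler


class FakeTable:
    """In-memory stand-in for a DynamoDB table."""

    def __init__(self):
        self.items = []

    def put_item(self, Item):
        self.items.append(Item)
        return {}


sample_event = {
    "Records": [{
        "eventTime": "2025-01-15T10:00:00.000Z",
        "s3": {
            "bucket": {"name": "docs-bucket"},
            "object": {"key": "reports/Q1+summary.pdf", "size": 2048},
        },
    }]
}
table = FakeTable()
result = make_handler(table)(sample_event, None)
```

Injecting the table through a factory keeps the handler testable. In a deployed function the table would usually be created once at module load and passed in the same way.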

Decision Framework: Choosing the Right Service

To systematically decide which service is right for your data, consider these questions:

- What is the nature of your data?

  - Small, structured records → DynamoDB

  - Large files or binary data → S3

- What are your access patterns?

  - Key-based lookups, high read/write frequency → DynamoDB

  - Whole-object access, HTTP-based delivery → S3

- What are your latency requirements?

  - Consistent < 10ms response time → DynamoDB

  - 100-200ms acceptable → S3

- What is your budget sensitivity?

  - Performance priority over cost → Consider DynamoDB

  - Cost priority over performance → Consider S3

- What is your data size?

  - Individual items < 400KB → DynamoDB can work

  - Items > 400KB or large binary files → S3 required

- What query capabilities do you need?

  - Complex filtering, secondary access patterns → DynamoDB

  - Simple key-based retrieval for entire objects → S3

- Do you need transactional capabilities?

  - ACID transactions required → DynamoDB

  - Simple PUT/GET semantics sufficient → S3
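
The questions above can be condensed into a rough rule of thumb. The sketch below is one possible encoding, not a definitive algorithm: the `Workload` fields, the order of the checks, and the returned labels are all assumptions, and the 400KB limit is treated as 400 × 1024 bytes.

```python
from dataclasses import dataclass

DYNAMODB_MAX_ITEM_BYTES = 400 * 1024  # item size limit from the text


@dataclass
class Workload:
    item_size_bytes: int
    needs_single_digit_ms: bool = False
    needs_transactions: bool = False
    needs_secondary_queries: bool = False


def recommend(workload: Workload) -> str:
    """Suggest a storage service from size and access requirements."""
    needs_database = (
        workload.needs_single_digit_ms
        or workload.needs_transactions
        or workload.needs_secondary_queries
    )
    if workload.item_size_bytes > DYNAMODB_MAX_ITEM_BYTES:
        # Too large for a single item: content must live in S3, with
        # DynamoDB holding metadata if database features are needed.
        return "S3 + DynamoDB metadata" if needs_database else "S3"
    if needs_database:
        return "DynamoDB"
    # Small data with no latency or query demands: default to lower cost.
    return "S3"


profile = recommend(Workload(item_size_bytes=2_000, needs_single_digit_ms=True))
video = recommend(Workload(item_size_bytes=50 * 1024 * 1024))
searchable_video = recommend(
    Workload(item_size_bytes=50 * 1024 * 1024, needs_secondary_queries=True)
)
```

Size is checked first because it is the only hard constraint in the list. The remaining questions are trade-offs that a real decision would weigh rather than treat as booleans.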

Real-World Examples: DynamoDB and S3 in Action

Example 1: E-commerce Platform

Scenario: An e-commerce platform needs to store product information, user profiles, shopping carts, and product images.

Solution:

- DynamoDB tables:

  - Products (metadata, pricing, inventory, categories)

  - Users (profiles, preferences, order history)

  - Carts (active shopping carts and their contents)

  - Orders (completed orders and fulfillment status)

- S3 buckets:

  - Product images (high-resolution photos in multiple formats)

  - User uploads (custom product configurations)

  - Invoice PDFs (generated documents)

Integration: The product table in DynamoDB contains S3 URLs to the product images, allowing fast queries for products while storing the actual images cost-effectively.

Example 2: Content Management System

Scenario: A content management system needs to store articles, images, videos, and user comments.

Solution:

- DynamoDB tables:

  - Content (metadata, status, author, publication date)

  - Users (profiles, permissions)

  - Comments (linked to content items)

  - Analytics (view counts, engagement metrics)

- S3 buckets:

  - Article assets (images, videos, attachments)

  - User-generated content (uploads, profile pictures)

  - Static website assets (CSS, JavaScript, fonts)

Integration: Article content could be stored in either S3 (for long-form content with rich media) or DynamoDB (for shorter content), with DynamoDB being used for fast querying of articles by various criteria.

Example 3: IoT Application

Scenario: An IoT platform collects sensor data from devices and needs to store both real-time state and historical metrics.

Solution:

- DynamoDB tables:

  - Devices (metadata, current state, settings)

  - Alerts (active issues requiring attention)

  - CurrentReadings (latest values from each sensor)

- S3 buckets:

  - HistoricalData (time-series data from sensors)

  - DeviceLogs (detailed operational logs)

  - Firmware (device software updates)

Integration: Current device state is maintained in DynamoDB for fast access, while historical data is regularly archived to S3 for cost-effective storage and long-term analytics.

Conclusion: Complementary Services for Modern Applications

DynamoDB and S3 are not competing services but complementary tools designed for different aspects of data storage. DynamoDB excels at storing structured data that requires frequent, low-latency access via key-based lookups. S3 shines as a cost-effective, highly durable solution for storing large objects that don’t need millisecond access times.

Rather than choosing between them, modern applications often leverage both services together:

- DynamoDB for operational data: User accounts, product information, game state, metadata

- S3 for large content and less frequently accessed data: Media files, documents, archives, logs

By understanding the strengths and limitations of each service, you can design architecture that places your data in the optimal storage location based on its size, access pattern, and performance requirements.

When building applications that work with DynamoDB, consider using Dynomate to simplify table management, visualization, and query development. For integrated solutions using both DynamoDB and S3, Dynomate can help you design and optimize your DynamoDB tables while maintaining references to your S3 objects.

What’s your experience with DynamoDB and S3? Are you using them separately or together in your architecture? Share your insights in the comments below!