Chain DynamoDB Operations Like You Chain Promises

Here's the thing: every DynamoDB script you write is technical debt.

We built Query Requests so you can ship features, not maintain scripts.

Let's Talk About That Script You Wrote Last Week

You know the one. It queries the user table, grabs an email, hits the logs table, then maybe touches two more tables.

It works great... until someone needs to run it with different parameters. Or in staging. Or when that new engineer joins and asks "hey, where's that script you used?"

I've been there. At my last company, we had a /scripts folder with 73 DynamoDB utilities.

Seventy. Three.

Half of them did similar things. Nobody knew which ones still worked. Classic.

The Real Cost

- 30 minutes writing the script
- 20 minutes debugging it
- 60 minutes at 2 AM modifying it for a different use case

= $200+ for a one-off query

```javascript
// user-debug-script-v3-final-FINAL.js (we all have this file)
const AWS = require('aws-sdk');
const dynamodb = new AWS.DynamoDB.DocumentClient();

async function whyIsThisUsersDataWeird(userId) {
  try {
    // First, get the user
    const userProfile = await dynamodb.get({
      TableName: process.env.USERS_TABLE || 'Users-prod', // hope this is right
      Key: { pk: userId }
    }).promise();

    if (!userProfile.Item) {
      console.log('User not found, checking archive table...');
      // 20 more lines of fallback logic
    }

    const email = userProfile.Item?.email;

    // Now get their logs (why is this in a different table again?)
    const emailLogs = await dynamodb.query({
      TableName: 'EmailLogs-' + process.env.STAGE,
      KeyConditionExpression: 'email = :email',
      ExpressionAttributeValues: {
        ':email': email
      }
    }).promise();

    // ... 50 more lines
  } catch (error) {
    console.error('Check line 47, I think', error);
  }
}

// TODO: Add error handling
// TODO: Make this work in staging
// TODO: Delete this after the incident
```

What If Your Queries Were Actually Reusable?

Let me show you Query Requests. They're not just another GUI wrapper around DynamoDB; they're a different mental model for how you interact with your data.

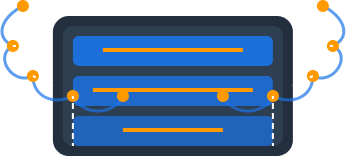

The Core Idea: Chainable Operations

A Query Request is a sequence of DynamoDB operations (Query, Update, Put, Delete) that execute in order. Here's the key insight: each operation can use results from previous operations.

1. Query: Get user profile
   - Table: `${USERS_TABLE}`
   - pk: `${userId}`
2. Query: Get user activity
   - Table: `${USERS_TABLE}`
   - pk: `${userId}#activity`
3. Query: Check email logs using user's email
   - Table: `${EMAIL_LOGS_TABLE}`
   - email: `${get_user.items[0].email}`
4. Update: Update debug timestamp
   - Table: `${USERS_TABLE}`
   - pk: `${userId}`

See what happened there? Four different operations, potentially across different tables, all working together. No promises to chain. No callbacks to manage. Just a clean, linear flow.
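To make "each operation can use results from previous operations" concrete, here is a minimal JavaScript sketch of a sequential chain. It is not how Dynomate implements Query Requests: an in-memory `tables` object stands in for DynamoDB, and the operation shape (a `name` plus a `run` function that receives the request variables and all earlier results) is a hypothetical illustration. Only `get_user` comes from the example chain; the other operation names are made up. The third operation reads the email returned by `get_user`, mirroring `${get_user.items[0].email}`.

```javascript
// Hypothetical sketch: in-memory tables stand in for DynamoDB.
const tables = {
  Users: [
    { pk: 'u1', email: 'ada@example.com', lastDebugAt: null },
    { pk: 'u1#activity', action: 'login' },
  ],
  EmailLogs: [{ email: 'ada@example.com', status: 'bounced' }],
};

// Exact-match lookup on one attribute, returning a DynamoDB-like { items, count }.
function query(table, attr, value) {
  const items = tables[table].filter((item) => item[attr] === value);
  return { items, count: items.length };
}

// Run operations in order; each sees the request variables plus every
// earlier operation's result, keyed by operation name.
function runChain(operations, vars) {
  const results = {};
  for (const op of operations) {
    results[op.name] = op.run({ vars, results });
  }
  return results;
}

const chain = [
  { name: 'get_user', run: ({ vars }) => query('Users', 'pk', vars.userId) },
  { name: 'get_activity', run: ({ vars }) => query('Users', 'pk', `${vars.userId}#activity`) },
  {
    name: 'check_email_logs',
    // Uses the email returned by the first operation.
    run: ({ results }) => query('EmailLogs', 'email', results.get_user.items[0].email),
  },
  {
    name: 'update_debug_timestamp',
    run: ({ vars }) => {
      const user = tables.Users.find((item) => item.pk === vars.userId);
      user.lastDebugAt = '2024-01-01T00:00:00Z'; // fixed value keeps the sketch deterministic
      return { updated: 1 };
    },
  },
];

const results = runChain(chain, { userId: 'u1' });
console.log(results.check_email_logs.items[0].status); // bounced
```

The point of the design is that operations never call each other; the runner owns ordering and hands each step a read-only view of what came before.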

Here's Where It Gets Good: Automated File Sync

Every Query Request automatically saves to your local filesystem as a YAML file:

```
~/dynomate/
├── collections/
│   ├── customer-support/
│   │   ├── debug-user-issues.yaml
│   │   ├── check-subscription.yaml
│   │   └── refund-flow.yaml
│   ├── ops/
│   │   ├── daily-metrics.yaml
│   │   └── table-health-check.yaml
│   └── development/
│       └── test-queries.yaml
```

Why this matters: `git add .`, `git commit`, `git push`.

Your entire team now has the same queries. No Slack messages. No "can you send me that script?" Just pull and run.

Variables That Actually Make Sense

Three levels of variables, each with a clear purpose:

1. Environment Variables

- Your `USERS_TABLE` points to different ARNs in dev/staging/prod
- Switch environments with one dropdown, and all queries just work

2. Request Variables

- `userId`, `dateRange`, `customerEmail`: whatever makes sense
- Your on-call can run the same query with different inputs

3. Operation Chaining

- `${get_user.items[0].email}`: grab the email from the first query
- `${check_inventory.count}`: use the count from an earlier operation
- Build complex flows without complex code

Write once, run anywhere, with any parameters.
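One way to picture the three variable levels is a resolver that fills `${...}` placeholders from operation results, request variables, and environment variables. The sketch below assumes that lookup order and a dotted-path syntax with `[index]` segments; both are illustrative assumptions, not documented Dynomate behavior, and `resolve` and `getPath` are hypothetical helpers.

```javascript
// Walk a dotted path with optional [index] segments, e.g. "get_user.items[0].email".
function getPath(obj, path) {
  const keys = path.replace(/\[(\d+)\]/g, '.$1').split('.');
  let current = obj;
  for (const key of keys) {
    if (current == null) return undefined;
    current = current[key];
  }
  return current;
}

// Replace each ${...} placeholder, checking scopes in an assumed order:
// operation results, then request variables, then environment variables.
function resolve(template, { env, request, results }) {
  return template.replace(/\$\{([^}]+)\}/g, (_match, expr) => {
    const path = expr.trim();
    for (const scope of [results, request, env]) {
      const value = getPath(scope, path);
      if (value !== undefined) return String(value);
    }
    throw new Error(`Unresolved variable: ${path}`);
  });
}

const scopes = {
  env: { USERS_TABLE: 'Users-staging', EMAIL_LOGS_TABLE: 'EmailLogs-staging' },
  request: { userId: 'u1' },
  results: { get_user: { items: [{ email: 'ada@example.com' }], count: 1 } },
};

console.log(resolve('${USERS_TABLE}', scopes)); // Users-staging
console.log(resolve('${userId}#activity', scopes)); // u1#activity
console.log(resolve('${get_user.items[0].email}', scopes)); // ada@example.com
```

Failing loudly on an unresolved placeholder is a deliberate choice here: silently sending an empty key to DynamoDB is exactly the kind of bug the hand-written script above would hit.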

A Real Example From Last Week

One of our users, a fintech startup, replaced 34 customer debugging scripts with 5 Query Requests. Here's one they shared:

The Old Way

- Four different scripts
- Three Slack messages to engineering
- 45 minutes average resolution

With Query Requests

1. Support runs the "investigate-transaction" request
2. Enters the customer ID
3. All four tables are queried automatically
4. Results in 8 seconds

Impact

- P50 resolution time dropped from 45 minutes to 12 minutes
- $2,750/day saved (33 minutes × 50 tickets/day × $100/hour)
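The savings figure follows directly from the numbers in the example. This small helper just reproduces the arithmetic (33 minutes saved per ticket, 50 tickets a day, $100 an hour); `dailySavings` is a hypothetical name.

```javascript
// Reproduces the savings arithmetic from the example above.
function dailySavings({ minutesSavedPerTicket, ticketsPerDay, hourlyRate }) {
  // Total minutes saved per day times the hourly cost, converted from minutes to hours.
  return (minutesSavedPerTicket * ticketsPerDay * hourlyRate) / 60;
}

const minutesSaved = 45 - 12; // resolution time before minus after
console.log(minutesSaved); // 33
console.log(dailySavings({ minutesSavedPerTicket: minutesSaved, ticketsPerDay: 50, hourlyRate: 100 })); // 2750
```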

The Features That Actually Matter

After talking to 200+ DynamoDB users, here's what we built:

Operation Chaining

Use any result from previous operations in subsequent queries

Multi-Environment Support

Same query works everywhere. No more 'works on my machine' for database queries

Git-Friendly Storage

Collections save as JSON/YAML. Commit them. Review them in PRs. Version them properly

Parallel Execution

Mark operations as independent and they run simultaneously. Because waiting is overrated

Actual Testing

Write test assertions for your queries. Test database operations without mocking everything

Pure Rust Performance

Not Electron! Native performance. Starts in under 200ms, uses 30MB of RAM
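Parallel Execution implies some notion of which operations depend on which. A minimal sketch, assuming each operation declares a hypothetical `dependsOn` list: group operations into stages whose members don't depend on each other, then run each stage with `Promise.all`. This illustrates the general technique (level-by-level topological scheduling), not Dynomate's actual scheduler; `planStages` and `runStages` are made-up names.

```javascript
// Group operations into stages; every operation in a stage only depends on
// operations from earlier stages, so a stage can run concurrently.
function planStages(operations) {
  const done = new Set();
  const remaining = [...operations];
  const stages = [];
  while (remaining.length > 0) {
    const ready = remaining.filter((op) => (op.dependsOn || []).every((dep) => done.has(dep)));
    if (ready.length === 0) throw new Error('Dependency cycle or unknown dependency');
    stages.push(ready.map((op) => op.name));
    for (const op of ready) {
      done.add(op.name);
      remaining.splice(remaining.indexOf(op), 1);
    }
  }
  return stages;
}

async function runStages(operations, stages) {
  const byName = new Map(operations.map((op) => [op.name, op]));
  const results = {};
  for (const stage of stages) {
    // Independent operations in this stage run concurrently.
    const outputs = await Promise.all(stage.map((name) => byName.get(name).run(results)));
    stage.forEach((name, i) => { results[name] = outputs[i]; });
  }
  return results;
}

// Stub operations standing in for real DynamoDB calls.
const ops = [
  { name: 'get_user', run: async () => ({ items: [{ email: 'ada@example.com' }] }) },
  { name: 'get_activity', run: async () => ({ count: 3 }) },
  {
    name: 'check_email_logs',
    dependsOn: ['get_user'],
    run: async (r) => ({ email: r.get_user.items[0].email }),
  },
];

const stages = planStages(ops);
console.log(JSON.stringify(stages)); // [["get_user","get_activity"],["check_email_logs"]]
runStages(ops, stages).then((results) => console.log(results.check_email_logs.email)); // ada@example.com
```

Here `get_user` and `get_activity` share a stage because neither needs the other, while `check_email_logs` waits for the stage that produced the email.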

Let's Address the Elephant in the Room

"But I can just write a script!"

You absolutely can. And if you're doing a true one-off query, maybe you should. But here's what I've learned from seeing hundreds of "one-off" scripts:

They're never one-off

Someone will need it again in 3 weeks

They become tribal knowledge

"Ask Sarah, she has a script for that"

They don't scale with the team

New engineers recreate the same scripts

They're not discoverable

Good luck finding that script from 6 months ago

Query Requests aren't about replacing every script. They're about building a library of operations your whole team can use, modify, and depend on.

Who This Is Really For

You, if you're tired of writing the same boilerplate

Stop copy-pasting DynamoDB client initialization code.

Your team lead who wants consistent debugging tools

Give everyone the same powerful queries instead of random scripts.

The on-call engineer at 3 AM

They'll thank you when they can run pre-built queries instead of writing code half-asleep.

The new engineer who joined yesterday

They can be productive immediately with your query library.

Not for you if you enjoy maintaining script folders

Some people like pain. We don't judge.

The Bottom Line

Every hour you spend writing DynamoDB scripts is an hour not spent shipping features. We built Query Requests because we got tired of watching great engineers waste time on solved problems.

The math is simple:

- 30 min: average script writing time
- 20 min: average script debugging time
- 2-3x: times used before being forgotten

That's 50 minutes of senior engineering time for something used twice.

At $150/hour, each script costs $125 to create and saves maybe $50 of future time.

Net loss: $75 per script

With Query Requests, that same operation takes 5 minutes to build and can be used hundreds of times by your entire team.
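The same back-of-the-envelope math as a function, using the article's estimates: $150/hour, 50 minutes to write and debug, roughly $50 of future savings, and 5 minutes to build the equivalent Query Request. These are the figures quoted above, not measurements; `scriptEconomics` is a hypothetical helper.

```javascript
// Back-of-the-envelope script economics. All inputs are the article's
// estimates, not measured values.
function scriptEconomics({ writeMin, debugMin, hourlyRate, futureSavings }) {
  // Multiply before dividing so these particular figures come out exact.
  const cost = ((writeMin + debugMin) * hourlyRate) / 60;
  return { cost, net: futureSavings - cost };
}

const script = scriptEconomics({ writeMin: 30, debugMin: 20, hourlyRate: 150, futureSavings: 50 });
console.log(script); // { cost: 125, net: -75 }

// Building the same operation as a Query Request: 5 minutes at $150/hour.
const queryRequestCost = (5 * 150) / 60;
console.log(queryRequestCost); // 12.5
```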

Try It Yourself

Look, I could write another 1000 words about features, but you know what works better? Just trying it.

Grab that annoying cross-table query you've been putting off. Build it as a Query Request. See how it feels.

If it doesn't save you time, uninstall it and I'll personally send you a coffee card for wasting 10 minutes of your day.

No credit card • No sales calls • Just better DynamoDB queries

Quick Questions I Know You Have

"How's the performance?"

Negligible overhead. We're talking 5-10ms on top of DynamoDB's response time. Your network latency varies more than that.

"Does it work with single-table design?"

Absolutely. It actually works better with single-table design, since you're constantly querying multiple entity types.

"What about DynamoDB Local?"

Works great. Same with LocalStack. If it speaks DynamoDB API, we support it.

"Is this another Electron app?"

🤮 Hell no! Pure Rust, native performance. Starts in under 200ms, uses 30MB of RAM. Because your database tools should be faster than your database.

Ship better features, not more scripts.

— The Dynomate Team